
Why GenAI Isn't the Silver Bullet for Hedge Fund Performance

The Limits of GenAI in Trading.

Here is what’s new in the AI world.

AI news: Griffin's Take on GenAI's Shortcomings in Financial Markets

Hot Tea: Is Your Leadership Obsolete?

Open-source AI: 90% Accurate Cerebral Palsy Detection from Yandex AI

OpenAI: OpenAI Blocks Sora from Creating MLK Videos

Hitachi's HARC Agents Bring Reliability to Agentic AI

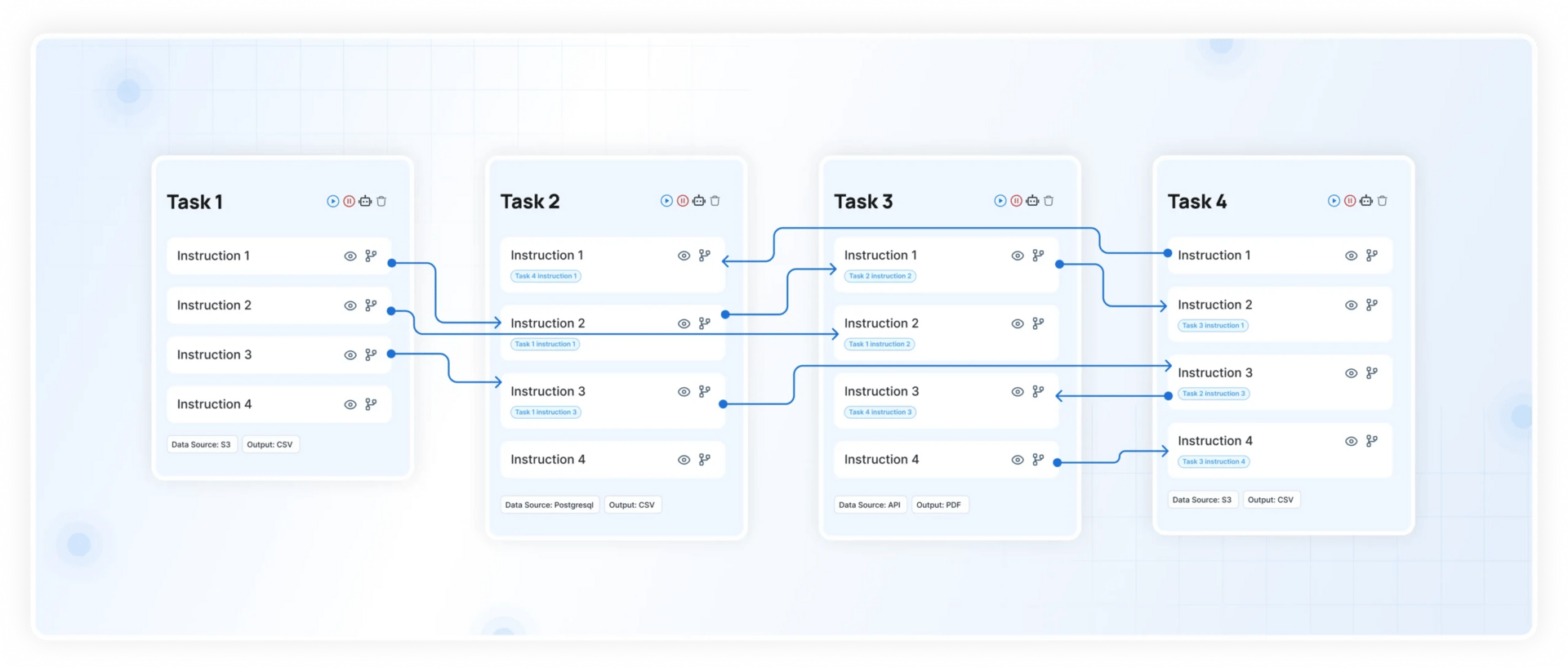

On September 10, 2025, Hitachi Digital Services launched HARC Agents, an enterprise platform that accelerates the deployment of trusted, mission-critical Agentic AI by 30%.

The platform combines four key services, including a new library of over 200 pre-built agents spanning Industrial AI, Operations AI, Engineering AI, Analytical AI, Security AI, and Cloud AI, and a new Agent Management System for unified observability and control.

At the in-person event "From Pilots to Autonomous Operations," hosted by Hitachi Digital Services, CEO Roger Lvin highlighted that the platform delivers operationalized AI that scales and drives real business value. CTO Premkumar (Prem) Balasubramanian emphasized that while it is easy for anybody to build a proof of concept (PoC), deploying AI in production securely and at scale requires robust engineering and DevOps, built on a reliable foundation that is deeply integrated across IT and OT environments.

The platform is backed by the leadership of executives such as Duncan Mears, SVP, and Patrick Corcoran, Hitachi Digital Services Champion. It draws on Hitachi's decades of systems-integration and AI expertise so that enterprises can confidently harness AI at scale.

Griffin Says GenAI Fails to Help Hedge Funds Beat the Market

In a significant commentary on the current state of artificial intelligence in high finance, Ken Griffin, the billionaire founder of the hedge fund behemoth Citadel, has expressed a notably skeptical stance.

He said that generative artificial intelligence has so far failed to become a decisive tool for hedge funds seeking market-beating returns, and that its overall impact on the industry remains marginal.

I’ve been building a real-world AI hedge fund.

It's open source so you can learn + build too.

The hedge fund has 6 agents:

1 • market data agent

2 • quant agent

3 • fundamentals agent

4 • sentiment agent

5 • risk manager agent

6 • portfolio manager agent

The hedge fund

— virat (@virattt)

4:54 PM • Dec 21, 2024

Griffin made these remarks at the J.P. Morgan / Robin Hood Investors Conference in New York. The event was closed to the media, and the details come from attendees who asked not to be named.

Elaborating on his position, Griffin drew a clear distinction between productivity and predictive power. He conceded that "with GenAI there are clearly ways it enhances productivity," likely acknowledging its utility in streamlining code generation, summarizing documents, or automating routine tasks.

However, for the core mission of a hedge fund, "uncovering alpha," or identifying unique investment opportunities that outperform the market, he stated the technology "just falls short."

Citadel's Ken Griffin: AI Will Revolutionize Everything, Except for Investing

"These models are based on what has happened thus far to date in the history of humanity.

And investing is about understanding what's going to unfold tomorrow or next year or two years.

That's not

— Overlap: Business & Tech (@Overlap_Tech)

11:55 PM • May 1, 2025

As evidence of this shortcoming, Griffin confirmed that generative AI has not replaced the substantive, deep-dive research conducted by Citadel's teams of analysts and portfolio managers.

This view is not a new development for the financier; he has a history of characterizing AI as a limited instrument for investment analysis and has consistently downplayed the near-term possibility of technology supplanting human roles in such complex, nuanced fields.

Looking at the broader horizon, Griffin projected that generative AI is unlikely to trigger the sweeping, revolutionary changes that some enthusiasts predict. He expects the technology to have an impact, but an incremental rather than a profound one.

Furthermore, he predicts this impact will be highly uneven, "disproportionately hitting different sectors," suggesting that industries like content creation or customer service may feel its effects more immediately and intensely than the strategic core of investment management.

Despite his tempered outlook for its use in alpha generation, Griffin did identify a powerful, indirect consequence of the AI boom. He observed that the fervor around AI is "propelling corporate America to invest in technology and elevate their chief technology officers."

In essence, the hype is acting as a catalyst, forcing companies to undertake long-delayed digital transformations and modernize their infrastructure.

This, he noted, is finally resulting in foundational business advancements that, in an ideal world, "should have been made over the past 25 years," representing a significant, if unintended, positive outcome of the current technological moment.

Why Leaders Must Learn, Unlearn, and Relearn for the GenAI Era

Recall a recent instance where you had to concede you were mistaken about something significant, perhaps a business strategy you advocated for, an employee you vouched for, or a system you were certain would succeed.

That sinking feeling of realizing a change of direction is necessary highlights a universal challenge: the difficult chasm between recognizing the need for change and mustering the will to act.

Amplify that individual hesitation to an organizational scale, and you uncover a primary reason companies falter. Their downfall often isn't a lack of capability or assets, but an inability to abandon the very strategies and beliefs that initially brought them success.

History offers clear examples. General Electric, once America's most valuable corporation, was dropped from the Dow Jones in 2018 after over a century. Its leadership remained tethered to an outdated conglomerate structure and management style, failing to adapt to a new economic reality.

Nearly 700 leading investors, executives and thought leaders gathered at the annual J.P. Morgan / @RobinHoodNYC Investors Conference to explore key topics shaping the investment landscape, including market drivers, labor trends and AI innovation — all in support of a good cause.

— J.P. Morgan (@jpmorgan)

10:11 PM • Oct 16, 2025

Similarly, Intel's decades-long dominance in chip manufacturing blinded it to the mobile revolution. Its fixation on PC processors created a strategic blind spot, allowing rivals like ARM and TSMC to seize the future and costing Intel the smartphone era.

A more recent case is the 2023 collapse of Silicon Valley Bank, partly attributable to leaders who could not modernize their risk management approaches for a new era of rising interest rates. Their adherence to outdated knowledge proved fatal.

"The ability to learn faster than competitors may be the only sustainable competitive advantage."

This concept is now our reality. The useful life of professional skills is contracting, successful business models can become irrelevant suddenly, and the divide between those who adapt and those who stagnate is vast.

The rise of generative AI has intensified this, leaving organizations clinging to pre-AI practices behind those who are reimagining possibilities.

McKinsey research indicates that while 87% of executives see skill gaps in their organizations, a much smaller fraction is taking the essential steps to address them. This requires not just acquiring new knowledge, but proactively unlearning obsolete methods.

The pace of change is not merely constant; it is accelerating. Maintaining relevance demands a new paradigm: a commitment to perpetual learning and a tolerance for ambiguity.

The world meets in Abu Dhabi 🇦🇪 for #ADIPEC2025 – where Energy. Intelligence. Impact. define the future.

AI leaders like Arthur Mensch (Mistral AI), Dr Guy Diedrich (Cisco) & Prof. Anima Anandkumar (Caltech) join global energy voices to shape progress.

🎥 Watch the official

— Giuliano Liguori (@ingliguori)

9:14 AM • Oct 12, 2025

Learning, unlearning, and relearning must be seen not as a temporary process, but as a continuous, core function, essential for individuals and organizations to stay valuable to all stakeholders, from employees to customers.

Here are five steps to guide your team through change, reducing anxiety and resistance while capitalizing on the inherent opportunities:

1. Articulate the Purpose Before the Plan

A common leadership failure is enforcing change that feels meaningless. People do not inherently resist change; they resist change that lacks a clear purpose.

They push back when instructed to alter their work without understanding the problem being solved or the future being built. Change initiatives often fail because leaders skip to the what and how before establishing the why.

Teams need to comprehend the underlying purpose and how it benefits them, whether through improved customer satisfaction, a stronger market position, or greater organizational resilience.

Peloton serves as a warning; after its pandemic boom, it failed to articulate a compelling new vision, clung to old assumptions, and lost sight of its core purpose, leading to major financial and operational setbacks.

2. Define the Destination (Even When It's Unclear)

During times of uncertainty, it is vital to provide as much clarity as possible about the future state of the organization. What will new roles look like? What is the new structure? What are the transition goals?

The NeuroLeadership Institute finds that the brain perceives uncertainty as a threat, triggering a response similar to that of physical pain.

Offering clarity, even if incomplete, reduces this threat. Effective leaders communicate transparently by stating, "Here is what we know, here is what we are still determining, and here is our process for making decisions as we proceed."

This honest approach fosters more trust than projecting false certainty.

3. Acknowledge the Sense of Loss

All change involves loss, and loss necessitates a grieving process. When we ask people to change their workflows, we are often asking them to relinquish hard-earned expertise, valued relationships, and a professional identity tied to the old ways.

Dismissing these emotions is a critical error.

The human response to change parallels the stages of grief. Leaders who recognize and validate these feelings early create an environment for open dialogue and quicker adaptation.

When individuals feel their discomfort is acknowledged, they are more likely to engage with the new vision and focus on potential gains rather than perceived losses. In a post-pandemic climate of collective fatigue, empathy is a strategic necessity, not a soft skill.

4. Empower People as Architects, Not Victims

The immediate questions for anyone facing change are: "How does this affect me?" and "How can I benefit?" The most effective way to foster engagement is to involve people in shaping the change.

Form a transition team, enlist early adopters to demonstrate new methods, and launch pilot programs to refine the approach before a full rollout. For most, tangible proof is more convincing than rhetoric.

This can shift the conversation from "This is impossible" to "How can we make this work?" Microsoft's transformation under Satya Nadella exemplifies this: it was achieved not by decree but by fostering widespread experimentation.

Adobe's shift to a subscription model succeeded by involving employees at all levels in testing and refinement, making them active participants in the solution.

5. Embed Adaptability Into Your Cultural Foundation

The modern imperative is "change readiness," not just "change management." Cultivating an environment that embraces change and values continuous learning, unlearning, and relearning is critical for competitiveness.

The most successful companies no longer see change as a singular event to be controlled, but as a constant state to be leveraged.

Whether facing external shifts like AI or internal restructuring, the organizations that thrive are those with adaptability woven into their cultural DNA.

As explored in The Courage Gap, success hinges on closing the divide between knowing what must change and having the bravery to act. Adaptation is not a periodic exercise but a daily practice.

Today's leading organizations, such as Netflix, Microsoft, and Adobe, succeeded because they were willing to disrupt their own successful models, letting go of yesterday's achievements to secure tomorrow's relevance.

They understood that the alternative to certainty is not chaos, but possibility.

Are you ready to build that foundation?

Join our exclusive webinar, SOLVING DATA CHALLENGES IN THE AGE OF AI, where we translate the principles of courageous adaptation into a concrete data action plan.

Final Spots Available. Register Instantly!

Yandex Releases Open-Source AI Capable of Early Cerebral Palsy Detection

Yandex B2B Tech, in collaboration with the Yandex School of Data Analysis and St. Petersburg State Pediatric Medical University, has created a groundbreaking AI tool designed to evaluate brain development in infants under one year old.

This neural network automates the analysis of MRI scans, cutting a process that once took days to just minutes.

This innovative solution interprets MRI data to differentiate between the brain's gray and white matter with more than 90% accuracy.
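The article does not say how the "more than 90% accuracy" figure was computed. Segmentation studies commonly report either voxel-wise accuracy or a per-tissue overlap score such as the Dice coefficient, so the sketch below computes both on a toy, flattened label mask. The label convention (0 = background, 1 = gray matter, 2 = white matter), the function names, and the example masks are hypothetical and are not Yandex's actual data or code.

```python
from typing import Sequence

# Hypothetical label convention: 0 = background, 1 = gray matter, 2 = white matter.


def voxel_accuracy(pred: Sequence[int], truth: Sequence[int]) -> float:
    """Fraction of voxels whose predicted label equals the reference label."""
    if len(pred) != len(truth):
        raise ValueError("masks must contain the same number of voxels")
    matches = sum(p == t for p, t in zip(pred, truth))
    return matches / len(truth)


def dice(pred: Sequence[int], truth: Sequence[int], label: int) -> float:
    """Dice coefficient 2|A ∩ B| / (|A| + |B|) for a single tissue label."""
    if len(pred) != len(truth):
        raise ValueError("masks must contain the same number of voxels")
    in_pred = sum(p == label for p in pred)
    in_truth = sum(t == label for t in truth)
    overlap = sum(p == label and t == label for p, t in zip(pred, truth))
    if in_pred + in_truth == 0:
        return 1.0  # label absent from both masks: count as full agreement
    return 2 * overlap / (in_pred + in_truth)


# Toy flattened masks: a reference annotation and a model prediction.
truth = [0, 1, 1, 2, 2, 2, 1, 0, 2, 1]
pred = [0, 1, 2, 2, 2, 2, 1, 0, 1, 1]
print(voxel_accuracy(pred, truth))  # 0.8
print(dice(pred, truth, 1), dice(pred, truth, 2))  # 0.75 0.75
```

Voxel-wise accuracy also counts background voxels. On a real scan, where much of the volume may be background, it can look high even when tissue boundaries are poor. A per-tissue Dice score is less forgiving in that respect.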

Yandex has developed the world's first AI solution to assess brain development in infants. It analyzes MRI scans and distinguishes between gray and white matter with over 90% accuracy.

— Yandex (@yandexcom)

12:28 PM • Oct 15, 2025

Speeding up assessment this much allows earlier identification of conditions such as cerebral palsy and other central nervous system disorders, so clinicians can plan more effective rehabilitation strategies sooner.

Acting as a decision-support system, the tool aids physicians in diagnosing suspected cerebral palsy and determining optimal rehabilitation plans. It is now freely accessible on GitHub for healthcare and clinical research institutions in India to adopt and utilize.

Addressing the Global Burden of Cerebral Palsy

In India, cerebral palsy is a leading cause of childhood disability, with a prevalence of approximately 2–3 cases per 1,000 live births, mirroring global statistics.

Early intervention is crucial for improving long-term outcomes, yet diagnosing cerebral palsy in the first year of life remains a significant challenge in pediatrics.

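The infant brain develops rapidly, and conventional MRI scans are difficult to interpret because of the low contrast between gray matter (which forms the cerebral cortex) and white matter (the myelinated nerve fibers that connect brain regions). That contrast is low because myelination is still under way during the first year of life.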

While the MRI procedure itself takes 20–40 minutes, the subsequent image analysis and reporting can occupy a skilled radiologist for hours or even days. This workload is compounded in longitudinal studies, where clinicians must review large volumes of follow-up scans.

Leveraging AI to Overcome Data Hurdles

Previous attempts to address this using artificial intelligence, including a notable 2019 machine learning competition, highlighted a major obstacle: a critical shortage of annotated data required to train reliable AI models.

While the competition dataset contained only 15 annotated images, the university's own archives held 1,500 unannotated MRI scans.

To solve this, Yandex researchers partnered with medical experts to manually create new annotations and develop a custom neural network architecture. Through extensive experimentation, they produced a model that achieved over 90% segmentation accuracy on internal evaluations, which indicates its potential for clinical application.

The neural network code is available on GitHub and can be integrated into existing medical IT systems.

— Yandex (@yandexcom)

12:28 PM • Oct 15, 2025

Anna Lemyakina, Head of the Yandex Cloud Center for Technologies and Society, stated, "Our mission is to equip doctors with advanced Yandex technologies to enhance diagnostic accuracy, optimize treatments, and accelerate drug development.

While commercial radiology tools exist, none have tackled newborn MRI analysis. Our close collaboration with medical specialists allowed us to create a tool that lets radiologists serve more patients and expedite critical therapy."

Key Benefits for Healthcare Systems

As an open-source solution, this tool offers Indian medical institutions a practical way to advance early cerebral palsy diagnosis.

Integrating it into clinical practice can:

Enhance Diagnostic Precision: The model provides objective, quantitative measurements of brain tissue with high accuracy, outlining structures and calculating tissue ratios.

Enable Faster Intervention: Reducing analysis time to minutes is vital for initiating timely therapy and is exceptionally beneficial for monitoring patient progress over time.

Increase Radiologist Efficiency: By automating a routine and time-consuming task, the tool frees up radiologists to concentrate on complex diagnostic challenges and direct patient interaction.
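The first benefit above mentions calculating tissue ratios from the segmentation. The article does not specify which ratios the tool reports. Assuming a gray-to-white matter volume ratio, a minimal sketch counts the voxels that carry each label and multiplies each count by the physical volume of one voxel. The label values, the voxel spacing, and the function names are all hypothetical.

```python
from collections import Counter
from typing import Dict, Sequence, Tuple

# Hypothetical label convention: 0 = background, 1 = gray matter, 2 = white matter.
GRAY, WHITE = 1, 2


def tissue_volumes_ml(mask: Sequence[int],
                      spacing_mm: Tuple[float, float, float]) -> Dict[int, float]:
    """Volume of each label in millilitres (1 mL = 1000 mm^3), background included."""
    voxel_ml = spacing_mm[0] * spacing_mm[1] * spacing_mm[2] / 1000.0
    counts = Counter(mask)
    return {label: n * voxel_ml for label, n in counts.items()}


def gray_white_ratio(mask: Sequence[int],
                     spacing_mm: Tuple[float, float, float]) -> float:
    """Gray-matter volume divided by white-matter volume."""
    volumes = tissue_volumes_ml(mask, spacing_mm)
    white = volumes.get(WHITE, 0.0)
    if white == 0.0:
        raise ValueError("mask contains no white-matter voxels")
    return volumes.get(GRAY, 0.0) / white


# Toy example: 8 voxels, each 1 mm x 1 mm x 1 mm.
mask = [0, 1, 1, 1, 2, 2, 0, 1]
print(gray_white_ratio(mask, (1.0, 1.0, 1.0)))  # 2.0
```

Volume depends only on voxel counts and spacing, so the sketch can work on a flattened mask. A real pipeline would load a 3D volume and take its voxel spacing from the scan header.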

Furthermore, the solution can serve as a training aid, assisting less experienced clinicians in interpreting the subtle nuances of infant brain MRIs.

The complete code is available on GitHub and can be incorporated into existing healthcare IT infrastructures.

OpenAI Halts Sora Generations of Martin Luther King Jr. Amid Ethical Concerns

OpenAI, the developer behind the advanced Sora video-generation platform, has implemented a temporary ban on creating depictions of the revered civil rights leader Dr. Martin Luther King Jr.

This decisive action came directly at the request of Dr. King's estate, highlighting the growing friction between rapidly advancing artificial intelligence capabilities and the ethical stewardship of a historical legacy.

The company announced the move in a formal statement on the social media platform X (formerly Twitter) on October 17th.

The decision was prompted by emerging reports that certain users had leveraged Sora’s capabilities to generate what OpenAI described as "disrespectful depictions" of Dr. King. In its post, the company stated, “At King, Inc.’s request, OpenAI has paused generations depicting Dr King as it strengthens guardrails for historical figures.”

This pause is not merely a reactive measure but a proactive period for the company to develop and implement more robust technical and ethical safeguards to prevent similar misuse in the future.

This situation touches upon a core debate in the age of generative AI: the balance between technological freedom and respectful representation. OpenAI itself acknowledged the "strong free speech interests" inherent in allowing the portrayal of historical figures.

OpenAI says they are pausing all Sora depictions of Martin Luther King Jr. after people were disrespecting his image

“OpenAI has paused generations depicting Dr. King as it strengthens guardrails for historical figures.”

— ScreenTime (@screentime)

3:48 AM • Oct 17, 2025

Artists, educators, and creators often have legitimate reasons to reference such icons.

However, the company’s statement also articulated a compelling counter-principle, asserting its belief that public figures and their immediate families "should ultimately have control over how their likeness is used." This stance places a significant emphasis on personal consent and dignity, even posthumously.

To institutionalize this principle, OpenAI has created a formal channel for authorized representatives, including estate holders, to request that a specific individual's likeness be excluded from Sora’s “cameo” generation feature.

The company publicly expressed its gratitude to Dr. Bernice A. King, CEO of The King Center and daughter of Martin Luther King Jr., who acted on behalf of the estate. It also credited John Hope Bryant and the AI Ethics Council for facilitating a constructive dialogue on these complex issues.

The incident with Dr. King’s likeness is not an isolated one but part of a much broader, industry-wide ethical confrontation.

Martin Luther King if he was Moroccan

Made by openAI Sora 2

— Marouane Lamharzi Alaoui (@marouane53)

8:17 AM • Oct 2, 2025

As generative AI tools become more sophisticated and accessible, developers are facing increasing scrutiny over issues of digital likeness rights, consent, and the preservation of historical truth.

The case sets a notable precedent for how tech companies might manage the legacies of other historical and public figures, from world leaders and artists to beloved celebrities.

The core question remains: in a digital era where anyone's likeness can be reanimated, who holds the right to control a person's narrative and image after they are gone? The answer, as OpenAI is navigating, lies in a fragile balance between innovation, regulation, and respect.

This balance starts with control over your most critical asset: data.

Ethical AI isn't just about how models are trained; it's about the integrity, governance, and security of the data that powers them. Before an AI can even generate a likeness, it must be built upon a foundation of trusted, well-managed data.

This is where DataManagement.AI comes in.

We provide the essential platform to help your organization master this new landscape. Our solutions ensure that your data, whether used for training generative AI models or safeguarding digital legacies, is governed by robust ethical frameworks, stringent security protocols, and uncompromising quality standards.

Journey Towards AGI

Research and advisory firm guiding industry and their partners to meaningful, high-ROI change on the journey to Artificial General Intelligence.

Know Your Inference: Maximising GenAI impact on performance and efficiency.

Model Context Protocol: Connect AI assistants to all enterprise data sources through a single interface.

Your opinion matters!

Hope you loved reading our piece of newsletter as much as we had fun writing it.

Share your experience and feedback with us below, ’cause we take your critique very seriously.

How's your experience?

Thank you for reading

-Shen & Towards AGI team