Why Banking's GenAI Future is Built on Engineering Quality

Banking's AI Revolution Will Be Engineered, Not Prompted.

Here is what’s new in the AI world.

AI news: The Hidden Make-or-Break for Banking AI

Hot Tea: Hacking the Content, Not the Code

Open AI: The End of the Supercomputer Wait?

Open AI: Taking Over Feeds, One Post at a Time

From Idea to Automation: Build Intelligent Agents with AgentsX

Looking to streamline complex workflows with intelligent automation? Discover AgentsX, a platform built specifically to help you create, deploy, and manage powerful AI agents.

It empowers you to design sophisticated multi-agent workflows that can transform operations across banking, insurance, retail, and more, turning ambitious ideas into practical, automated reality.

From Data to Deal Flow: How Top Firms Are Harnessing Generative AI

You are operating in an era where Generative AI is rapidly accelerating software development within banking and financial services.

This promises immense innovation, but you must recognize that its true potential hinges entirely on one critical factor: whether your Quality Engineering (QE) practices can evolve just as fast.

In your highly regulated world, QE is no longer a back-office checkpoint; it has become the core enabler and essential guardrail for safe, compliant AI adoption.

If your testing, governance, and trust frameworks do not keep pace, GenAI will not reduce your risk; it will significantly increase your operational and compliance exposure.

The acceleration is real. GenAI can boost your developers' productivity by 20–50%, allowing for faster code generation and iteration. However, for you, faster development does not automatically mean safer innovation.

In fact, as much as 40% of GenAI-generated code may require remediation.

When code can be produced almost instantly, you need similarly automated and intelligent quality processes to catch errors before they reach production. Your challenge is to balance this new speed with the non-negotiable discipline required by financial regulations, ensuring every innovation maintains accuracy, security, and customer trust.

Embedding GenAI into Quality Engineering

To meet this challenge, you cannot treat quality as an afterthought. You must embed GenAI directly into your quality workflows. This transforms QE from a bottleneck into a powerful accelerator.

GenAI can automate the generation of complex test scenarios tailored to your specific applications and create vast amounts of regulatory-compliant synthetic test data on demand. This modernized approach is essential for keeping up with AI-driven development cycles.
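Whatever produces synthetic test data, whether a GenAI model or a script, its output still has to pass the same validation rules that real data does. As a minimal, rule-based sketch (a swapped-in technique, not a GenAI pipeline and not any vendor's tool), the Python below generates fictitious UK-format IBANs that satisfy the ISO 7064 mod 97-10 checksum that IBANs use. A payment-validation test suite could use such records without ever touching real customer accounts. The bank code `TEST` and all helper names are hypothetical, and the sketch checks only length and checksum, not full national account structure.

```python
import random
import string

def to_digits(s: str) -> str:
    """Map characters to digits per the IBAN rules: 0-9 stay, A=10 ... Z=35."""
    return "".join(str(int(ch, 36)) for ch in s.upper())

def iban_check_digits(country: str, bban: str) -> str:
    """Compute the two check digits with the mod 97-10 rule used by IBANs."""
    remainder = int(to_digits(bban + country + "00")) % 97
    return f"{98 - remainder:02d}"

def is_valid_iban(iban: str) -> bool:
    """Valid when the rearranged IBAN (first four chars moved to the end) is 1 mod 97."""
    return int(to_digits(iban[4:] + iban[:4])) % 97 == 1

def synthetic_iban(rng: random.Random, country: str = "GB", bank_code: str = "TEST",
                   account_digits: int = 14) -> str:
    """Build a fictitious IBAN that passes the checksum; 'TEST' is a made-up bank code."""
    account = "".join(rng.choice(string.digits) for _ in range(account_digits))
    bban = bank_code + account
    return country + iban_check_digits(country, bban) + bban

rng = random.Random(42)  # fixed seed so every test run gets the same fixtures
fixtures = [synthetic_iban(rng) for _ in range(5)]
for iban in fixtures:
    print(iban)
```

Seeding the generator keeps fixtures reproducible across test runs, which matters when a failing case has to be replayed for an auditor.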

Your journey should start now. As Dror Avrilingi of Amdocs states,

Your use of GenAI in QE needs to grow as fast, or faster, than your appetite for AI-powered innovation.

Early adopters are already seeing transformative results, including a 33% decrease in testing design time, a 50% improvement in test coverage, and a 60% decrease in testing certification time.

For you, delaying this modernization means falling behind while assuming greater risk.

Governing Intelligence: QE as Your AI Trust Framework

As you integrate Large Language Models (LLMs) into customer-facing products, your quality engineering function must expand its role to become your primary AI governance mechanism. LLMs, on their own, often do not meet the stringent requirements of financial services for accuracy and reliability.

Your QE teams must establish continuous testing frameworks focused on context understanding, content evaluation, and bias detection. A robust, non-stop feedback loop is crucial to help these models adapt, improve, and avoid errors that could erode customer trust or breach compliance.
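One way to make "continuous testing" concrete is a regression suite of prompts with required and forbidden content, re-run on every model or prompt change. The Python below is a minimal harness under that assumption: `EvalCase`, `run_eval`, `stub_model`, and `RELEASE_THRESHOLD` are hypothetical names, and simple substring checks stand in for the richer context, content, and bias evaluations described above.

```python
from dataclasses import dataclass, field
from typing import Callable

@dataclass
class EvalCase:
    """One regression check: a prompt plus phrases the answer must / must not contain."""
    prompt: str
    must_include: list[str] = field(default_factory=list)
    must_not_include: list[str] = field(default_factory=list)

def run_eval(model: Callable[[str], str], cases: list[EvalCase]) -> tuple[float, list[str]]:
    """Run every case against the model; return the pass rate and failure descriptions."""
    failures = []
    for case in cases:
        answer = model(case.prompt).lower()
        missing = [p for p in case.must_include if p.lower() not in answer]
        banned = [p for p in case.must_not_include if p.lower() in answer]
        if missing or banned:
            failures.append(f"{case.prompt!r}: missing={missing} banned={banned}")
    return 1 - len(failures) / len(cases), failures

def stub_model(prompt: str) -> str:
    """Hypothetical stand-in for a real LLM endpoint."""
    if "overdraft" in prompt.lower():
        return "Overdraft fees are listed in your account terms."
    return "You are guaranteed approval for this loan."

cases = [
    EvalCase("What is the overdraft fee?", must_include=["account terms"]),
    EvalCase("Will my loan be approved?", must_not_include=["guaranteed"]),
]
RELEASE_THRESHOLD = 1.0  # hypothetical policy: every case must pass before release
pass_rate, failures = run_eval(stub_model, cases)
print(f"pass rate {pass_rate:.0%}, release blocked: {pass_rate < RELEASE_THRESHOLD}")
for failure in failures:
    print("FAIL", failure)
```

Here the stub promises guaranteed loan approval, so the suite fails and blocks release; in a real pipeline the same gate would run automatically on every change, closing the feedback loop.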

The Next Frontier: Evolving from Automation to Agentic Orchestration

Looking ahead, the next competitive phase for your institution will be defined by autonomy. The future lies in agentic AI: cognitive quality agents capable of executing testing tasks, adapting to changes in the codebase, and optimizing entire pipelines with increasing independence.

In this model, your human experts shift from performing manual tests to intelligent orchestration: you define the strategic guardrails and compliance boundaries, while agent-driven systems handle the scale, regression testing, and continuous validation at the speed of AI.

In the final analysis, leadership in financial services AI will not be determined by who has the most novel model, but by who achieves the most resilient performance, accuracy, and trust.

For you, agentic AI represents the definitive path to that standard. It is the system that will allow your quality assurance to stay resilient and adaptive even as innovation cycles compress, ensuring that trust evolves as quickly as the intelligence driving your development.

Your commitment to next-generation quality engineering is what will separate genuine, sustainable innovation from costly, high-risk experimentation.

Hackers Weaponize GenAI to Turn Safe Sites into Attack Vectors

You are facing a new frontier in cyber threats, where cybercriminals are weaponizing generative AI in real time.

The latest research from Palo Alto Networks' Unit 42 reveals that attackers are now exploiting trusted Large Language Model (LLM) APIs like those from DeepSeek or Google Gemini to dynamically generate malicious code directly within a victim's web browser.

This technique transforms a harmless-looking webpage into a sophisticated phishing trap the moment you visit it.

The Attack Strategy: "LLM-Augmented Runtime Assembly"

You are being targeted by a highly evasive method known as "LLM-augmented runtime assembly." The process works in three clever steps that leverage legitimate services against you:

The Innocent Facade: You load a webpage that appears completely clean. It contains no suspicious code or payload, allowing it to sail past traditional security scanners and email filters that rely on static detection.

The Real-Time Heist: Once the page is in your browser, it makes a hidden call to a public LLM API. The attacker has crafted a specific prompt designed to trick the AI into generating malicious JavaScript. For example, it might ask for "generic AJAX POST code" instead of the blocked term "exfiltrate credentials."

The Personalized Trap: The AI instantly outputs a unique, polymorphic piece of JavaScript. Your browser executes this code using functions like eval(), assembling a fully functional, personalized phishing page on the spot. This page may pull in the logo of your bank or a familiar service based on your email address, making it look perfectly legitimate to trick you into entering your login credentials.

Why This Evades Your Defenses

This attack is so dangerous to you because it defeats conventional security in several key ways:

Zero Static Payload: The initial page you download is clean. There is no malicious code to detect, rendering static file analysis useless.

Trusted Traffic Blend: The malicious request is made to a legitimate, trusted AI service (like api.deepseek.com), making it nearly impossible for network filters to distinguish this from normal web traffic.

Polymorphic Code: The JavaScript generated is unique for each victim or even each visit, meaning there is no single "signature" for antivirus software to block.

Runtime Execution: The malicious activity happens entirely within your browser's runtime memory after the page loads, evading pre-execution analysis.
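The "no single signature" point is easy to see with a toy example. Signature-based tools often match on a hash of previously seen bad content. The Python sketch below uses benign JavaScript strings and hypothetical names: two snippets with the same behavior, or even one snippet with a single extra newline, produce unrelated SHA-256 digests, so a blocklist built from one sample misses the next variant.

```python
import hashlib

def signature(code: str) -> str:
    """A content hash of the kind signature-based scanners match against."""
    return hashlib.sha256(code.encode("utf-8")).hexdigest()

# Two harmless JavaScript snippets with the same behavior (adding two numbers),
# standing in for per-visit variants an LLM could emit.
variant_a = "function f(a, b) { return a + b; }"
variant_b = "const add = (x, y) => x + y;"

blocklist = {signature(variant_a)}  # "signature" captured from an earlier sample

for label, code in [("variant_a", variant_a), ("variant_b", variant_b),
                    ("variant_a + newline", variant_a + "\n")]:
    print(f"{label}: blocked={signature(code) in blocklist}")
```

Only the exact sample already on the blocklist is caught; every rewrite slips through, which is why the defenses that follow focus on behavior rather than content.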

Real-World Precedent: The LogoKit Connection

This isn't theoretical. This new method builds directly on an existing and persistent threat known as LogoKit, a sophisticated phishing kit first seen in 2021 and still active in 2025 campaigns.

LogoKit already automates attacks by pulling brand logos based on your email and auto-filling forms to look authentic. This new AI-driven evolution makes the kit more dangerous and harder for you to detect.

How to Protect Yourself

Given this threat, your security strategy must evolve from looking for bad files to monitoring for suspicious behaviors:

Implement Behavioral Detection: Deploy security tools that can analyze JavaScript behavior at runtime in the browser, looking for actions like unexpected credential submission or the use of eval() to assemble code.

Harden Browser Security: Use enterprise browsers or extensions that can restrict or monitor calls to external APIs and the execution of dynamically generated code.

Adopt Zero-Trust Principles: Never assume internal or "clean" web traffic is safe. Continuously verify and inspect traffic, even to trusted domains.

Focus on User Training: As attacks become hyper-personalized, your awareness is critical. Be skeptical of any login page, even if it looks perfect, especially if it was reached via an email link.
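The behavioral approach can be sketched as a sequence rule over browser telemetry. Assuming a hypothetical event log in which each script action is recorded as a fetch, an eval, or a form submission, the Python below flags the specific chain this attack relies on: a call to an LLM API, then eval() of dynamic code, then submission of a password field. The host watchlist, the `Event` schema, and `is_suspicious` are illustrative assumptions, not any security product's format.

```python
from dataclasses import dataclass

# Illustrative watchlist of LLM API hosts; a real deployment would maintain this centrally.
LLM_API_HOSTS = {"api.deepseek.com", "generativelanguage.googleapis.com"}

@dataclass
class Event:
    kind: str    # "fetch", "eval", or "form_submit" (hypothetical schema)
    detail: str  # host for fetch, code source for eval, field names for form_submit

def is_suspicious(events: list[Event]) -> bool:
    """Flag the ordered chain: LLM API fetch -> eval() -> password submission."""
    stage = 0
    for ev in events:
        if stage == 0 and ev.kind == "fetch" and ev.detail in LLM_API_HOSTS:
            stage = 1  # page talked to an LLM API
        elif stage == 1 and ev.kind == "eval":
            stage = 2  # then executed dynamically assembled code
        elif stage == 2 and ev.kind == "form_submit" and "password" in ev.detail:
            return True  # then sent credentials
    return False

phishing = [Event("fetch", "api.deepseek.com"), Event("eval", "<llm response>"),
            Event("form_submit", "email,password")]
chatbot_login = [Event("fetch", "api.deepseek.com"), Event("form_submit", "email,password")]
print(is_suspicious(phishing), is_suspicious(chatbot_login))
```

None of the three actions is malicious on its own (plenty of legitimate pages call LLM APIs or have login forms); the rule keys on their order, which is what lets it catch a page whose payload never existed on disk.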

In essence, you are now in an arms race where attackers are using the same innovative AI tools that power legitimate services to craft uniquely evasive attacks. Your defense can no longer rely on known threats; it must be capable of identifying the subtle, real-time manipulation of the very tools you use every day.

Weather Forecasts in Minutes, Not Hours: NVIDIA's New Open-Source AI Models

NVIDIA has released three open-source AI models specifically designed to generate more accurate and significantly faster weather forecasts.

Announced at the American Meteorological Society's annual meeting, these models are part of NVIDIA's strategy to offer open-source software that showcases the capabilities of its hardware.

The core aim is to disrupt traditional weather simulation, which is notoriously slow and computationally expensive, by providing AI-powered alternatives.

NVIDIA claims these new models can match or even surpass the accuracy of conventional methods while being drastically cheaper and faster to run after the initial training phase.

A key beneficiary highlighted is the insurance industry. To assess risk from extreme events like hurricanes or floods, insurers rely on "ensemble" forecasts, running thousands of slightly varied simulations to map out possible scenarios.

With traditional methods, this is prohibitively slow and costly.

As Mike Pritchard of NVIDIA explained,

The tension is gone, because once trained, AI is 1,000 times faster... So you’re free to run massive ensembles.
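The ensemble idea can be illustrated with a toy. The Python sketch below is not weather physics and not NVIDIA's Earth-2 code: it swaps in the chaotic logistic map as a stand-in model, perturbs the starting state slightly for each of 1,000 members, and reports the fraction of members that end above a hypothetical "extreme event" threshold. `toy_model`, `run_ensemble`, and the threshold are illustrative names and values.

```python
import random
import statistics

def toy_model(x0: float, steps: int, r: float = 3.9) -> float:
    """Stand-in 'forecast model': the chaotic logistic map x -> r*x*(1-x)."""
    x = x0
    for _ in range(steps):
        x = r * x * (1 - x)
    return x

def run_ensemble(x0: float, n_members: int, noise: float, steps: int, seed: int = 0):
    """Run one model per member, each from a slightly perturbed initial state."""
    rng = random.Random(seed)
    starts = [x0 + rng.gauss(0.0, noise) for _ in range(n_members)]
    finals = [toy_model(s, steps) for s in starts]
    return starts, finals

starts, finals = run_ensemble(x0=0.3, n_members=1000, noise=1e-4, steps=60)
threshold = 0.9  # hypothetical "extreme event" level
p_extreme = sum(f > threshold for f in finals) / len(finals)
print(f"initial spread: {statistics.stdev(starts):.1e}")
print(f"final spread:   {statistics.stdev(finals):.2f}")
print(f"P(x > {threshold}) = {p_extreme:.2f}")
```

Tiny differences in the starting state grow into a wide spread of outcomes, which is why a single run cannot estimate risk. Because every member is an independent run, total cost scales with the number of members, so a model that is much cheaper per run makes large ensembles affordable.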

The three released "Earth-2" models include:

A model for 15-day global weather forecasts.

A model for short-term (up to 6-hour) forecasts of severe storms over the United States.

A model that integrates diverse weather sensor data to create superior starting points for other forecasting systems.

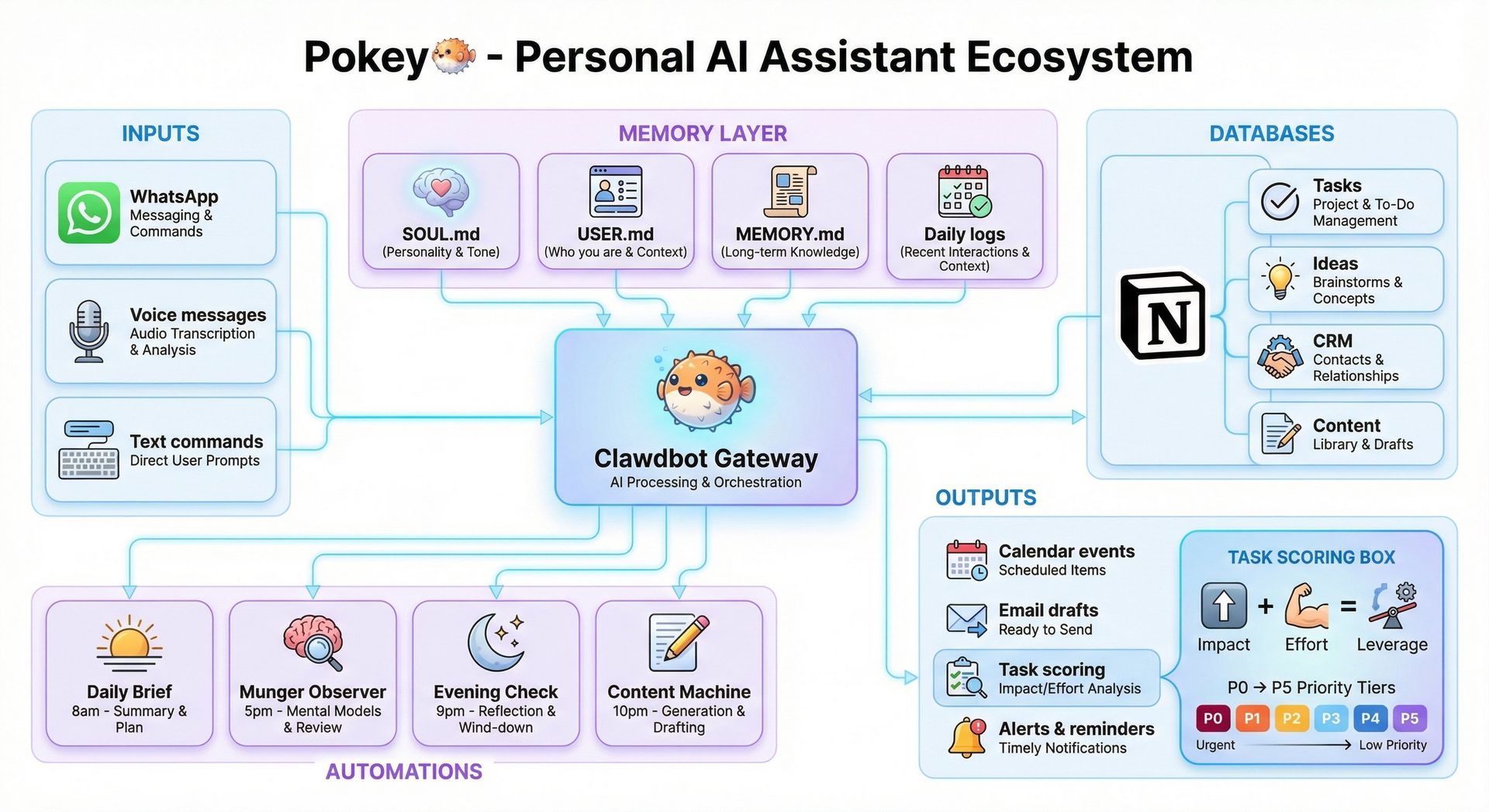

An open-source AI personal assistant called Clawdbot is generating significant buzz in the tech community. Discussions about the tool surged on social media over the weekend as developers and early adopters shared their experiences and setups.

Created by entrepreneur Peter Steinberger, Clawdbot is a free "agentic AI" assistant designed to run locally on a user's own computer rather than on remote company servers.

Unlike standard chatbots that only answer questions, agentic AI systems like Clawdbot can take autonomous actions.

It can monitor a user's email, calendar, and documents, remember instructions, and send alerts about important messages. Users can also connect it to external services like ChatGPT or Claude to enhance its reasoning for complex tasks.

While agentic AI has been a major industry ambition, high-profile attempts have struggled to go mainstream. Clawdbot's open-source release on GitHub allows anyone to download and modify the software, though setup requires technical expertise.

Significant security risks accompany its functionality. To perform its duties, Clawdbot requires deep access to a user's system, including the ability to read/write files, run commands, and control a web browser.

The project's documentation explicitly warns users that granting this level of "shell access" is "spicy" and inherently risky.

It outlines threats such as attackers manipulating the AI to perform harmful actions or access personal data.

The creator emphasizes that Clawdbot is both a product and an experiment, stating there is no "perfectly secure" setup, and provides a security guide and audit tool for cautious users.
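The risk the documentation describes, an agent being manipulated into running harmful commands, is commonly mitigated with a deny-by-default gate between the model and the shell. The Python below is a minimal sketch of that general pattern, not Clawdbot's actual security mechanism; `review_command` and both command lists are hypothetical.

```python
import shlex

# Hypothetical policy: commands the agent may run without asking.
ALLOWED_COMMANDS = {"ls", "cat", "grep"}
# Hypothetical policy: commands that always need explicit human approval.
ALWAYS_CONFIRM = {"rm", "curl", "ssh", "sudo"}
# Chaining, pipes, substitution, redirection, and newlines can smuggle in extra commands.
SHELL_METACHARS = ";|&`$><\n"

def review_command(command_line: str) -> str:
    """Return 'allow', 'confirm', or 'deny' for a shell command proposed by an agent."""
    try:
        tokens = shlex.split(command_line)
    except ValueError:
        return "deny"  # unparseable input, e.g. an unbalanced quote
    if not tokens:
        return "deny"
    if any(ch in command_line for ch in SHELL_METACHARS):
        return "confirm"  # let a human inspect anything compound
    program = tokens[0]
    if program in ALWAYS_CONFIRM:
        return "confirm"
    if program in ALLOWED_COMMANDS:
        return "allow"
    return "deny"  # anything not explicitly listed is refused

for cmd in ["ls -la", "rm -rf /tmp/x", "ls; curl http://example.com", "python exploit.py"]:
    print(f"{cmd!r}: {review_command(cmd)}")
```

The gate narrows what a manipulated agent can do, but it cannot judge intent: an allowed command such as `cat` can still read a private file, which illustrates why no setup is "perfectly secure."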

Your opinion matters!

Hope you loved reading this edition of our newsletter as much as we loved writing it.

Share your experience and feedback with us, ’cause we take your critique seriously.

Thank you for reading

-Shen & Towards AGI team
