What Goldman’s AI Deployment Reveals About the Future of Banking

Reasoning Embedded in Infrastructure

Here is what’s new in the AI world.

AI news: Goldman Sachs Embeds Claude for Accounting & Compliance

Hot Tea: Agentic AI Targets $450B in Pharma Marketing

Open AI: Meta Patents AI to Simulate Users After Death

Open AI: Mark Cuban: AI Wealth Will Be Built in Integration, Not Infrastructure

Open AI: Google’s WebMCP forces you to rethink your infrastructure

Goldman Sachs Deploys Claude-Based Agents to Automate Accounting and Compliance Workflows

Goldman Sachs has moved beyond experimentation and embedded Anthropic’s Claude into production-grade financial workflows, specifically trade accounting and client onboarding, two domains that are structured in theory but exception-heavy in practice.

Over the past six months, Anthropic engineers have worked alongside Goldman’s internal teams to co-develop autonomous agents that ingest transactional records, reconcile ledgers, parse compliance documents, and generate audit-ready outputs.

At a systems level, these agents operate across heterogeneous data environments.

In trade accounting, Claude-based workflows ingest transactional records from multiple internal systems, normalize and reconcile entries across ledgers, identify breaks between counterparties, and apply accounting treatments based on predefined policy hierarchies.
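Below is a minimal sketch of the deterministic part of that pipeline: normalizing records from two sources onto a common schema and flagging breaks. It is an illustration only, not Goldman’s implementation. The field names, the one-cent tolerance, and the break categories are all assumptions. In the setup described here, the flagged breaks are the items a Claude-based agent would then reason over.

```python
from dataclasses import dataclass
from decimal import Decimal

@dataclass(frozen=True)
class Entry:
    trade_id: str
    counterparty: str
    amount: Decimal
    currency: str

def normalize(raw: dict) -> Entry:
    """Map a raw record onto a common schema (hypothetical field names)."""
    return Entry(
        trade_id=str(raw["trade_id"]).strip().upper(),
        counterparty=str(raw["counterparty"]).strip().upper(),
        # Route through str() so ints and floats become exact decimals, then round to cents.
        amount=Decimal(str(raw["amount"])).quantize(Decimal("0.01")),
        currency=str(raw["currency"]).strip().upper(),
    )

def reconcile(ledger_a: list[dict], ledger_b: list[dict],
              tolerance: Decimal = Decimal("0.01")) -> list[tuple[str, str]]:
    """Return (trade_id, break_type) pairs for every trade that does not match."""
    a = {e.trade_id: e for e in map(normalize, ledger_a)}
    b = {e.trade_id: e for e in map(normalize, ledger_b)}
    breaks = []
    for tid in sorted(a.keys() | b.keys()):
        left, right = a.get(tid), b.get(tid)
        if left is None:
            breaks.append((tid, "missing_in_a"))
        elif right is None:
            breaks.append((tid, "missing_in_b"))
        elif left.currency != right.currency:
            breaks.append((tid, "currency_mismatch"))
        elif abs(left.amount - right.amount) > tolerance:
            breaks.append((tid, "amount_mismatch"))
        elif left.counterparty != right.counterparty:
            breaks.append((tid, "counterparty_mismatch"))
    return breaks

ledger_a = [
    {"trade_id": "t1", "counterparty": "acme", "amount": 100, "currency": "usd"},
    {"trade_id": "t2", "counterparty": "acme", "amount": 50, "currency": "usd"},
]
ledger_b = [
    {"trade_id": "T1 ", "counterparty": "ACME", "amount": "100.00", "currency": "USD"},
    {"trade_id": "t2", "counterparty": "acme", "amount": 55, "currency": "usd"},
    {"trade_id": "t3", "counterparty": "globex", "amount": 10, "currency": "eur"},
]
print(reconcile(ledger_a, ledger_b))  # [('T2', 'amount_mismatch'), ('T3', 'missing_in_a')]
```

Note that the formatting differences on T1 (case, whitespace, `"100.00"` vs `100`) are absorbed by normalization. Only genuine disagreements surface as breaks.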

Unlike deterministic reconciliation engines that depend on static rule trees, these Claude-based agents introduce multistep reasoning.

They evaluate ambiguous metadata, inconsistent timestamps, or partial counterparty records and produce structured rationales for resolution or escalation. The output is not free-form text; it consists of constrained, policy-aligned artifacts suitable for downstream financial controls.
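One way to keep model output "constrained" in this sense is to accept only responses that parse into a fixed schema and cite a known policy, and to convert everything else into an escalation. The sketch below assumes a JSON response format. The decision values, required fields, and policy identifiers are hypothetical.

```python
import json

ALLOWED_DECISIONS = {"auto_resolve", "escalate"}
KNOWN_POLICIES = {"POL-FX-01", "POL-TS-02"}  # hypothetical policy identifiers
REQUIRED_FIELDS = {"trade_id", "decision", "policy_ref", "rationale"}

def validate_resolution(raw_output: str) -> dict:
    """Accept a model response only if it matches the expected, policy-aligned shape.

    Anything malformed becomes an escalation instead of flowing into downstream controls.
    """
    try:
        data = json.loads(raw_output)
    except json.JSONDecodeError:
        return {"decision": "escalate", "reason": "unparseable_output"}
    if not isinstance(data, dict) or not REQUIRED_FIELDS.issubset(data):
        return {"decision": "escalate", "reason": "missing_fields"}
    if not isinstance(data["decision"], str) or data["decision"] not in ALLOWED_DECISIONS:
        return {"decision": "escalate", "reason": "unknown_decision"}
    if not isinstance(data["policy_ref"], str) or data["policy_ref"] not in KNOWN_POLICIES:
        return {"decision": "escalate", "reason": "unknown_policy"}
    if not isinstance(data["rationale"], str) or not data["rationale"].strip():
        return {"decision": "escalate", "reason": "empty_rationale"}
    return data

good = ('{"trade_id": "T2", "decision": "auto_resolve", "policy_ref": "POL-TS-02", '
        '"rationale": "Timestamp skew under one second; same economic trade."}')
print(validate_resolution(good)["decision"])                # auto_resolve
print(validate_resolution("Looks fine to me!")["reason"])   # unparseable_output
```

The design choice here is fail-closed. A response that cannot be verified is never silently dropped or auto-applied. Instead, it is routed to a human queue.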

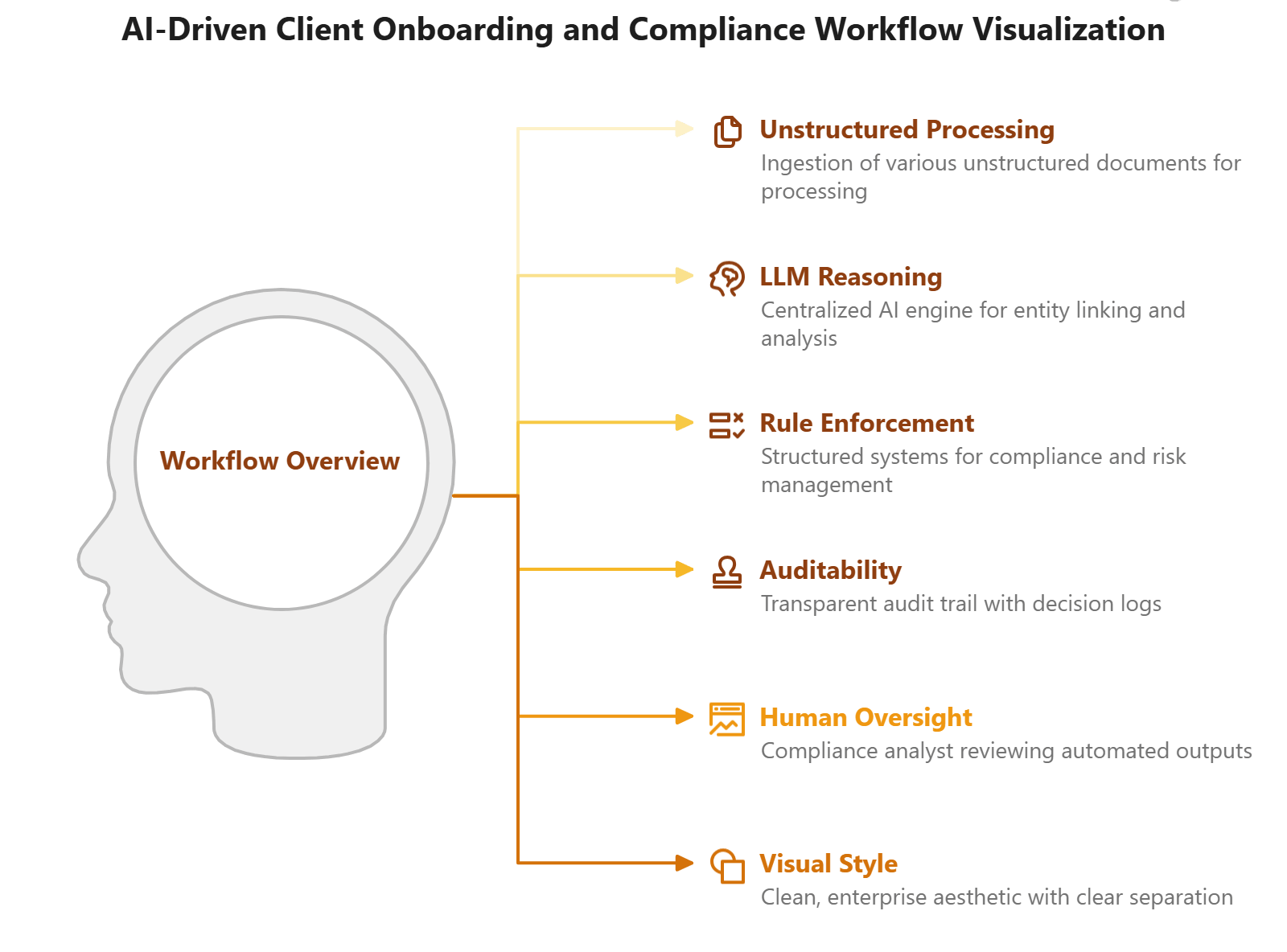

In client onboarding and compliance, the architecture becomes more complex.

The agents parse unstructured documents, including corporate filings, beneficial ownership disclosures, and identification records, while simultaneously cross-referencing structured datasets such as sanctions lists, AML flags, and internal risk scoring models.

The system synthesizes findings into traceable compliance summaries, maintaining reasoning chains to preserve auditability.

This resembles a hybrid stack: LLM-based reasoning layered atop deterministic rule enforcement, API-driven validations, and domain-specific constraints.
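You can picture this layering as a gate. Deterministic checks run first, and their verdict cannot be overridden. The model is consulted only afterwards, to write a narrative summary, and each step leaves an entry in a trace. This is a simplified illustration under stated assumptions: `summarize` stands in for an LLM call, the sanctions set is assumed to be lowercased, and exact-string matching is a placeholder for the fuzzy name matching that real screening uses.

```python
from dataclasses import dataclass, field
from typing import Callable

@dataclass
class ScreeningResult:
    client: str
    status: str = "pending_review"   # "blocked" or "pending_review"
    trace: list[str] = field(default_factory=list)

def screen_client(name: str, owners: list[str], sanctions: set[str],
                  summarize: Callable[[str, list[str]], str]) -> ScreeningResult:
    """Deterministic rules run first and are authoritative; the model only summarizes."""
    result = ScreeningResult(client=name)
    parties = [name, *owners]
    # Exact, case-insensitive matching is a placeholder for real fuzzy name screening.
    hits = [p for p in parties if p.strip().lower() in sanctions]
    result.trace.append(f"sanctions_check: {len(hits)} hit(s) {hits}")
    if hits:
        result.status = "blocked"  # a rule hit cannot be overridden by model output
        return result
    # Only after the hard checks pass is the LLM asked for a narrative summary.
    result.trace.append(f"llm_summary: {summarize(name, owners)}")
    return result  # stays "pending_review": a human or downstream control still signs off
```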

The significance is not the base model. It is the orchestration layer.

Claude operates as a stateful agent within bounded task environments: sequencing actions, invoking internal tools, persisting context across long document sets, and adhering to financial governance standards.
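A harness that does three things can approximate a bounded task environment: it executes only allowlisted tools, caps the number of steps, and records every action in a persistent context. In this hypothetical sketch, the `plan` list stands in for the action sequence a model would propose. Nothing here reflects Anthropic’s or Goldman’s actual tooling.

```python
from typing import Any, Callable

class BoundedAgent:
    """Hypothetical harness: allowlisted tools only, a step budget, and persistent context."""

    def __init__(self, tools: dict[str, Callable[..., Any]], max_steps: int = 5) -> None:
        self.tools = tools
        self.max_steps = max_steps
        self.context: list[dict] = []  # survives across run() calls on the same agent

    def run(self, plan: list[tuple[str, dict]]) -> list[dict]:
        """Execute proposed (tool, args) steps; refused steps still count toward the budget."""
        for step, (tool_name, args) in enumerate(plan):
            if step >= self.max_steps:
                self.context.append({"event": "halted", "reason": "step_budget_exceeded"})
                break
            if tool_name not in self.tools:
                self.context.append({"event": "refused", "tool": tool_name})
                continue
            output = self.tools[tool_name](**args)
            self.context.append({"event": "called", "tool": tool_name,
                                 "args": args, "output": output})
        return self.context

agent = BoundedAgent({"lookup_trade": lambda trade_id: {"trade_id": trade_id, "status": "open"}},
                     max_steps=2)
log = agent.run([("lookup_trade", {"trade_id": "T1"}),
                 ("wire_funds", {"amount": 1_000_000}),   # not allowlisted, so refused
                 ("lookup_trade", {"trade_id": "T2"})])   # over budget, so halted
print([e["event"] for e in log])  # ['called', 'refused', 'halted']
```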

In other words, Goldman is not deploying a copilot; it is embedding reasoning systems inside financial control infrastructure.

Early internal indicators reportedly show a 30% reduction in onboarding timelines, with exception queues in trade accounting projected to drop by as much as 80%.

But the real takeaway is this: AI is now capable of automating the resolution of ambiguity inside regulated workflows.

For you, the implication is technical, not philosophical.

Inside your organization, there are exception-heavy workflows where deterministic systems stall and humans step in to resolve ambiguity.

Those intervention layers are not edge cases; they are structural friction points.

If reasoning agents can be embedded with guardrails, controlled tool access, and audit constraints, those workflows become candidates for redesign rather than incremental optimization.
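For the audit constraint, one common pattern is a hash-chained, append-only log. Each record commits to the hash of the record before it, so any after-the-fact edit becomes detectable. The sketch below is a minimal illustration under that assumption. The record fields are hypothetical, and a production system would also need durable storage, access controls, and an external anchor so that truncating the tail of the log is detectable too.

```python
import hashlib
import json

GENESIS = "0" * 64

def _digest(body: dict) -> str:
    # Canonical JSON (sorted keys) so the same record always hashes the same way.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()

class AuditLog:
    """Append-only log in which each record commits to the hash of the previous one."""

    def __init__(self) -> None:
        self.records: list[dict] = []

    def append(self, actor: str, action: str, detail: dict) -> str:
        prev = self.records[-1]["hash"] if self.records else GENESIS
        # Round-trip through JSON to store an independent copy of the caller's data.
        body = {"actor": actor, "action": action,
                "detail": json.loads(json.dumps(detail)), "prev": prev}
        record = {**body, "hash": _digest(body)}
        self.records.append(record)
        return record["hash"]

    def verify(self) -> bool:
        """Recompute every hash; editing or reordering records, or deleting one mid-chain, fails."""
        prev = GENESIS
        for rec in self.records:
            body = {k: rec[k] for k in ("actor", "action", "detail", "prev")}
            if rec["prev"] != prev or rec["hash"] != _digest(body):
                return False
            prev = rec["hash"]
        return True
```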

The advantage will not accrue to those experimenting with chat interfaces at the periphery.

It will accrue to those who re-architect operational pipelines around stateful AI agents capable of handling both deterministic logic and contextual variance within production systems.

Agentic AI Enters Pharma Marketing: A $450B Autonomous Execution Opportunity

Agentic AI is no longer confined to conversational copilots.

It is becoming a distributed execution layer inside pharmaceutical commercial operations, and you are likely sitting on the exact fragmentation that makes this shift economically decisive.

Industry projections estimate up to $450 billion in global value by 2028, but that upside does not come from better content generation.

It comes from autonomous orchestration across disconnected systems that already exist in your stack.

Your commercial infrastructure is inherently heterogeneous.

CRM platforms like Veeva, syndicated claims datasets, event management systems, medical content repositories, and compliance tracking tools all operate on distinct schemas, access controls, and refresh cycles.

Extracting cross-system insight has historically required manual SQL pulls, ad hoc dashboards, or custom ETL pipelines that are brittle and slow to adapt.

Agentic AI introduces a reasoning layer on top of that fragmentation.

Instead of building a new pipeline for every analytical question, an agent decomposes a commercial objective into structured sub-tasks.

If prescription share declines after competitor congress exposure, the agent can query prescribing deltas from claims data, cross-reference attendance logs, retrieve historical CRM interactions, evaluate engagement metrics, and synthesize the results into an intelligence brief with a prioritized outreach plan.
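You can model the decomposition in that example as a small dependency graph and execute it in topological order. This sketch rests on assumptions: the task names, HCP identifiers, the -5% threshold, and the stubbed data sources are hypothetical stand-ins for claims, attendance-log, and CRM queries. It uses Python’s standard `graphlib` module (Python 3.9+).

```python
from graphlib import TopologicalSorter
from typing import Any, Callable

# Each task: (names of tasks it depends on, function of the results gathered so far).
Task = tuple[set[str], Callable[[dict[str, Any]], Any]]

def run_plan(plan: dict[str, Task]) -> dict[str, Any]:
    """Execute sub-tasks so that every task runs after all of its dependencies."""
    graph = {name: deps for name, (deps, _) in plan.items()}
    results: dict[str, Any] = {}
    for name in TopologicalSorter(graph).static_order():
        _, fn = plan[name]
        results[name] = fn(results)
    return results

# Hypothetical plan for "prescription share fell after a competitor's congress".
# The three source tasks are stubs standing in for claims, attendance-log, and CRM queries.
plan: dict[str, Task] = {
    "rx_deltas": (set(), lambda r: {"hcp_17": -0.12, "hcp_42": -0.03}),
    "congress_attendance": (set(), lambda r: {"hcp_17"}),
    "crm_touches": (set(), lambda r: {"hcp_17": 1, "hcp_42": 4}),
    "at_risk": ({"rx_deltas", "congress_attendance"},
                lambda r: sorted(h for h, d in r["rx_deltas"].items()
                                 if d < -0.05 and h in r["congress_attendance"])),
    "brief": ({"at_risk", "crm_touches"},
              lambda r: [f"Prioritize {h}: share down, attended congress, "
                         f"{r['crm_touches'][h]} recent CRM touch(es)" for h in r["at_risk"]]),
}

print(run_plan(plan)["brief"])
```

Because the graph is explicit, adding a new analytical question means adding a node rather than building another pipeline.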

For you, the benefit is operational compression.

What previously required analysts coordinating across data engineering, marketing ops, and compliance can be executed as task-directed reasoning with state persistence across steps, so decision latency drops.

Campaign adjustments also become continuous rather than quarterly, and your commercial organization shifts from reactive reporting to adaptive execution.

This architecture is inherently multi-agent.

A retrieval agent handles controlled CRM and claims access.

A compliance agent validates messaging against regulatory guardrails.

A planning agent decomposes strategy into sequenced actions.

A monitoring agent ingests downstream engagement signals and updates HCP state representations in near real time.

Engagement outcomes feed back into the system, refining subsequent recommendations.

That closed loop is what transitions marketing from periodic campaign blasts to signal-driven orchestration.
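The closed loop can be sketched with four plain functions standing in for the agents:

- **Retrieval** picks the least-engaged HCPs.
- **Planning** drafts outreach actions.
- **Compliance** filters those actions against banned terms.
- **Monitoring** folds new engagement signals back into the HCP state using an exponential moving average.

Every name, score, and rule here is hypothetical, and the sketch is not AgentsX’s API.

```python
from dataclasses import dataclass, field

@dataclass
class HCPState:
    engagement: dict[str, float] = field(default_factory=dict)  # hcp_id -> score in [0, 1]

def retrieval_agent(state: HCPState) -> list[str]:
    """Stand-in for governed CRM/claims access: HCPs ordered from least to most engaged."""
    return sorted(state.engagement, key=lambda h: state.engagement[h])

def planning_agent(hcps: list[str], budget: int) -> list[dict]:
    """Turn the top candidates into sequenced outreach actions."""
    return [{"hcp": h, "message": f"Share updated trial data with {h}"} for h in hcps[:budget]]

def compliance_agent(actions: list[dict], banned_terms: set[str]) -> list[dict]:
    """Drop any action whose message contains a term the (hypothetical) guardrails prohibit."""
    return [a for a in actions if not any(t in a["message"].lower() for t in banned_terms)]

def monitoring_agent(state: HCPState, signals: dict[str, float], alpha: float = 0.5) -> None:
    """Fold observed engagement into the state with an exponential moving average."""
    for hcp, observed in signals.items():
        prior = state.engagement.get(hcp, 0.0)
        state.engagement[hcp] = (1 - alpha) * prior + alpha * observed

def run_cycle(state: HCPState, signals: dict[str, float], budget: int = 2) -> list[dict]:
    """One loop iteration: plan and vet actions, then update state from new signals."""
    candidates = retrieval_agent(state)
    actions = compliance_agent(planning_agent(candidates, budget), {"guaranteed", "cure"})
    monitoring_agent(state, signals)  # signals observed after the previous outreach
    return actions
```

Each agent reads and writes only the shared state or the output of the previous stage. That boundary is what lets one agent be replaced without touching the others.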

This is precisely the type of architecture AgentsX was designed to operationalize at scale.

Rather than forcing a single monolithic model to manage retrieval, compliance, planning, and monitoring simultaneously, AgentsX enables you to deploy a coordinated multi-agent system under a unified orchestration layer.

Each agent is specialized and domain-aligned, while routing mechanisms dynamically assign sub-tasks based on context and expertise.
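Context-based routing can be illustrated with an ordered list of predicate and handler pairs. The first matching rule wins, and tasks that match no rule are surfaced rather than guessed at. This is a generic sketch, not AgentsX’s routing mechanism. The route names and task fields are assumptions, and real routers may use a classifier or a model in place of hand-written predicates.

```python
from typing import Any, Callable

Task = dict[str, Any]

class Router:
    """Ordered routing rules: the first predicate that matches a task picks the agent."""

    def __init__(self) -> None:
        self.routes: list[tuple[str, Callable[[Task], bool], Callable[[Task], Any]]] = []

    def register(self, agent: str, matches: Callable[[Task], bool],
                 handler: Callable[[Task], Any]) -> None:
        self.routes.append((agent, matches, handler))

    def dispatch(self, task: Task) -> tuple[str, Any]:
        for agent, matches, handler in self.routes:
            if matches(task):
                return agent, handler(task)
        return "unrouted", None  # surface to a human rather than guess

router = Router()
router.register("retrieval",
                lambda t: t["kind"] == "data_pull" and t.get("source") in {"crm", "claims"},
                lambda t: f"pulled {t['source']} data")
router.register("compliance", lambda t: t["kind"] == "message_review",
                lambda t: "rejected" if "guarantee" in t["text"].lower() else "approved")

print(router.dispatch({"kind": "data_pull", "source": "crm"}))  # ('retrieval', 'pulled crm data')
```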

The result for you is not incremental productivity; it is structural leverage.

You gain a system capable of reasoning across fragmented data environments, operating within regulatory guardrails, and continuously adapting commercial execution without rebuilding pipelines for every new objective.

Meta Patents AI System to Simulate Users Post-Mortem

Meta has been granted a patent describing a system that uses large language models to simulate a user’s behavior on its social platforms, even after death.

The filing outlines an architecture in which an LLM is trained on “user-specific data,” including historical posts, comments, likes, direct messages, and engagement patterns, to replicate behavioral signatures across Meta’s ecosystem.

The system could autonomously generate responses, interact with new content, and potentially simulate conversational exchanges via text, audio, or even video.

This is not a generic chatbot layered onto a dormant account. The patent suggests a fine-tuned behavioral model constructed from longitudinal activity data.

That would likely involve embedding user interaction history into vector representations, modeling stylistic markers (syntax, sentiment distribution, emoji usage, response latency patterns), and conditioning outputs on contextual signals from new incoming content.
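Several of those stylistic markers are simple to compute from message history. The sketch below assumes a hypothetical message schema with `text` and `reply_seconds` fields. It uses a rough emoji regex rather than a complete Unicode emoji definition, and it leaves out sentiment distribution, because that would require a separate sentiment model.

```python
import re
from statistics import median

# Rough emoji matcher over common pictograph ranges; not a complete Unicode emoji definition.
EMOJI = re.compile("[\U0001F300-\U0001FAFF\u2600-\u27BF]")

def style_profile(messages: list[dict]) -> dict:
    """Summarize simple stylistic markers from a (hypothetical) message-history schema.

    Each message is {"text": str, "reply_seconds": float or None}.
    """
    if not messages:
        return {}
    texts = [m["text"] for m in messages]
    latencies = [m["reply_seconds"] for m in messages if m.get("reply_seconds") is not None]
    n = len(texts)
    return {
        "avg_words": sum(len(t.split()) for t in texts) / n,
        "emoji_per_message": sum(len(EMOJI.findall(t)) for t in texts) / n,
        "exclamations_per_message": sum(t.count("!") for t in texts) / n,
        "median_reply_seconds": median(latencies) if latencies else None,
    }
```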

The system would perform behavioral style transfer at account scale, maintaining continuity between past activity and future autonomous interactions.

The patent also references simulation during temporary absence, not solely death.

That dual framing is significant. A system designed to preserve engagement during digital inactivity, whether due to hiatus or mortality, would require integration with Meta’s recommendation engines, messaging infrastructure, and content ranking systems.

It would need guardrails around impersonation, disclosure labeling, and user consent mechanisms to avoid misleading other users. The training loop would likely incorporate reinforcement learning from engagement metrics, optimizing for authenticity proxies such as response similarity and interaction retention.
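Response similarity, one of the authenticity proxies mentioned above, can be approximated lexically: take the best cosine similarity between a generated reply and any of the user’s past replies. This bag-of-words version is an assumption made for illustration. A production system would more plausibly compare learned embeddings.

```python
import math
import re
from collections import Counter

def _vector(text: str) -> Counter:
    """Bag-of-words term counts (lowercased, letters and apostrophes only)."""
    return Counter(re.findall(r"[a-z']+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def similarity_to_history(candidate: str, history: list[str]) -> float:
    """Highest lexical similarity between a generated reply and any past reply."""
    cand = _vector(candidate)
    return max((cosine(cand, _vector(h)) for h in history), default=0.0)
```

As an optimization target, a proxy like this rewards parroting past phrasing. That is one reason the guardrails described above matter as much as fidelity does.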

This positions the technology within a broader category sometimes called “grief tech” or “digital afterlife systems,” where AI models are trained on a deceased person’s digital footprint to create interactive simulations.

Microsoft has previously patented similar chatbot systems, and startups like Replika and You, Only Virtual have explored adjacent implementations.

What distinguishes Meta’s approach is platform-scale data depth: years of multimodal behavioral signals across Facebook, Instagram, WhatsApp, and Messenger.

However, the deployment complexity extends beyond model fidelity.

Post-mortem simulation intersects with data ownership, consent frameworks, right-to-be-forgotten statutes, and jurisdiction-specific privacy law. A system autonomously generating content in a deceased person’s voice would require explicit pre-death authorization protocols, identity verification layers, and post-mortem governance structures, none of which are trivial at a global scale.
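The authorization and identity-verification requirements can be expressed as a gate that every generation request must pass, with anything published labeled as simulated. This is a hypothetical sketch. The record fields, scope names, and label text are assumptions, and the code encodes none of the jurisdiction-specific rules a real deployment would need.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class ConsentRecord:
    user_id: str
    scopes: frozenset[str]      # e.g. {"text_replies"}; hypothetical scope names
    identity_verified: bool     # consent was given by the verified account holder
    revoked: bool = False       # e.g. withdrawn by the user or an authorized executor

DISCLOSURE = "[AI-generated simulation] "  # hypothetical label text

def may_generate(record: Optional[ConsentRecord], scope: str) -> tuple[bool, str]:
    """Every generation request must pass these checks, in order."""
    if record is None:
        return False, "no_prior_authorization"
    if record.revoked:
        return False, "authorization_revoked"
    if not record.identity_verified:
        return False, "identity_not_verified"
    if scope not in record.scopes:
        return False, "scope_not_granted"
    return True, "ok"

def publish(record: Optional[ConsentRecord], scope: str, text: str) -> Optional[str]:
    """Return labeled text if allowed, otherwise None (nothing is posted)."""
    allowed, _ = may_generate(record, scope)
    return DISCLOSURE + text if allowed else None
```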

Meta has publicly stated it has no plans to implement the patented system. But the technical direction is clear: generative models are now capable of reconstructing persistent digital personas from historical behavioral data.

Mark Cuban: The Real AI Wealth Is in Integration, Not Infrastructure

Mark Cuban’s thesis cuts against the current wave of model-building and agent-infrastructure hype: the next durable AI fortunes will not be made by training trillion-parameter systems, but by integrating existing models into the operational core of real businesses.

In his framing, the competitive advantage lies in the “last mile,” where APIs meet accounting systems, CRMs, underwriting workflows, logistics platforms, and messy spreadsheets that actually run companies.

Technically, this is a shift from model innovation to systems engineering. Foundation models are increasingly commoditized through APIs.

What remains scarce is the ability to map those models onto fragmented workflows, reconcile them with legacy databases, and embed them into decision loops with measurable ROI.

The AI integrator, in Cuban’s view, is less a researcher and more an applied architect, someone who can decompose a business bottleneck into automatable subtasks, layer retrieval and reasoning on top of proprietary data, and redesign the workflow so AI acts as a throughput multiplier rather than a novelty layer.

His focus on small-to-medium businesses (SMBs) is strategic.

Large enterprises already operate with structured IT governance, vendor contracts, and internal AI roadmaps. SMBs, by contrast, often run on manual processes, email chains, and disconnected SaaS tools.

That fragmentation is not a liability; it is opportunity surface area.

An integrator can deploy retrieval-augmented generation against internal documents, automate invoice reconciliation with LLM-based classification, or implement predictive analytics on transactional data without navigating multi-year procurement cycles.
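The retrieval step of retrieval-augmented generation can be sketched without any external services. This example swaps in simple TF-IDF keyword scoring, whereas production systems typically use embeddings. The document names, prompt wording, and `k` default are illustrative assumptions. The resulting prompt would then be sent to whichever model API the integrator uses.

```python
import math
import re
from collections import Counter

def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())

def retrieve(query: str, docs: dict[str, str], k: int = 2) -> list[str]:
    """Rank documents by summed TF-IDF weight of query terms (lexical stand-in for embeddings)."""
    tokenized = {name: Counter(tokenize(body)) for name, body in docs.items()}
    n = len(docs)

    def idf(term: str) -> float:
        df = sum(1 for counts in tokenized.values() if term in counts)
        return math.log((n + 1) / (df + 1)) + 1.0  # smoothed IDF

    q_terms = set(tokenize(query))
    scores = {name: sum(counts[t] * idf(t) for t in q_terms) for name, counts in tokenized.items()}
    ranked = sorted(scores, key=lambda name: scores[name], reverse=True)
    return [name for name in ranked[:k] if scores[name] > 0]

def build_prompt(query: str, docs: dict[str, str], k: int = 2) -> str:
    """Ground the question in the top-k retrieved internal documents."""
    context = "\n\n".join(f"[{name}]\n{docs[name]}" for name in retrieve(query, docs, k))
    return f"Answer using only the sources below.\n\n{context}\n\nQuestion: {query}"

docs = {
    "refunds.txt": "Refunds are issued within 14 days of an approved return.",
    "shipping.txt": "Orders ship within 2 business days.",
    "hours.txt": "The office is open 9 to 5.",
}
print(retrieve("how many days for refunds", docs, k=1))  # ['refunds.txt']
```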

Crucially, AI is not positioned as an autonomous replacement for domain expertise. It is a force multiplier.

A logistics operator with deep routing knowledge can use AI to simulate delivery optimizations.

A healthcare administrator can automate claims classification.

A reinsurance analyst can model risk exposure across historical loss data faster than a manual actuarial review allows.

The model does not create expertise; it amplifies it.

This is where sector-specific integration becomes decisive.

In insurance and reinsurance, for example, the value is not in building another large model, but in embedding AI directly into underwriting, claims triage, and risk modeling pipelines.

Platforms like Reinsured.AI exemplify this integration-first approach: applying AI to structured risk data, historical claims records, and treaty documentation to enhance pricing accuracy, automate document interpretation, and improve portfolio-level exposure analysis.

The advantage comes from aligning models with domain-specific data structures and regulatory constraints, not from reinventing the model layer itself.

Cuban’s broader point is pragmatic. The infrastructure race will produce winners, but the distributed wealth will emerge from those who can operationalize AI inside real businesses.

Google’s WebMCP Signals the Shift to a Programmable, Agent-Executable Web

AI agents are beginning to execute transactions (booking flights, completing purchases, submitting support tickets) rather than merely generating responses. Google’s introduction of WebMCP signals a shift in how the open web must adapt to this behavioral change.

Most agents now interact with your website through brittle simulation: DOM parsing, button detection, sequential form filling, and heuristic navigation.

This “screen-scraping cognition” is error-prone, latency-heavy, and operationally fragile. Minor UI changes break flows. Ambiguous labels cause failed transactions, and agents guess intent from presentation rather than interfacing with structured capability.

WebMCP proposes a different abstraction layer.

Instead of forcing agents to infer actions from layout, you explicitly expose structured tools, machine-readable definitions of permissible operations such as “search product,” “initiate checkout,” “book flight,” or “submit ticket.”

This creates a deterministic action surface layered on top of your existing stack.

This reduces ambiguity by shifting interaction from visual inference to schema-defined execution.

You define parameters, validation constraints, authentication scopes, and expected outputs.
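The contract idea can be sketched in a language-neutral way. A tool declares its parameters, validation constraints, and required auth scope, and every agent call is checked against that declaration before the handler runs. This is not WebMCP’s browser API, which is not reproduced here. The `Tool` and `Param` classes and the `catalog:read` scope are hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Callable

@dataclass
class Param:
    kind: type
    required: bool = True
    check: Callable[[Any], bool] = lambda value: True  # extra validation constraint

@dataclass
class Tool:
    name: str
    description: str
    params: dict[str, Param]
    scope: str                          # auth scope the calling agent must hold
    handler: Callable[..., Any]

    def invoke(self, args: dict[str, Any], caller_scopes: set[str]) -> dict[str, Any]:
        """Validate an agent's call against the declared contract before executing it."""
        if self.scope not in caller_scopes:
            return {"ok": False, "error": f"missing scope {self.scope}"}
        unknown = sorted(set(args) - set(self.params))
        if unknown:
            return {"ok": False, "error": f"unknown params {unknown}"}
        for pname, spec in self.params.items():
            if pname not in args:
                if spec.required:
                    return {"ok": False, "error": f"missing param {pname}"}
                continue
            if not isinstance(args[pname], spec.kind) or not spec.check(args[pname]):
                return {"ok": False, "error": f"invalid param {pname}"}
        return {"ok": True, "result": self.handler(**args)}

search_product = Tool(
    name="search_product",
    description="Search the catalog by keyword.",
    params={
        "query": Param(str, check=lambda q: 0 < len(q.strip()) <= 200),
        "max_results": Param(int, required=False, check=lambda n: 1 <= n <= 50),
    },
    scope="catalog:read",
    handler=lambda query, max_results=10: {"query": query, "items": [], "limit": max_results},
)
print(search_product.invoke({"query": "running shoes"}, {"catalog:read"})["ok"])  # True
```

A single validated call replaces the agent's click-and-type traversal, and a malformed request fails with a specific error rather than a silently broken flow.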

Agents operate against that contract, which increases precision and lowers failure rates. Latency improves as well, because multi-step UI traversal collapses into single structured calls.

This is not a UX enhancement; it is a distribution architecture decision for you.

As AI agents begin mediating user intent at scale, a growing share of your traffic will originate from autonomous systems rather than human browsers.

Thus, the critical question is whether your infrastructure exposes structured, machine-actionable endpoints or whether agents must reverse-engineer your frontend, introducing failure points you do not control.

The measurable upside is operational: higher task completion rates across your flows, fewer transaction failures in your checkout or booking systems, cleaner data capture inside your stack, and more reliable attribution because actions are invoked through defined interfaces rather than brittle UI simulation.

The ecosystem is fragmenting across protocols such as Anthropic’s Model Context Protocol (MCP), emerging frameworks from Amazon, and now WebMCP. Interoperability will directly affect your integration costs.

Thus, you should treat agent compatibility as core infrastructure.

Define which of your capabilities are exposed, under what constraints, and with what validation logic.

If you operationalize structured agent interfaces now, you position your systems to capture agent-mediated demand with higher reliability and lower execution risk.

Journey Towards AGI

Research and advisory firm guiding on the journey to Artificial General Intelligence


Your opinion matters!

We hope you enjoyed reading this newsletter as much as we enjoyed writing it.

Share your experience and feedback with us, because we take your critique seriously.


Thank you for reading

-Shen & Towards AGI team