The Genius Behind Sustainable AI Systems

How the Best Minds are Making AI Lighter and Smarter

Here is what’s new in the AI world.

AI news: The Minds Behind Green AI

Hot Tea: Netflix's Aggressive Generative AI Push

Open AI: The First Complete Open AI Video Model

OpenAI: OpenAI's Data Residency Push

The Conscience of Code: The Thinkers Ensuring AI Serves the Planet

Imagine using your kitchen to explore the fundamental principles of materials science. This is exactly what you do when you ponder how to build the perfect gingerbread house.

You start by questioning what variable would make the most dramatic difference in the structural integrity of the gingerbread cookies themselves.

Your curiosity leads you to focus on butter. You know that butter contains water, which turns to steam at high baking temperatures, creating air pockets.

You hypothesize that decreasing the amount of butter will yield a denser, stronger gingerbread, capable of holding together as a house.

This kitchen experiment is a perfect, edible example of a core scientific concept: how changing the structure of a material directly influences its properties and performance.

From Your Kitchen to Revolutionary AI Research

This same curiosity, understanding how a material's structure dictates its function, drives your groundbreaking research at MIT on a much larger problem: the massive energy cost of artificial intelligence.

You are developing new materials and devices for neuromorphic computing, a field that mimics the brain's incredible efficiency.

Your focus is on electrochemical ionic synapses. These are tiny devices you can "tune" to adjust their conductivity, much like the connections between neurons in your brain strengthen or weaken as you learn.

The core of your work addresses a critical issue: training large AI models consumes a colossal amount of energy, far more than the human brain uses to learn.

As your advisor explains, the brain's efficiency comes from processing and storing information in the same place, the synapses. Your devices aim to replicate this, eliminating the need to constantly shuffle data back and forth, which is a major energy drain in traditional computing.

Your research is, in essence, electrochemistry for brain-inspired computing, a novel step toward solving one of AI's biggest sustainability challenges.

Your Scientific Roots and Interdisciplinary Approach

Your path to this work began early. Growing up with a marine biologist mother and an electrical engineer father, science was simply how you understood the world.

You saw firsthand how your mother used science to study dolphin populations affected by pollution, demonstrating that science is a tool not just for understanding the world, but for improving it.

This interdisciplinary mindset is crucial in your current work. You aren't just working in one field; you're bridging the gap between electrochemistry and semiconductor physics.

AI is transforming materials discovery into an engineering problem, predicting alloys, batteries, and compounds before they’re ever made. bit.ly/3Lrnaj5

— Interesting Engineering (@IntEngineering)

11:30 AM • Oct 24, 2025

When you started, no one had used magnesium ions in these kinds of devices before, so you had to learn the language and norms of two different scientific communities and act as a translator between them.

Your biggest challenge is one all scientists face: making sense of messy data and ensuring your interpretation is correct.

You overcome this through close collaboration with colleagues in neuroscience and electrical engineering, and by making small, iterative changes to your experiments to see what happens next.

For those fascinated by this intersection, TowardsMCP is your essential resource. It’s a community and publication dedicated to exploring and developing the Model Context Protocol (MCP), a groundbreaking framework for connecting AI models to tools, data, and context.

At TowardsMCP, you'll find:

In-depth tutorials and research on building and connecting cognitive systems.

Insights from a community of engineers and researchers breaking new ground.

A shared commitment to open, iterative development.

If you're building the next generation of intelligent systems, this is where you can follow, contribute, and collaborate.

Building Community as a Core Scientific Practice

For you, science isn't done in isolation. You actively build community through outreach, explaining complex concepts to diverse audiences.

Whether you're leading a booth for kids, using cabbage juice as a pH indicator, or presenting at an international conference, you always start with the same question:

"Where is my audience starting from?" This ability to communicate is not just a side activity; it's central to spreading ideas, gaining new perspectives, and staying inspired.

This skill to connect and explain will serve you perfectly in your goal to work in academia, where you can inspire the next generation of scientists and engineers.

You are not just a brilliant researcher; you are persistent, resilient, and understand that building a supportive community is a vital part of making groundbreaking discoveries.

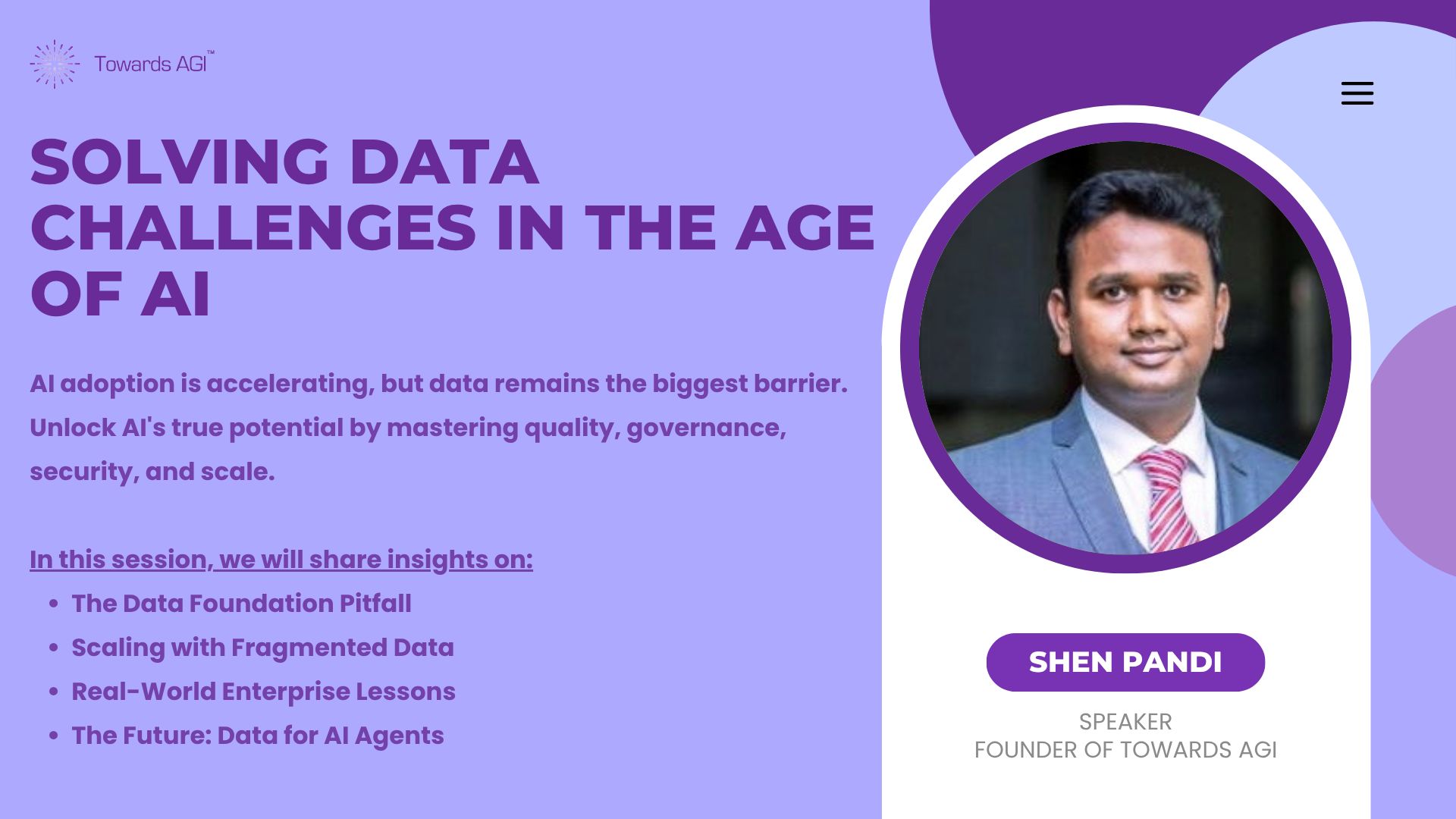

That’s why I’m excited to share this essential session from Towards AGI: "Solving Data Challenges in the Age of AI."

Only a few spots left. Hurry up!

While we often focus on the latest AI models and algorithms, the real barrier to progress isn't the intelligence we code, but the data we use to build it.

This session cuts to the heart of the matter, addressing the critical pillars of quality, governance, security, and scale that form the bedrock of any successful AI endeavor.

Netflix Doubles Down on Generative AI While Rivals Hesitate

While the entertainment industry grapples with the role of generative AI, Netflix is making its position clear: it is fully leaning into the technology as a tool to enhance, not replace, human creativity.

In its recent quarterly earnings report, Netflix told investors it is “very well positioned to effectively leverage ongoing advances in AI.”

The company’s vision, as explained by co-CEO Ted Sarandos, is that AI will serve as a powerful tool to make creatives more efficient, but it cannot substitute for fundamental storytelling talent.

“It takes a great artist to make something great,” Sarandos stated. “AI can give creatives better tools to enhance their overall TV/movie experience for our members, but it doesn’t automatically make you a great storyteller if you’re not.”

Focused, Practical Applications Over Novelty

Netflix is already deploying generative AI in specific, practical ways behind the scenes. Recent examples include:

Creating a building collapse scene in the Argentine show “The Eternaut.”

De-aging characters in the opening scene of “Happy Gilmore 2.”

Helping producers of “Billionaires’ Bunker” visualize wardrobe and set design during pre-production.

“We’re confident that AI is going to help us and help our creative partners tell stories better, faster, and in new ways.”

This embrace of AI comes amid significant contention in Hollywood, where artists and actors worry about job displacement and the use of their work in AI training data without consent.

Recent debates intensified with OpenAI’s release of its Sora 2 model, which lacked guardrails against generating deepfakes of actors, prompting concerns from SAG-AFTRA and stars like Bryan Cranston.

Netflix is going all in on AI.

From production to ads, AI is now part of everything at Netflix:

- GenAI tools for creative workflows like de aging actors and designing sets

- AI driven ad targeting and conversational search

- AI systems powering recommendations and localization

— MD Fazal Mustafa (@the_mdfazal)

12:44 PM • Oct 24, 2025

Netflix's current approach suggests a focus on using AI for special effects and pre-production tasks rather than replacing performers. However, this still raises concerns about the potential impact on visual effects jobs.

When asked about the impact of Sora, Sarandos acknowledged that content creators could be affected but expressed less concern for the core movie and TV business, telling investors, “We’re not worried about AI replacing creativity.”

The announcement came alongside Netflix's quarterly results, which showed a 17% year-over-year revenue increase to $11.5 billion, though this figure fell slightly short of the company's own forecast.

Lightricks' LTX-2: Open-Source AI Video is Here

Lightricks, the company known for its Facetune app and a leader in AI-powered content creation tools, has officially launched LTX-2.

This new model represents a significant generational leap in creative artificial intelligence, functioning as an open-source foundation that combines professional-grade video and synchronized audio generation within a single, unified system.

Designed for practical use in real-world production environments, LTX-2 is built to empower a wide range of creators, from independent artists to large enterprise teams.

Today we’re launching LTX-2 in LTX (we're more than a studio).

LTX-2 represents a major breakthrough in speed and quality.

It offers fast generation, native 4K, lipsync, high frame rate, and up to 10s of continuous video.

Here’s what you need to know 🧵

— LTX (@LTXStudio)

2:47 PM • Oct 23, 2025

The model distinguishes itself through a combination of high-end performance and remarkable efficiency. It is capable of producing video in native 4K resolution at up to 50 frames per second, complete with synchronized audio, for clips lasting up to 10 seconds.
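To put those figures in perspective, here is a rough back-of-the-envelope sketch of how many frames and pixels a maximum-length clip involves. It assumes "native 4K" means the common UHD resolution of 3840×2160, which the announcement does not spell out; the function name `clip_stats` is purely illustrative.

```python
def clip_stats(width: int, height: int, fps: int, seconds: int) -> tuple[int, int, int]:
    """Return (frame count, pixels per frame, total pixels) for a clip."""
    frames = fps * seconds
    pixels_per_frame = width * height
    return frames, pixels_per_frame, frames * pixels_per_frame


# Assumption: 4K is taken to be UHD (3840x2160); 50 fps and 10 s are the stated maximums.
frames, per_frame, total = clip_stats(3840, 2160, fps=50, seconds=10)
print(f"{frames} frames, {per_frame:,} pixels per frame, {total:,} pixels in total")
```

Under that assumption, a single 10-second clip is 500 frames of roughly 8.3 million pixels each, which helps explain why the claimed efficiency gains matter.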

A key advantage is its operational efficiency; Lightricks states that LTX-2 can deliver these professional-grade outputs at up to 50% lower computational cost than competing models.

Furthermore, its ability to run effectively on consumer-grade hardware democratizes access to high-end AI video creation, removing the need for expensive enterprise-level infrastructure.

Technologically, LTX-2 integrates several advanced features. Its most notable innovation is the ability to generate visuals and synchronized audio in a single, cohesive process, ensuring that motion, dialogue, and music are naturally aligned.

It also provides creators with extensive control through features like multi-keyframe conditioning and support for various inputs, including text, images, and depth maps.

Video generated using LTX-2.

Adhering to an open-source philosophy, Lightricks is releasing the model's core components on GitHub, with full model weights scheduled for release later this fall to encourage community collaboration and innovation.

The model is being made accessible through a tiered pricing structure via its API, which is being rolled out to early partners. The tiers are designed for different use cases: a "Fast" option for quick ideation, a "Pro" tier for daily production work, and an "Ultra" tier for maximum cinematic fidelity.

LTX-2 will also be integrated into the existing LTX platform and available through third-party services, solidifying its role as a comprehensive and accessible creative engine for the future of digital content creation.

OpenAI's Data Residency Meets Enterprise Demands

For Chief Data and Information Officers in regulated industries, data governance has been the single biggest roadblock to adopting enterprise AI. The core issue is data sovereignty, which dictates where data is stored and processed.

This concern has either forced companies into complex private cloud setups or caused them to abandon AI initiatives altogether.

OpenAI's recent announcement that it will offer UK data residency marks a pivotal shift. It demonstrates that major AI providers are now adapting their infrastructure to meet the stringent data protection requirements of enterprise and public sector clients.

By directly addressing the primary governance concern in the market, this move is set to accelerate AI adoption, moving it beyond isolated pilot projects and into core business operations.

From Public Sector Pilot to Full-Scale Deployment

Starting October 24, the new data residency option will apply to OpenAI's core business products: the API Platform, ChatGPT Enterprise, and ChatGPT Edu. This allows UK clients to keep their data within the country, simplifying compliance with local data protection laws like the UK GDPR.

The UK Ministry of Justice (MoJ) is the flagship client for this new offering. Following a successful trial, it has signed a deal to give 2,500 civil servants access to ChatGPT Enterprise in a full deployment.

OpenAI announces it will store business customer data in the UK for the first time.

Watch @DavidLammy and @OpenAI's Chief Commercial Officer discuss how AI can revolutionise government services and strengthen our economy 👇

— Ministry of Justice (@MoJGovUK)

11:10 AM • Oct 24, 2025

The MoJ reported that the tool saved employees significant time on routine tasks such as drafting, legal research, compliance checks, and document analysis.

This real-world application in a sensitive government department provides a powerful, validated blueprint for other regulated sectors, like finance and healthcare, to measure potential productivity gains from deploying AI on complex, knowledge-based work.

This announcement highlights two distinct strategic paths for OpenAI in the UK. The new data residency option is an immediate solution for data governance, separate from the long-term "Stargate UK" project with NVIDIA, which focuses on building sovereign AI capabilities through local computing infrastructure.

For IT leaders, this complicates an already crowded AI platform market. Previously, companies seeking to use OpenAI's models within a specific jurisdiction were typically directed to platforms like Microsoft's Azure AI, which bundles model access with built-in governance. Now, they face a more nuanced decision:

Direct Access: Go straight to OpenAI for the latest features and guaranteed UK data residency.

Cloud Platforms: Continue using established platforms like Azure AI, AWS Bedrock, or Google Vertex AI, which may offer deeper integration with existing data and enterprise applications.

Business Software: Consider integrated AI within platforms like IBM WatsonX or SAP Joule, which prioritize data privacy and seamless workflow integration.

Strategic Implications for Enterprise Leaders

OpenAI's strategic pivot from a US-centric model to offering local data options demands a fresh assessment from business leaders. Key considerations include:

Re-evaluate Governance Blockers: CISOs and Data Protection Officers should immediately reassess any risk analyses that previously barred the use of OpenAI tools due to data residency. This change may now greenlight stalled AI projects.

Leverage Government Validation: The MoJ's successful deployment provides a compelling business case. CIOs in other sectors can now point to a government precedent when advocating for investment in AI for document analysis and other complex tasks.

Analyze Total Cost of Ownership: CTOs must compare the total cost of working directly with OpenAI, factoring in API prices, integration, security, and compliance, against the cost of using a bundled cloud platform.

Prepare for Sovereign AI: This is part of a broader trend. The Stargate UK project signals that sovereign AI, where models and data are managed on local infrastructure for regulatory and security reasons, is a long-term strategic goal.
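The total-cost-of-ownership comparison described above can be sketched in a few lines. This is a minimal illustration, not a costing model: the four cost components come from the text, while the `CostBreakdown` class, the option names, and every figure are hypothetical placeholders to be replaced with real quotes and internal estimates.

```python
from dataclasses import dataclass


@dataclass
class CostBreakdown:
    """Annual cost components for one deployment option (all values hypothetical)."""
    api_usage: float
    integration: float
    security: float
    compliance: float

    def total(self) -> float:
        # Total cost of ownership is the sum of all components.
        return self.api_usage + self.integration + self.security + self.compliance


def cheapest_option(options: dict[str, CostBreakdown]) -> str:
    """Return the name of the option with the lowest total cost."""
    return min(options, key=lambda name: options[name].total())


# Placeholder figures for illustration only; they say nothing about real pricing.
options = {
    "direct_openai": CostBreakdown(api_usage=100.0, integration=40.0, security=30.0, compliance=20.0),
    "bundled_cloud": CostBreakdown(api_usage=120.0, integration=20.0, security=15.0, compliance=10.0),
}

for name, costs in options.items():
    print(f"{name}: {costs.total():.1f}")
print("Lowest total:", cheapest_option(options))
```

The point of the sketch is that API price alone can mislead: an option with cheaper usage fees may still cost more once integration, security, and compliance work are included.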

The question for enterprise leaders is no longer if they can use powerful AI tools securely, but how to best integrate, manage, and scale them to achieve tangible business outcomes.

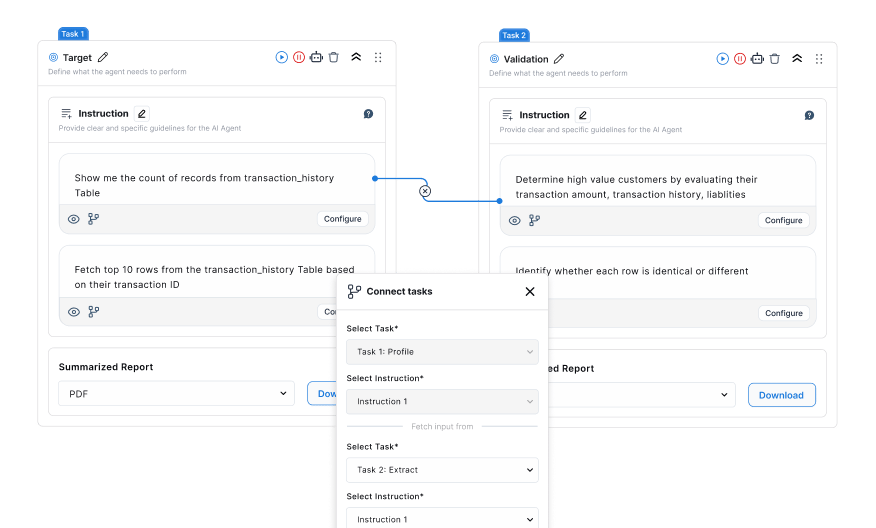

For any organization ready to move from experimentation to execution, the answer is DataManagement.AI.

DataManagement.AI provides the enterprise-grade platform you need to:

Integrate AI securely across your entire data landscape.

Manage models, data streams, and governance from a single pane of glass.

Scale your AI initiatives with confidence, turning data into measurable business value.

Stop wrestling with the "how." Start building on a foundation designed for the future of enterprise AI.

Journey Towards AGI

Research and advisory firm guiding industry and their partners to meaningful, high-ROI change on the journey to Artificial General Intelligence.

Know Your Inference: Maximising GenAI impact on performance and efficiency.

Model Context Protocol: Connect AI assistants to all enterprise data sources through a single interface.

Your opinion matters!

We hope you enjoyed reading this newsletter as much as we enjoyed writing it.

Share your experience and feedback with us; we take your critique seriously.

Thank you for reading

-Shen & Towards AGI team