Outgunned By Big Banks On AI? Here's Your Asymmetric Strategy

No AI Team? No Problem.

Here is what’s new in the AI world.

AI news: The GenAI Playbook for Community and Regional Banks

Hot Tea: Is the Creator-GenAI Party Ending in 2026?

Open AI: The Open-Source Path to AGI?

OpenAI: A Stressful Job for $555K

Gen Matrix Q4 Launch: Honouring the Top 100 in Generative AI

Is Your Bank Losing the AI Race? Here’s a Faster Path to Catch Up

You are likely aware that businesses, including banks, are racing to integrate AI and generative AI into their workflows.

Recent data shows 54% of financial institutions have implemented or are implementing GenAI, but a closer look reveals a disparity: while about three-quarters of large banks are doing so, only around 40% of smaller banks (under $10B in assets) are.

This matters because community and regional banks are vital to their communities. To stay competitive with larger rivals, your institution needs to embrace GenAI and level the playing field.

What You Need to Know:

Implementing GenAI doesn’t have to be daunting or prohibitively expensive.

The most impactful AI isn't flashy; it’s embedded in operations to make your employees and clients smarter and faster.

Starting with small, focused AI experiments can unlock significant results.

Tackle Your Misconceptions and Start Small

Many smaller banks face common pitfalls. Some rush in without a governance plan, while others hesitate to start because they fear getting it wrong or don't know where to begin.

You don’t need to jump straight to a grand, customer-facing application like a client chatbot. An "all or nothing" mindset can stall your progress. Instead, your first step shouldn't be a major undertaking.

Start by asking:

Which departments and processes in your bank could benefit from AI?

How can your employees begin familiarising themselves with the technology?

Where can you streamline repetitive tasks?

Which teams could test AI for research and report insights?

Don't Hesitate to Use Existing Solutions

Your employees are probably already using platforms like Google and Microsoft, which have heavily invested in GenAI tools. Simply encouraging your team to experiment with these readily available solutions is a great, low-cost first step.

Having an AI-familiar workforce that can streamline labour-intensive processes is at least as impactful as launching a flashy customer tool, and far less expensive.

An Example: Using AI to Solve Problems

Consider back-office tasks. At Grasshopper Bank, our lending team used GenAI to automate follow-ups on missing documents, reducing a 2-3 hour process per loan to 2-3 minutes.

We also use it in auto lending, where AI verifies driver’s license information (KYC details) over weekends when applications spike.

This speeds up the entire process, eliminates weekend holdups, and allows your team to handle a higher volume, all while maintaining human checkpoints for quality control.

How to Find the Right Balance of AI and Human

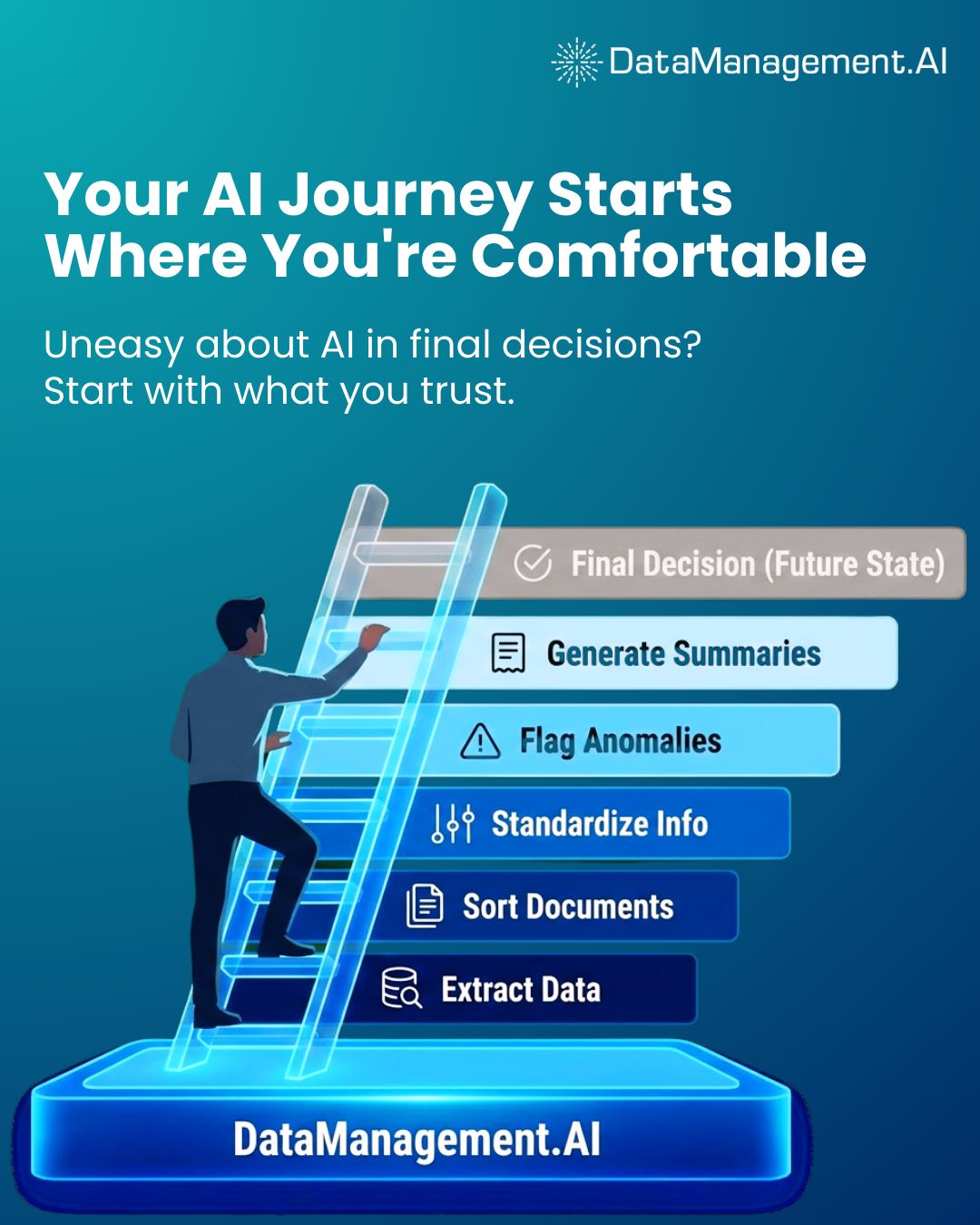

Be practical about comfort levels. If using AI in credit decisioning makes you uneasy, that shouldn't stop you from using it elsewhere. Find a comfortable level of involvement. For instance, use AI to collect and sort data for loan reviews rather than making the final decision.

Start with low-risk, repetitive tasks like extracting KYC/AML data from documents or standardizing vendor information. Experimenting here builds comfort with minimal risk.

Scaling Up Requires a Deliberate Process

Implementing GenAI requires constant feedback. Measure how long a task takes manually versus with automation. Significant time savings confirm your resources are well-spent and can spark ideas for new use cases.
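The manual-versus-automated comparison described above can be sketched in a few lines of Python. This is only an illustration: the function name `time_saved` is hypothetical, and the sample inputs are assumed midpoints of the ranges quoted in the lending example (2-3 hours and 2-3 minutes), not measured figures.

```python
def time_saved(manual_minutes: float, automated_minutes: float) -> dict:
    """Compare a manual task time with its automated time (both in minutes)."""
    if manual_minutes <= 0:
        raise ValueError("manual_minutes must be positive")
    saved = manual_minutes - automated_minutes
    return {
        "minutes_saved": saved,
        "percent_saved": round(100 * saved / manual_minutes, 1),
    }


# Assumed midpoints of the quoted ranges: 2.5 hours (150 minutes) vs 2.5 minutes.
result = time_saved(manual_minutes=150, automated_minutes=2.5)
print(result)
```

Tracking the same two numbers for every pilot gives you a consistent way to compare use cases before deciding which ones to scale.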

These feedback loops also help identify where AI isn't a good fit. For example, at Grasshopper, we chose not to use GenAI for final credit decisions because it isn't consistently deterministic: it might reach different conclusions in similar scenarios, whereas credit decisions require repeatable logic.
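One simple way to test for that kind of inconsistency is to run the same input through a decision step several times and check whether the answer ever changes. The sketch below is a minimal illustration under stated assumptions: `is_repeatable`, `rule_based`, and `inconsistent_stand_in` are hypothetical names, the 0.40 threshold is made up, and the stand-in simply alternates answers to mimic a non-deterministic model rather than calling a real one.

```python
from itertools import cycle
from typing import Callable


def is_repeatable(decide: Callable[[dict], str], application: dict, runs: int = 5) -> bool:
    """Return True if `decide` gives the same answer on every run for one input."""
    outcomes = {decide(application) for _ in range(runs)}
    return len(outcomes) == 1


def rule_based(application: dict) -> str:
    # Hypothetical fixed rule: identical input always yields an identical answer.
    return "approve" if application["debt_to_income"] <= 0.40 else "refer"


_flip = cycle(["approve", "refer"])


def inconsistent_stand_in(application: dict) -> str:
    # Stand-in for a non-deterministic model: alternates answers on identical input.
    return next(_flip)


sample = {"debt_to_income": 0.35}
rule_ok = is_repeatable(rule_based, sample)
stand_in_ok = is_repeatable(inconsistent_stand_in, sample)
print(rule_ok, stand_in_ok)
```

A check like this does not prove a process is sound, but a step that fails it is a poor candidate for decisions that must be explained and reproduced.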

Is the Creator-GenAI Party Ending in 2026?

For the past two years, the advertising industry has been captivated by the immense reach of the creator economy and the rapid development of generative AI. However, as we approach 2026, the initial excitement is fading, and a phase of accountability is setting in.

Historical patterns show that periods of intense media disruption eventually confront practical realities.

We witnessed this during the shift from traditional TV to the internet, which began as an unregulated "Wild West" until digital advertising standards were established. A similar moment of reckoning is now underway.

Investment in creator content and AI will not decrease, but it will soon face a non-negotiable new requirement: standardisation.

The Creator Reckoning

The unregulated landscape of creator-driven marketing is reaching a breaking point. Although ad spending in this area continues to grow, tighter economic conditions and industry shifts in 2026 will force brands to scrutinise every dollar.

Creator campaigns will no longer be exempt from rigorous return-on-investment analysis.

Brands will move beyond being satisfied with anecdotal success or surface-level metrics. Without standardised identification and metadata, they risk losing their investments in a measurement void.

By 2026, the market will rapidly transition from enthusiastic adoption to demanding mandatory accountability.

This shift is also crucial for creators. With significant brand money at stake, a creator's income depends on proving their impact. Without universal tracking standards, they cannot guarantee fair payment for the full value of their work.

The Spontaneity Paradox

A major industry misstep in 2026 would be attempting to measure creator content with old-fashioned ad tracking models.

Brands are not purchasing fixed ad slots; they are investing in a dynamic, organic ecosystem where creators film, edit, and post content in moments. This spontaneity is essential to the creator economy, but it creates a fundamental measurement challenge.

Creator content is inherently immediate and often impulsive, making it incompatible with traditional tracking. The solution requires standardisation at the very point of creation, regardless of the final format.

Effective tracking shouldn't hinder the creator's speed but should instead allow brands to identify and credit valuable spontaneous content the instant it goes live. Without this capability, the industry is operating blindly in a multi-billion-dollar ecosystem where its most impactful moments remain unmeasured.

The AI Regulatory Crossroads

At the same time, the industry is entering the age of the AI Verification Mandate. 2026 will be the year we formally address AI's role. However, the path to regulation became uncertain following a December 11 executive order.

The move to prioritise a "minimally burdensome" federal framework over existing state laws has created a risky vacuum. While legal battles unfold, businesses face a dilemma: wait for a potentially elusive federal standard or prepare for the strictest state regulations already enacted.

Given this ambiguity, brands cannot afford to halt their advertising initiatives. History suggests the strictest regulations often become the de facto national standard, as large brands cannot feasibly operate with different creative rules in every state.

Therefore, they must prepare for the most stringent scenario now.

In 2026, this means looking to two key state laws as the new baseline:

California’s AB 853: This act mandates transparency, requiring a "digital birth certificate" (metadata) that verifies an asset's origin. Without this proof of provenance, creative content may become unpublishable on major platforms unwilling to risk liability.

Colorado’s SB24-205: This law introduces an ethical layer, focusing on preventing "algorithmic discrimination." It imposes a rigorous "duty of care" on brands using AI to influence important decisions in areas like housing, credit, or employment ads.

While the federal-state conflict continues, brands will proceed with the clearest guidelines available. The regulatory message is currently confused, but one outcome is definite: if authority reverts to the states, AI's period of unrestricted use is over.

Whether judged by its origin (California) or its impact (Colorado), validation and disclosure are no longer optional future considerations; they are essential requirements for 2026.

The industry is undergoing disruption, but disruption without structure leads to chaos. The task for 2026 is to construct the layer of trust and verification these new technologies need to mature responsibly.

Standardisation is not about suppressing innovation. It is about ensuring that the "next big thing" does not fail due to a lack of oversight. Whether dealing with a creator's viral video or an AI-generated global campaign, the imperative is the same: verify, identify, and measure.

The era of an accountable, standardised digital ecosystem is beginning. It is time to move beyond unchecked spending and start building the infrastructure that the market of 2026 now demands.

The Open-Source Challenger: Zhipu AI Steps Up Its Bid for AI's Top Tier

Chinese AI company Zhipu AI has announced plans to intensify its work toward achieving artificial general intelligence (AGI) in the coming year, while maintaining its commitment to open-sourcing its AI models following its upcoming initial public offering.

During a Reddit AMA session, Zhipu AI researcher Zheng Qinkai stated that in 2026, the company will "contribute more substantially to the AGI journey." AGI, AI that can perform a wide variety of tasks at human-level ability, is a key objective for leading AI firms globally, including Chinese tech giant Alibaba, an investor in Zhipu.

The Beijing-based startup, which recently cleared a regulatory hearing for a listing on the Hong Kong Stock Exchange, also reassured the global developer community that it will continue releasing its model weights after going public.

Model weights are the learned parameters that define an AI model's capabilities, and sharing them is a standard practice in the open-source community.

Researcher Zeng Aoheng emphasised that Zhipu AI plans to keep contributing to open-source ecosystems, stating that the company has benefited from public technical work and aims to give back by sharing its own research and results.

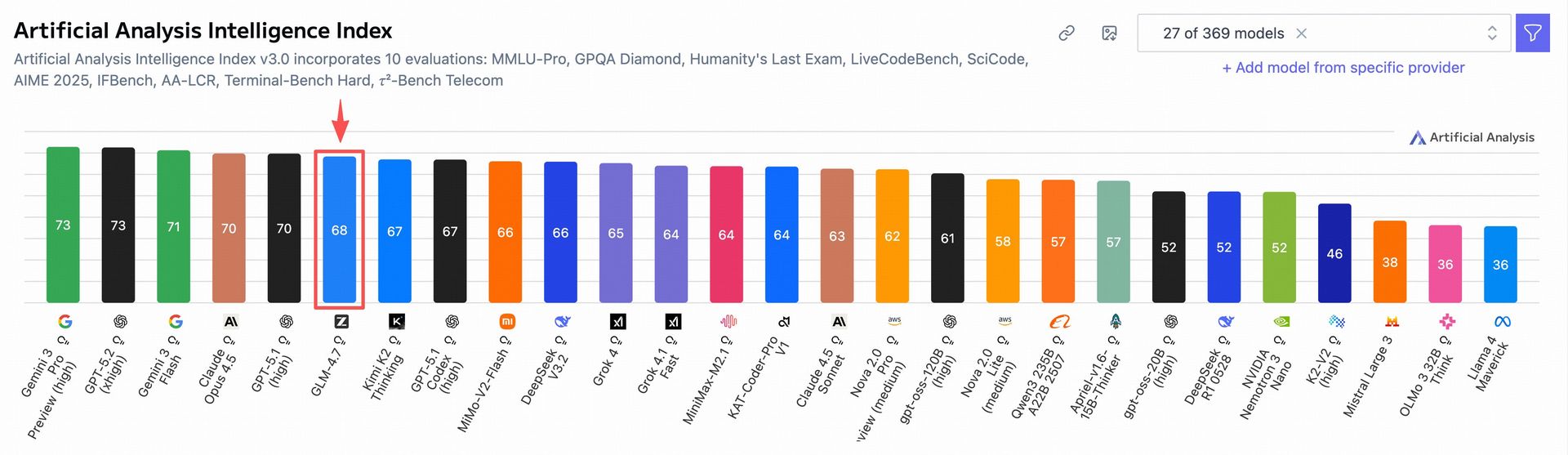

This outreach follows the release of Zhipu's latest flagship model, GLM-4.7, which was quickly met with a competing open model release from Shanghai-based rival MiniMax.

Both companies claim their new models have enhanced coding abilities that close the performance gap with U.S. leaders like Google and Anthropic.

Notably, Zhipu's GLM-4.7 recently reached a key benchmark milestone on the SWE-Bench coding evaluation, matching a score previously achieved by Anthropic's Claude model.

Zhipu is among several Chinese AI firms, including Moonshot AI and MiniMax, using platforms like Reddit to engage directly with international developers.

In the AMA, Zhipu researchers also shared that they are allocating more computing power to the pre-training phase of model development, noting it remains an effective way to boost AI performance.

The team indicated that its next major model will be named GLM-5 only when it represents a significant leap forward in capabilities.

Sam Altman's Warning Comes With a $555K Salary for AI's Hardest Job

OpenAI is advertising a high-stakes job for a “head of preparedness,” offering a salary of $555,000 per year, along with company equity.

The role’s daunting responsibilities include defending against advanced AI risks such as threats to mental health, cybersecurity, biological weapons, and the potential for AI systems to become self-training and turn against humanity.

CEO Sam Altman acknowledged the position will be highly stressful and that the candidate will need to “jump into the deep end” immediately.

The role focuses on evaluating and mitigating emerging AI threats, tracking dangerous frontier capabilities, and preparing for severe harms. Previous executives in similar positions have often had brief tenures.

The recruitment comes amid escalating warnings from AI leaders about the technology’s risks.

Microsoft AI CEO Mustafa Suleyman recently stated that anyone not “a little afraid” of AI isn’t paying attention, while Google DeepMind co-founder Demis Hassabis has warned of AIs potentially going “off the rails.”

National and international AI regulation remains minimal, a situation AI pioneer Yoshua Bengio has criticised as weaker than “sandwich” regulations, so companies like OpenAI are largely self-regulating. Altman noted the challenges of measuring AI capabilities and their potential for abuse in a landscape with little precedent.

The job listing follows reports of AI-enabled cyber-attacks, including one attributed to Chinese state actors using largely autonomous AI to hack targets.

OpenAI has also reported that its latest model is significantly more capable at hacking than earlier versions and expects this trend to continue.

Additionally, OpenAI faces lawsuits alleging harm caused by ChatGPT, including a case where a teenager died by suicide after alleged encouragement from the AI and another where a user with paranoid delusions committed murder and suicide.

An OpenAI spokesperson called the latter case “incredibly heartbreaking” and stated the company is improving ChatGPT’s training to better recognise signs of distress and direct users to real-world support.

Journey Towards AGI

A research and advisory firm guiding industry and its partners to meaningful, high-ROI change on the journey to Artificial General Intelligence.

Know Your Inference: Maximising GenAI impact on performance and efficiency.

Model Context Protocol: Connect AI assistants to all enterprise data sources through a single interface.

Your opinion matters!

We hope you enjoyed reading this newsletter as much as we enjoyed writing it.

Share your experience and feedback with us; we take your critique seriously.

Thank you for reading

-Shen & Towards AGI team