Is Your Job Safe?

UK Banks' £1.8bn GenAI Bet Comes With Job Transformation.

Here is what’s new in the AI world.

AI news: UK Banks Eye £1.8bn GenAI Spend

Tech Crux: Open-Source AI Gets a Voice

Open AI: Why AI and Open Source Need Each Other to Thrive

OpenAI: Musk's Legal Team Asks Judge to Shield Meta Communications

Towards MCP: Pioneering Secure Collaboration in the Age of AI & Privacy

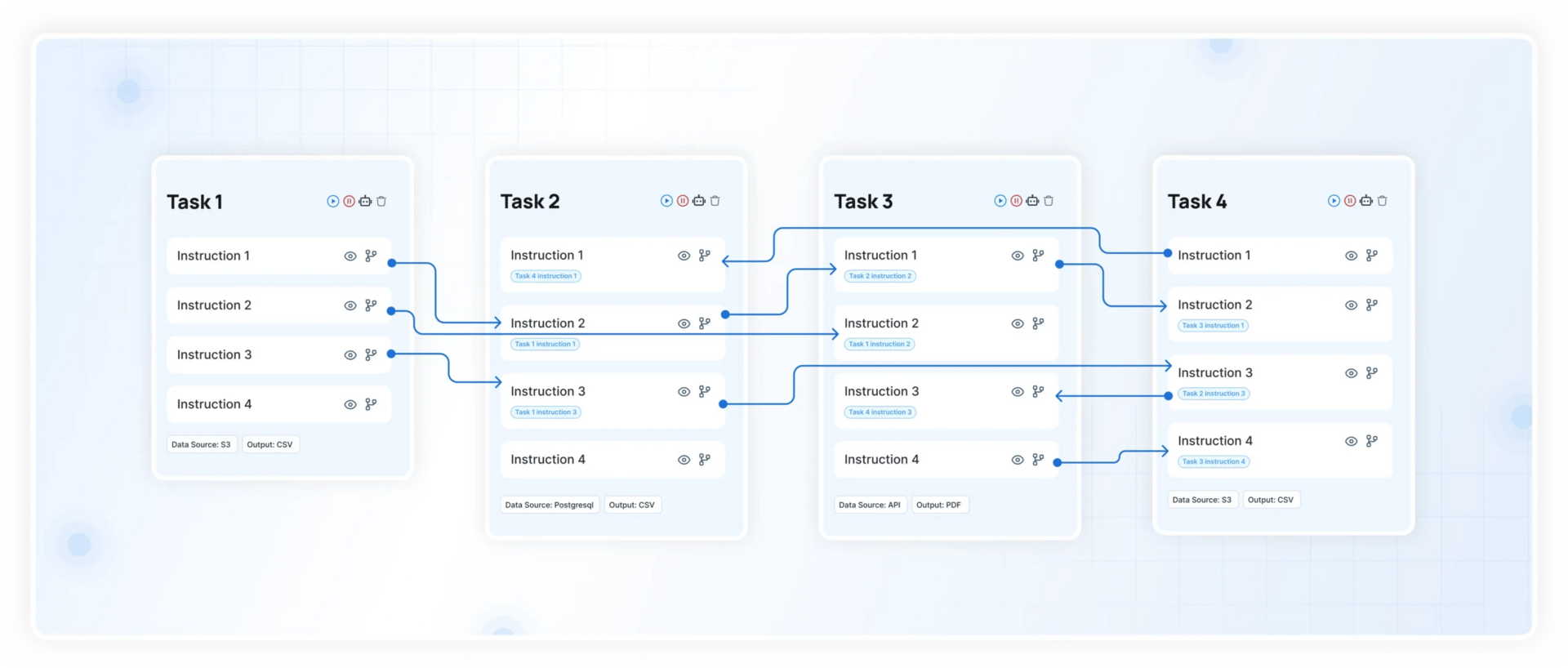

We are thrilled to announce our strategic partnership with Towards MCP, a leading Model Context Protocol platform that is transforming how businesses integrate and leverage AI.

This collaboration empowers organizations to seamlessly unlock actionable insights from their existing data ecosystems, making advanced intelligence accessible and practical for every enterprise.

UK Banking Sector Plans £1.8bn GenAI Spend, 27,000 Jobs to Be Impacted

UK banks are set to invest heavily in generative artificial intelligence, with spending projected to reach £1.8 billion (US$2.43 billion) by 2030, according to research from Zopa Bank and Juniper Research.

This strategic investment is aimed at boosting productivity, cutting operational costs, and adapting to rapidly changing customer expectations.

Key Efficiency and Labour Savings

Generative AI is expected to save 187 million hours of labour over the next five years.

82% of these efficiency gains will come from automating back-office and administrative tasks in areas like operations and compliance.

Cost savings from AI implementation are projected to match total investment by 2030, meaning the spend would fully pay for itself within the first deployment cycle (a 100% return on the investment).

Half of all cost reductions will come from streamlining back-office functions.
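To put the headline hours figure in proportion, the short calculation below breaks it into an average per-year saving and a back-office share. It is a back-of-envelope sketch that assumes the 187 million hours are spread evenly across the five years and that the 82% share applies to that same total. Neither assumption comes from the Zopa/Juniper research, and `hours_breakdown` is a hypothetical helper written for illustration.

```python
# Back-of-envelope arithmetic on the Zopa/Juniper projections quoted above.
# Assumption (not from the research): savings are spread evenly over five years.

TOTAL_HOURS_SAVED = 187_000_000   # hours of labour saved over five years
YEARS = 5
BACK_OFFICE_SHARE = 0.82          # share of efficiency gains from back-office automation


def hours_breakdown(total_hours: int, years: int, back_office_share: float) -> dict:
    """Return average annual savings plus the back-office and remaining portions."""
    return {
        "per_year": total_hours / years,
        "back_office": total_hours * back_office_share,
        "other": total_hours * (1 - back_office_share),
    }


result = hours_breakdown(TOTAL_HOURS_SAVED, YEARS, BACK_OFFICE_SHARE)
for label, hours in result.items():
    # Report each figure in millions of hours, to one decimal place.
    print(f"{label:>12}: {hours / 1e6:.1f} million hours")
```

On these assumptions, back-office automation accounts for roughly 153 million of the 187 million hours, or about 37 million hours a year across the sector on average.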

Workforce Impact and Transformation

An estimated 27,000 banking roles, 10% of the sector’s workforce, are expected to undergo significant changes by 2030.
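The two figures in that sentence also imply the rough size of the sector's workforce. The one-line check below treats the 10% share as exact, which is an assumption since published shares are usually rounded. `implied_workforce` is a hypothetical helper, not something from the research.

```python
# Implied UK banking workforce from the figures quoted above.
# Assumption: "10%" is treated as exact; the published share is likely rounded.

ROLES_AFFECTED = 27_000
SHARE_OF_WORKFORCE = 0.10


def implied_workforce(roles_affected: int, share: float) -> int:
    """Back out total headcount from an affected count and its share of the total."""
    if not 0 < share <= 1:
        raise ValueError("share must be in (0, 1]")
    return round(roles_affected / share)


print(implied_workforce(ROLES_AFFECTED, SHARE_OF_WORKFORCE))  # 270000
```

The same check works for any headline of the form "N roles, X% of the workforce".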

Traditional banks with large branch networks and legacy IT systems will experience the greatest disruption but also stand to gain the most from automation due to their reliance on manual processes.

Digital banks like Zopa, which already use AI extensively, will face less transition disruption.

Major banks like Bank of America, Citizens, and JPMorgan are actively deploying Gen AI tools for employee productivity, but nearly 70% of AI use cases don't have any reported outcomes or measurable ROI. buff.ly/9wkkAEO | @tearsheetco

— Jim Perry (@mi_jim)

12:35 PM • Aug 28, 2025

Leadership and Strategic Vision

Peter Donlon, Chief Technology Officer at Zopa, compared generative AI to foundational shifts like the internet or cloud computing:

At Zopa, we've been operationalising machine learning for over a decade, well before LLMs became mainstream. That depth of experience has shaped our belief that GenAI isn't a feature add-on, but a foundational capability.

Zopa has already rolled out enterprise-grade generative AI tools and OpenAI training programmes, including prompt writing and custom GPT development, to all employees.

Reskilling the Banking Workforce

Zopa has launched Jobs 2030, a five-year initiative aimed at reskilling 100,000 banking workers in AI-related disciplines.

The programme will begin with training for fintech engineers, analysts, and operations staff, later expanding to include a Gen AI Engineering Programme and a Zopa Coding Academy. Training will be tested internally before a broader industry rollout.

Digital vs. Traditional Banks

Nick Maynard, VP of Fintech Market Research at Juniper Research, noted:

The UK banking sector stands at a tipping point, with Gen AI set to reshape how banking fundamentally works. Digital-only brands like Zopa already have deep experience with AI and will be less impacted by this shift.

While digital banks are positioned to lead this transformation, traditional high-street banks face greater challenges due to complex legacy systems and higher levels of manual processing.

These institutions must balance AI adoption with regulatory compliance and system constraints.

👉 How are banks using Artificial Intelligence?

🔥 An incredible #Infographic dated 2017! Source: @Consultancy_uk

📌 #fintech #AI #govtech #finserv #ehealth #insurtech #banking #payments #GenerativeAI #GenAI #marketing #neobanks #web3 #ModelOps #DevOps #TensorFlow #Python

— Enrico Molinari #VivaTech2025 (@enricomolinari)

9:33 AM • Feb 26, 2024

Customer-Facing Innovation

Zopa has already integrated generative AI across multiple functions, including customer query handling, vulnerability detection, and autonomous coding agents.

The bank also plans to introduce an AI Voice Assistant to improve customer interactions.

Clare Gambardella, Chief Customer Officer at Zopa, emphasised the customer benefits:

Generative AI marks a once-in-a-generation shift, redefining the future of work and the skills that power it. With Gen AI, we have the opportunity to make the customer experience more intuitive, personalised, and intelligent than ever before.

This wave of AI adoption signals a broader transformation within UK banking, one that prioritises efficiency, customer personalisation, and large-scale workforce reskilling.

As financial institutions navigate this shift, platforms like DataManagement.AI are becoming essential, providing the intelligent data governance and integration tools needed to turn AI potential into tangible outcomes, all while keeping data secure, accessible, and actionable across the organization.

Join the industry leaders who are already achieving smarter insights, significant cost savings, and unprecedented efficiency with AI-powered data management.

Can Microsoft VibeVoice Replace Podcasters? Let’s Find Out

If you're exploring the future of AI-powered voice technology, Microsoft’s latest release, VibeVoice-1.5B, is something you’ll want to know about.

This isn’t just another text-to-speech tool; it’s an open-source model built to generate expressive, long-form, and multi-speaker audio under a flexible MIT license.

Whether you're a researcher, developer, or creator, this model opens new doors for synthetic voice applications, from audiobooks and podcasts to multilingual and singing synthesis.

What Makes This Model Stand Out?

Here’s what makes VibeVoice-1.5B a game-changer for you:

Extended Audio Generation: You can now create up to 90 minutes of continuous, natural-sounding speech in a single session, far beyond what most open TTS models offer.

Multi-Speaker Support: You can generate conversations with up to four distinct voices at once, complete with realistic turn-taking, ideal for dialogue-heavy content.

Cross-Lingual and Singing Ability: While optimized for English and Chinese, you can experiment with cross-lingual synthesis and even generate singing, a rare capability in open TTS.

Open and Free to Use: The model is released under an MIT license, so you’re free to use, modify, and even commercialize projects built with it.

Emotionally Expressive Output: You can produce speech with nuanced emotional tones, making it suitable for narrative and conversational applications.
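If you are planning a multi-speaker script, a quick pre-flight check against the two headline limits above (at most four distinct voices and roughly 90 minutes per session) can save a failed generation run. The sketch below is not part of VibeVoice. It assumes a plain-text script with one `Speaker N: text` turn per line and an average speaking rate of about 150 words per minute; treat that format, the rate, and the helper name `check_script` as illustrative assumptions rather than the model's documented input format or API.

```python
import re

# Hypothetical pre-flight check for a multi-speaker script; it is NOT part of VibeVoice.
# Assumptions: one turn per line written as "Speaker N: text", and an average
# speaking rate of about 150 words per minute for the duration estimate.
MAX_SPEAKERS = 4          # speaker limit quoted for VibeVoice-1.5B
MAX_MINUTES = 90          # session length quoted for VibeVoice-1.5B
WORDS_PER_MINUTE = 150    # assumed speaking rate, not a model parameter

TURN_PATTERN = re.compile(r"^\s*(Speaker\s+\d+)\s*:\s*(.+)$")


def check_script(script: str) -> dict:
    """Parse speaker turns and flag scripts that exceed the speaker or length limits."""
    speakers: list[str] = []
    word_count = 0
    for line_no, line in enumerate(script.splitlines(), start=1):
        if not line.strip():
            continue  # blank lines between turns are allowed
        match = TURN_PATTERN.match(line)
        if match is None:
            raise ValueError(f"line {line_no} is not a 'Speaker N: text' turn")
        label, text = match.groups()
        speaker = " ".join(label.split())  # normalise "Speaker   2" to "Speaker 2"
        if speaker not in speakers:
            speakers.append(speaker)  # keep first-appearance order
        word_count += len(text.split())
    minutes = word_count / WORDS_PER_MINUTE
    return {
        "speakers": speakers,
        "estimated_minutes": round(minutes, 1),
        "too_many_speakers": len(speakers) > MAX_SPEAKERS,
        "too_long": minutes > MAX_MINUTES,
    }


demo = """Speaker 1: Welcome back to the show.
Speaker 2: Thanks for having me.
Speaker 1: Let's talk about open-source speech models."""
print(check_script(demo))
```

Because each line holds exactly one turn, this format has no way to express speakers talking over each other, which matches the model's lack of support for overlapping speech.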

What Are Its Limitations?

Before you dive in, be aware of a few limitations:

The model currently supports only English and Chinese; using other languages may produce poor or unintended results.

It does not support overlapping speech (interrupting speakers), and it generates speech only, no background music or sound effects.

Microsoft prohibits misuse such as voice impersonation, disinformation, or bypassing authentication systems. You must disclose AI-generated content where required.

This version isn’t built for real-time use; it’s aimed at research and offline applications. A more powerful 7B streaming version is expected soon.

Why Does It Matter for Your Work?

VibeVoice-1.5B represents a major step forward in making high-quality, multi-voice TTS accessible. Whether you’re developing voice assistants, creating audio content, or advancing speech synthesis research, this model offers a scalable, open foundation to build upon.

While it’s research-focused for now, its capabilities hint at a future where generating natural, expressive, and long-form speech is easier than ever.

AI Projects Can't Kill Open Source Because They Depend on It

The rise of AI is reshaping the tech job market, particularly for new computer science graduates. Some companies believe AI can replace junior developers, contributing to a sharp downturn in entry-level hiring.

At the same time, AI is accelerating the volume of code and pull requests in open source, suggesting to some that fewer human developers may be needed in the future.

However, this perspective overlooks a critical reality: while AI can generate code, it often falls short in quality, creativity, and problem-solving compared to skilled engineers.

This sentiment was echoed by Jim Zemlin, executive director of the Linux Foundation, during his keynote at Open Source Summit Europe.

Zemlin highlighted the struggles of computer science graduates, including one talented individual who, after sending hundreds of résumés, could only secure a job at Chipotle, a role unrelated to coding.

Zemlin also cited AWS CEO Matt Garman, who called the idea of replacing junior developers with AI “the dumbest thing I ever heard of,” emphasizing that junior workers often bring fresh perspectives and play crucial roles within companies.

From #OSSummit: Jim Zemlin's keynote.

— Dakshitha Ratnayake (@techieducky)

7:59 AM • Aug 25, 2025

Despite these challenges, the role of developers is undeniably evolving. AI’s potential is too significant to ignore, but its integration should enhance, not replace, human capability.

As Zemlin urged, the conversation should focus on “how to leverage and harness the power of AI to make our communities stronger,” ensuring that open source remains driven by “hyper-productive” developers rather than empty rooms.

VC Investment and Open Source’s Superior Performance

Despite AI’s disruptive force, venture capital continues to flow into open source. According to the State of Commercial Open Source 2025 Report by the Linux Foundation, Serena VC, and the Commercial Open Source Startup Alliance (COSSA), aggregate funding for commercial open source (COSS) startups reached $26.4 billion in 2024.

These startups significantly outperform their closed-source peers, with:

7x higher valuations at IPO

14x greater valuations in mergers and acquisitions

Matthieu Lavergne, partner at Serena VC and co-author of the report, emphasized that open source is not just viable but a “superior” strategy, especially for infrastructure software.

AI’s Challenges and Open Source’s Enduring Strength

AI introduces new complexities for open source maintainers. Daniel Stenberg, founder of the widely used cURL project, described the struggles of maintaining a critical tool amid AI-generated contributions.

Yet, he remains optimistic, believing open source will grow stronger because of these challenges.

We are super excited to release OpenCUA — the first from 0 to 1 computer-use agent foundation model framework and open-source SOTA model OpenCUA-32B, matching top proprietary models on OSWorld-Verified, with full infrastructure and data.

🔗 [Paper] arxiv.org/abs/2508.09123

— Xinyuan Wang (@xywang626)

4:59 PM • Aug 15, 2025

Pallavi Priyadarshini, engineering head at Amazon OpenSearch Service, noted that open source is more important than ever in the AI era:

The innovations are so rapid that no one company or team can keep up…. The beauty of open source is that foundational blocks are available out of the box, and contributors continuously improve them.

The narrative that AI will eliminate the need for developers is not only premature but misguided. Instead, AI should be viewed as a tool that, when combined with human expertise, can drive unprecedented innovation in open source and beyond.

The future will likely belong to those who can integrate AI thoughtfully while nurturing the next generation of developers.

In OpenAI Feud, Musk Seeks to Bury a Paper Trail Linked to Meta

Elon Musk's attorneys have formally requested that a U.S. judge prevent OpenAI from acquiring documents from Meta Platforms concerning Musk's previous bid for OpenAI's assets.

This legal maneuver represents the latest development in the ongoing dispute between the billionaire entrepreneur and the artificial intelligence research organization.

The controversy stems from revelations that Musk had approached Meta CEO Mark Zuckerberg earlier this year to participate in a substantial $97.4 billion offer for OpenAI's assets.

According to OpenAI's statements, Zuckerberg ultimately declined to join this acquisition attempt. Following these disclosures, OpenAI sought court authorization to obtain Meta's records and communications related to any potential bids for the company.

Elon Musk played a significant role in the creation of OpenAI at the time he was concerned that Google was not paying attention to the A.I. Safety.

But Sam Altman transformed this open-source, non-profit company into a closed-source, for-profit company. He cannot be trusted.

— DogeDesigner (@cb_doge)

12:26 AM • Jul 21, 2025

In response to OpenAI's request, Meta maintained that the AI firm should pursue these documents directly from Musk and his artificial intelligence startup, xAI, rather than through Meta's records.

Musk's legal representatives supported this position, asserting that OpenAI had already received all pertinent documentation from Musk and xAI regarding the bid matter. They characterized OpenAI's additional discovery efforts as excessively broad and unrelated to the current stage of legal proceedings.

OpenAI's legal team countered these arguments, denying that their document requests were expansive or unreasonable. They emphasized that their discovery efforts were specifically targeted and covered a limited timeframe.

The organization's lawyers further explained that if Musk's communications regarding the bid were primarily verbal rather than documented, as has been suggested, then conducting depositions with Musk, an xAI representative, and other potential bidders becomes particularly necessary for establishing the facts of the case.

Elon Musk played a key role in the creation of OpenAI, even coming up with the name “OpenAI” to reflect its commitment to being open source.

But Sam Altman transformed this open-source, non-profit company into a closed-source, for-profit company.

— DogeDesigner (@cb_doge)

12:59 AM • Jun 17, 2025

This legal conflict originated when Musk sued OpenAI and its CEO, Sam Altman, in 2024, challenging the company's transition to a for-profit business model.

OpenAI filed a countersuit in April 2025, alleging that Musk had engaged in a campaign to damage the company through various means, including public statements, social media posts, legal claims, and what they characterize as a disingenuous acquisition offer.

A U.S. district judge has ruled that Musk must address these allegations, with a jury trial scheduled to begin in spring 2026.

Your opinion matters!

Hope you loved reading this edition of our newsletter as much as we enjoyed writing it.

Share your experience and feedback with us below, because we take your critique seriously.

How did you like today's edition?

Thank you for reading

-Shen & Towards AGI team