Towards AGI


Is Microservices Overkill? The Cons of Distributed Architecture in AI

The GenAI Microservices Dilemma.

Here is what’s new in the AI world.

AI news: How Microservices Help and Hinder Generative AI

Hot Tea: The AI Image Pecking Order Shifts

Open AI: $58M Boost for AI Threat Defense

OpenAI: AI Frenzy: Bubble or New Normal?

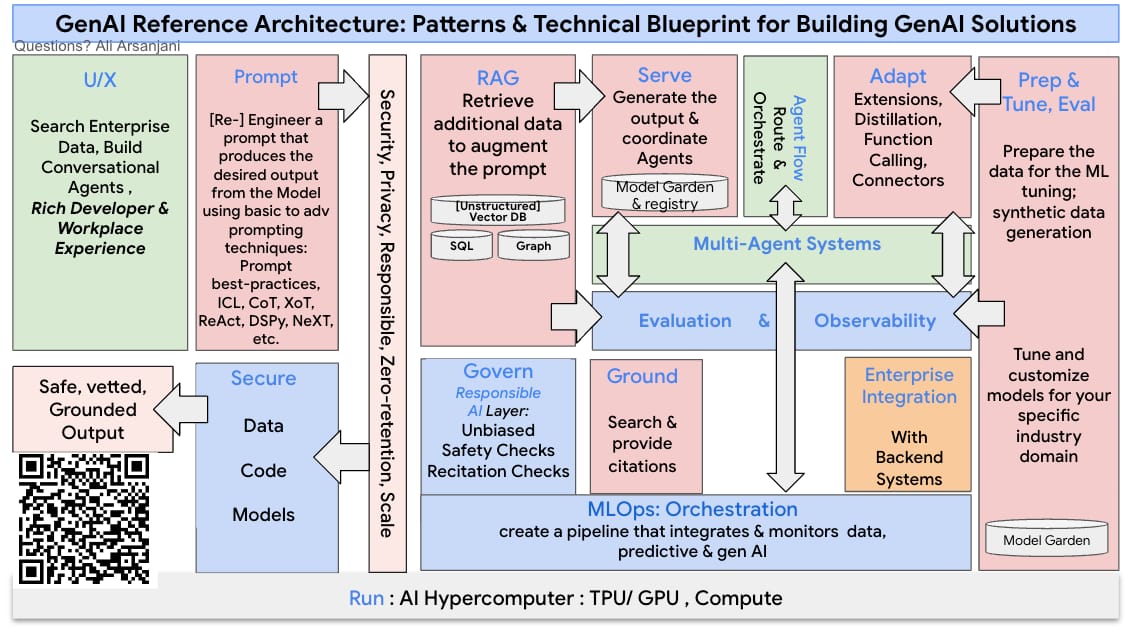

Agility vs. Complexity: The Microservices Trade-Off in GenAI Architectures

You've probably seen the stats: nearly 40% of the U.S. population now uses generative AI, and 24% of workers are using it on the job. This explosive growth has sparked a heated debate about the best way to build these systems.

While microservices are often assumed to be the natural fit, you need to look beyond the trend and ask: where does the real value lie for your project?

The Core Choice: Monolith vs. Microservices

The value of your software architecture boils down to cost, both upfront and ongoing. For your generative AI project, starting with a monolith is often more budget-friendly, quicker, and simpler.

You have fewer technologies to learn, less operational complexity, and only one application to manage. In the early stages, this simplicity is a strategic advantage, allowing you to develop features and test changes rapidly.

However, as your AI system grows, the monolithic approach can begin to hold you back. The cost of making updates increases, risks multiply as the codebase expands, and redeploying the entire system for a small change becomes routine.

This slows innovation and raises the risk of outages. Debugging and testing also become much more challenging.


Switching to microservices initially increases your costs. You'll need to invest in orchestration platforms, secure networks, observability tools, and complex CI/CD pipelines.

The required skills in containerization and distributed systems are expensive to hire for or learn. This complexity is the entry fee you pay for future benefits like flexibility and rapid scaling.

To justify it, you must have a clear, lasting need to evolve parts of your system independently.

Where Microservices Empower You

Microservices shine when rapid, independent evolution isn't just a convenience but a necessity for you. If your generative AI system must constantly integrate new models, run parallel experiments, or offer real-time analytics, this modularity becomes a key advantage.

You can update an inference engine or swap a component quickly with less risk to the whole system.
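To make "swap a component quickly" concrete, here is a minimal Python sketch built around a hypothetical `InferenceEngine` contract. Callers depend only on the interface, so a newer engine can replace the old one through configuration. The class names are illustrative stand-ins, not real models. In a microservices setup the contract would typically be a versioned network API rather than a Python `Protocol`, but the principle is the same.

```python
from typing import Protocol


class InferenceEngine(Protocol):
    """Hypothetical contract that every inference backend must satisfy."""

    def generate(self, prompt: str) -> str: ...


class EchoEngineV1:
    """Stand-in for the current model: simply echoes the prompt."""

    def generate(self, prompt: str) -> str:
        return f"v1: {prompt}"


class UppercaseEngineV2:
    """Stand-in for a newer model that replaces V1 without touching callers."""

    def generate(self, prompt: str) -> str:
        return f"v2: {prompt.upper()}"


def handle_request(engine: InferenceEngine, prompt: str) -> str:
    # Callers only know the contract, so swapping engines is a configuration change.
    return engine.generate(prompt.strip())


# Hypothetical registry; in practice the active key might come from config or a flag.
ENGINES = {"v1": EchoEngineV1(), "v2": UppercaseEngineV2()}
active = ENGINES["v2"]
print(handle_request(active, "  hello  "))
```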

You'll also benefit from superior scalability. If your system faces fluctuating demand, microservices let you scale specific parts, like model inference or data retrieval, without overprovisioning your entire stack. This optimizes your resource use and aligns costs with actual usage.
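A back-of-the-envelope sketch of the scaling argument, using made-up throughput and price figures for three hypothetical components. It assumes every request touches all three, so the replica count of a monolith is set by its slowest part, while separate services each scale to their own load.

```python
import math


def replicas_needed(peak_rps: float, rps_per_replica: float) -> int:
    """Smallest replica count that covers peak load (no headroom added)."""
    return math.ceil(peak_rps / rps_per_replica)


# Hypothetical per-component figures, not measurements:
# name: (peak requests/sec, requests/sec one replica can serve, cost per replica-hour)
components = {
    "inference": (120.0, 10.0, 3.00),
    "retrieval": (120.0, 60.0, 0.40),
    "api_gateway": (120.0, 200.0, 0.10),
}

# Microservices: each component scales to its own bottleneck.
per_service_cost = sum(
    replicas_needed(peak, cap) * price for peak, cap, price in components.values()
)

# Monolith: every replica bundles all components, so the slowest one sets the count.
monolith_replicas = max(replicas_needed(peak, cap) for peak, cap, _ in components.values())
monolith_cost = monolith_replicas * sum(price for _, _, price in components.values())

print(f"per-service: ${per_service_cost:.2f}/h, monolith: ${monolith_cost:.2f}/h")
```

With these assumed numbers the per-service layout comes out cheaper, but the model deliberately ignores the orchestration, networking, and observability overhead described earlier, which is exactly the cost that has to be weighed against such savings.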

Resilience is another major advantage. In a monolith, a minor fault in one pipeline can take down your entire service. Microservices contain failures, allowing you to perform targeted rollbacks and keep the platform running even when parts are degraded.
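One common way services contain failures is a circuit breaker: after repeated errors from a dependency, callers stop hitting it for a while and serve a degraded fallback instead. The sketch below is a simplified, single-threaded illustration under our own assumptions (a hypothetical reranker dependency and a plain-text fallback); production systems usually rely on a tested library or a service mesh rather than hand-rolled code.

```python
import time
from typing import Callable, Optional


class CircuitBreaker:
    """Minimal circuit breaker: after `max_failures` consecutive errors it
    opens and serves the fallback until `reset_after` seconds have passed."""

    def __init__(self, max_failures: int = 3, reset_after: float = 30.0) -> None:
        self.max_failures = max_failures
        self.reset_after = reset_after
        self.failures = 0
        self.opened_at: Optional[float] = None

    def call(self, fn: Callable[[], str], fallback: Callable[[], str]) -> str:
        if self.opened_at is not None:
            if time.monotonic() - self.opened_at < self.reset_after:
                return fallback()  # open: skip the failing dependency entirely
            self.opened_at = None  # half-open: allow one trial call through
        try:
            result = fn()
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = time.monotonic()  # trip (or re-trip) the breaker
            return fallback()
        self.failures = 0  # a success closes the circuit again
        return result


def flaky_reranker() -> str:
    # Hypothetical downstream service that is currently down.
    raise ConnectionError("reranker service unavailable")


breaker = CircuitBreaker(max_failures=2, reset_after=10.0)
for _ in range(3):
    # The third call is answered from the open circuit without calling the reranker.
    print(breaker.call(flaky_reranker, fallback=lambda: "unranked results"))
```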

Furthermore, microservices support modern development practices. Your teams can release updates, run tests, and perform rollbacks independently, leading to shorter, less risky innovation cycles and a faster response to user feedback.

Where Microservices Can Hold You Back

Not every project needs microservices. If you're on a small team, building a focused app, or running a proof-of-concept, the added complexity can easily outweigh the benefits.

If your generative AI solution is stable with few model updates, a simple monolith is faster to develop, less error-prone, and easier to manage. The cost of maintaining distributed systems can create more operational burden than advantage.


A lack of in-house expertise in distributed systems amplifies these challenges. The steep learning curve can lead to inefficiencies, outages, and technical debt. Even in larger teams, microservices can blur accountability when ownership of each service, and of the contracts between services, is not clearly assigned.

Adding network calls between services increases latency and creates new failure points. Debugging a performance issue across multiple services is far more complex than within a single monolith.
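The cost of extra network hops can be estimated with simple arithmetic. Assuming a request passes through services in sequence and each hop fails independently, the added latency roughly sums, and end-to-end availability is the per-hop availabilities multiplied together, so it is always lower than that of the weakest single hop. The service names and figures below are hypothetical.

```python
from math import prod

# Hypothetical chain of services a single request passes through in sequence:
# (service name, approximate latency the hop adds in ms, availability as a fraction)
hops = [
    ("gateway", 5.0, 0.999),
    ("retrieval", 40.0, 0.999),
    ("inference", 800.0, 0.995),
    ("safety_filter", 30.0, 0.999),
]

# Rough estimate: sequential hops add their latencies together.
total_latency_ms = sum(latency for _, latency, _ in hops)

# If every hop must succeed and failures are independent, availabilities multiply.
end_to_end_availability = prod(avail for _, _, avail in hops)

print(f"added latency: {total_latency_ms:.0f} ms")
print(f"end-to-end availability: {end_to_end_availability:.4%}")
```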

Making a Value-Driven Decision for Your Architecture

Ultimately, the right architecture depends entirely on your context. You should choose microservices when your generative AI system is ambitious, rapidly evolving, and requires resilience, scalability, and fast experimentation.

However, if your project has stable needs, a slower pace of change, or a strong preference for operational simplicity, you might see little benefit from microservices and could even face negative outcomes.

Your decision should be based on your organization's specific needs, resources, and expertise. By focusing on these value drivers, you can ensure your generative AI platform delivers long-term benefits, rather than just becoming another tech fad.

Whichever architectural path you choose, the success of your generative AI system hinges on one common, critical element: high-quality, well-managed data.

Whether you're operating a monolithic prototype or a complex mesh of microservices, flawed data will undermine your model's performance, scalability, and reliability.

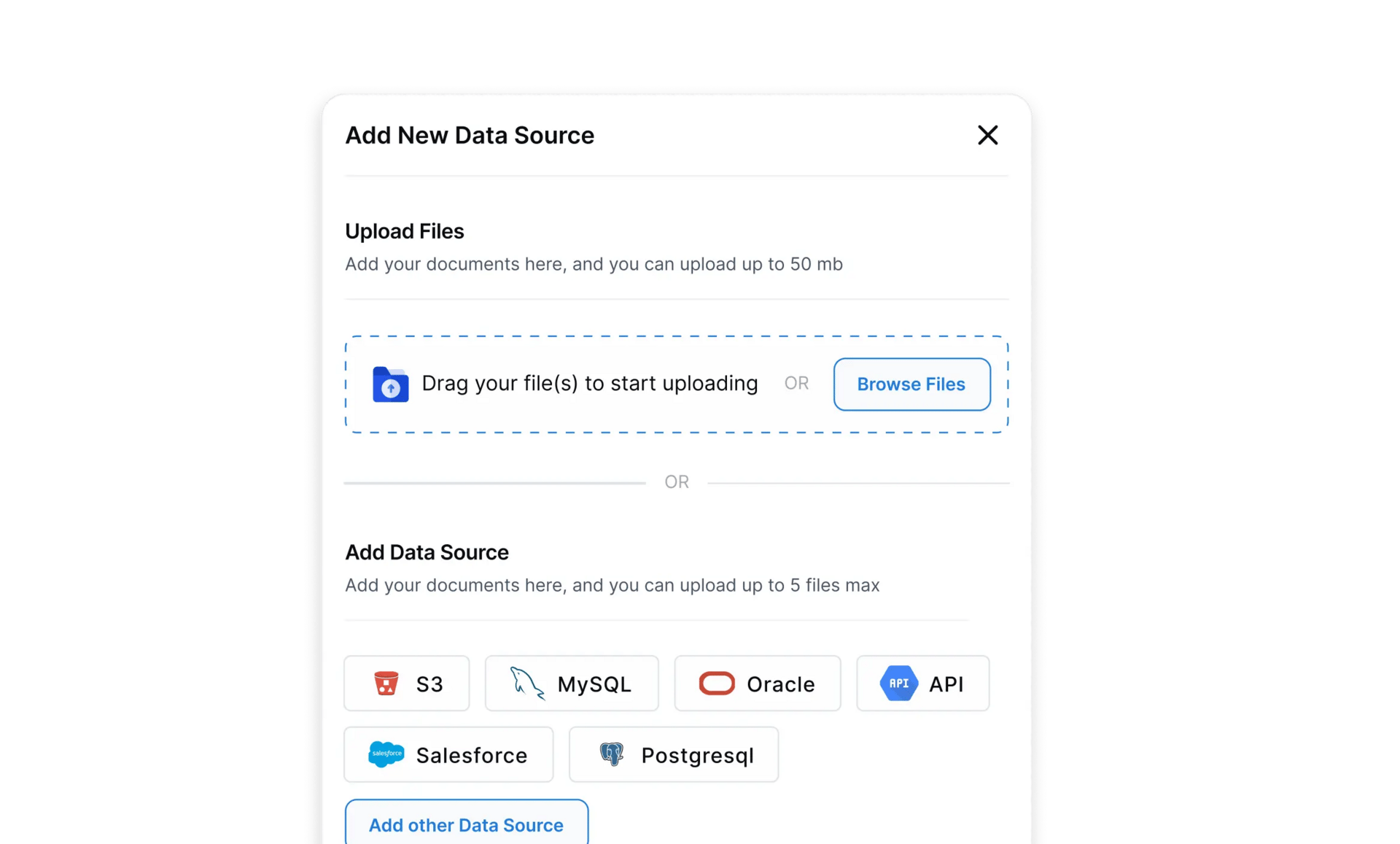

This is where a strategic approach to data management becomes your most significant competitive advantage. A platform like DataManagement.AI is specifically designed to tackle this core challenge.

It provides the essential foundation for both monolithic and microservices architectures by enabling you to:

Systematically manage your AI data lifecycle, from collection and labeling to validation and versioning.

Ensure data quality and consistency across all your services, preventing the "garbage in, garbage out" problem that plagues AI initiatives.

Implement robust data governance by tracking lineage and ensuring compliance, which is crucial when deploying AI at scale.

By integrating a data-centric philosophy from the start, you empower your chosen architecture to perform at its best. DataManagement.AI helps you build that solid data foundation, ensuring your investment in AI, whether in a simple monolith or a distributed system, delivers breakthrough value and stands the test of time.

Tencent Hunyuan Image 3.0 Surpasses Midjourney v6 and DALL-E 3 in Key Benchmark

Tencent's Hunyuan Image 3.0 has taken the lead in the text-to-image AI field, surpassing Google DeepMind's flagship model, Gemini 2.5 Flash Image (Nano Banana), on the prominent LMArena benchmark.

This achievement is significant because it demonstrates that open-source AI can now compete directly with the top-tier, proprietary models developed by industry giants.

The model is the largest of its kind in the open-source community, boasting 80 billion parameters.

We are excited to introduce Hunyuan-Vision-1.5-Thinking, our latest and most advanced vision-language model. Hunyuan-Vision-1.5-Thinking is ranked No. 3 in @arena, and the model is now available on Tencent Cloud. The model and technical report will be released in late October.

— Hunyuan (@TencentHunyuan)

1:52 AM • Oct 7, 2025

Parameters are the learned weights that encode a model's knowledge and capabilities; a higher count generally indicates a more capable and nuanced model, though it also requires substantially more computing power to run.
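To put "substantial computing power" into rough numbers: just storing the weights takes about (number of parameters) × (bytes per parameter). The sketch below applies that to the 80-billion-parameter figure at a few common numeric precisions. It ignores activations, caches, and runtime overhead, so real memory requirements are higher, and it says nothing about how much compute each generation step needs.

```python
def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Memory needed just to hold the weights, in gigabytes (1 GB = 1e9 bytes).
    Ignores activations, caches, and framework overhead."""
    return num_params * bytes_per_param / 1e9


PARAMS = 80e9  # the 80-billion-parameter figure quoted for Hunyuan Image 3.0
for precision, nbytes in [("fp32", 4), ("bf16", 2), ("int8", 1)]:
    print(f"{precision}: ~{weight_memory_gb(PARAMS, nbytes):.0f} GB")
```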

Tencent states that Hunyuan Image 3.0 is "completely comparable" to the best closed-source models available.

Visual examples of its output, such as a detailed image of a Star Ferry-inspired spacecraft traveling through a wormhole, showcase its advanced ability to interpret and generate complex scenes from text descriptions.

This milestone sets a new benchmark for scale and performance in open-source AI. It provides developers and researchers with a powerful, publicly accessible tool and challenges the long-held dominance of proprietary AI technologies, signaling a major shift in the competitive landscape.

But to truly challenge the status quo, these powerful open-source models need to be seamlessly integrated into the applications and workflows where they can deliver real-world value.

The next frontier isn't just building better models; it's building smarter systems that can dynamically connect models, data, and tools to act autonomously.

This is where the future of AI is headed, and Towards MCP is at the forefront. As a thought leadership platform dedicated to navigating the path to Artificial General Intelligence, Towards MCP provides the essential insights and frameworks for this next leap.

It explores how protocols like the Model Context Protocol (MCP) are creating a universal standard for AI interoperability, allowing open-source giants like Hunyuan to work seamlessly with other models, data sources, and applications.
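As a concrete glimpse of what that interoperability looks like on the wire: MCP messages are JSON-RPC 2.0, and the specification defines methods such as `tools/list` and `tools/call`. The sketch below only builds two such requests as JSON strings. The `generate_image` tool and its `prompt` argument are hypothetical, and a real client would first perform the `initialize` handshake and send messages over a transport such as stdio or HTTP.

```python
import json
from itertools import count
from typing import Optional

_ids = count(1)  # JSON-RPC request ids must be unique within a session


def jsonrpc_request(method: str, params: Optional[dict] = None) -> str:
    """Serialize a JSON-RPC 2.0 request of the kind MCP clients send."""
    msg = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        msg["params"] = params
    return json.dumps(msg)


# Ask a server which tools it exposes, then call one of them.
list_tools = jsonrpc_request("tools/list")
call_tool = jsonrpc_request(
    "tools/call",
    # Hypothetical tool name and arguments, for illustration only.
    {"name": "generate_image", "arguments": {"prompt": "spacecraft in a wormhole"}},
)
print(list_tools)
print(call_tool)
```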

Filigran's $58M Round for Open-Source AI Threat Intelligence

In an era where AI-powered threats are escalating, the value of threat intelligence has never been greater. However, organizations struggle to afford, process, and act on this data in real time.

French cybersecurity startup Filigran is addressing this exact challenge, helping enterprises unlock the full potential of threat intelligence to prioritize alerts, investigate incidents, and optimize their security tools.

The company has just announced a $58 million Series C funding round, led by Eurazeo with participation from Deutsche Telekom and existing investors Accel and Insight Partners.

$58M Series C raised! 🚀 Led by @eurazeo , with Deutsche Telekom (T.Capital), @Accel & @insightpartners . Funding fuels XTM Suite expansion, new platforms & global growth.

👉 C what’s next: filigran.io/fueling-our-ne…

#SeriesC#Cybersecurity

— Filigran (@FiligranHQ)

8:00 AM • Oct 6, 2025

This latest injection brings Filigran's total capital raised to over $100 million, following a Series A round last year and a seed round in 2023.

Expansion and Strategic Goals

This new capital will fuel a significant expansion of Filigran's product suite and global presence. Key initiatives include:

Launching OpenGRC: A new platform designed to help organizations prioritize cyber risks and take the most effective preventive actions, bridging the critical gap between threat detection and strategic decision-making.

Building an Agent Platform: Developing a specialized platform to enhance real-time capabilities and deepen integrations across its ecosystem of tools.

Global Market Expansion: Strengthening its foothold in the US and Europe while entering new markets in the Pacific and Middle East, signaling its ambition to become a global leader.

A Next-Generation, Open-Source Approach

Founded in 2022 by Samuel Hassine and Julien Richard, Filigran builds innovative open-source solutions to help organizations anticipate cyber risks.

Hassine brings over 15 years of experience from agencies like the French National Agency for the Security of Information Systems (ANSSI), while Richard contributes more than 20 years of expertise in big data and AI product engineering.

The company's core mission is to reduce the barriers to threat intelligence adoption. Its open-source model fosters a collaborative community where enterprises, governments, and researchers can collectively contribute to and benefit from shared cyber intelligence.

This approach has led to rapid adoption, with over 6,000 organizations, including high-profile clients like the FBI and the European Commission, now relying on Filigran's tools to protect their sensitive data and operations.

Core Technology: OpenCTI and OpenBAS

Filigran's success is built on its flagship open-source platforms:

OpenCTI: A threat intelligence platform that aggregates and analyzes data from sources like CrowdStrike. It helps security teams worldwide detect, understand, and respond to threats faster, and its popularity is reflected in millions of downloads.

OpenBAS: A simulation platform that replicates real-world cyberattacks, allowing organizations to proactively test their resilience and identify vulnerabilities before they can be exploited.

Together, these tools embody Filigran's proactive strategy: empowering organizations to stay ahead of threats rather than just reacting to them.

As CEO Samuel Hassine put it: “This Series C round is an important milestone... It reflects everyone’s commitment to achieving our mission, which is to make threat intelligence accessible and actionable for all organisations worldwide.”

OpenAI's Simo Says AI Frenzy is Rational and Enduring

In her first interview since becoming OpenAI's CEO of Applications, Fidji Simo pushed back against concerns that the massive spending on AI infrastructure is a bubble, arguing it is instead the "new normal" required to meet explosive user demand.

Simo stated that the current investments in computing power, or "compute," are a direct response to a severe shortage that is limiting the company's ability to serve all the potential use cases for its technology.

She pointed to the high demand for its video generator, Sora, as a prime example.

“From that perspective, I really do not see that as a bubble. I see that as a new normal, and I think the world is going to switch to realizing that computing power is the most strategic resource.”

On Addressing AI's Risks and Societal Impact

When asked about the dangers of AI, Simo framed her role as ensuring the technology's benefits are realized while its downsides are mitigated. She highlighted several key areas:

Mental Health: Simo noted that many users already turn to ChatGPT for mental health advice when other resources are unavailable. To ensure safety, OpenAI has introduced parental controls and is developing "age prediction" technology. This would allow the platform to provide a more restricted and safer version of ChatGPT to teenagers.

Jobs: Acknowledging that AI will impact certain professions, Simo emphasized that it will also create new roles, such as prompt engineering. She stated that OpenAI sees its role as helping to manage this transition for the workforce.

The Next Frontier: Proactive AI

Looking ahead, Simo described the next major breakthrough as AI that moves beyond answering questions to proactively understanding and accomplishing user goals.

Every company wants to deploy agents.

Every company finds it cumbersome.

We’re addressing that with AgentKit!

— Fidji Simo (@fidjissimo)

6:44 PM • Oct 6, 2025

She illustrated this with an example: an AI that, upon learning a user wants to spend more time with their spouse, would not only suggest weekend getaways but also handle all the planning and make reservations, requiring only a single tap of approval.

On Global Competition and Regulation

Addressing the adage that "America innovates, China copies, Europe regulates," Simo, who is French-born, admitted the saying breaks her heart. She expressed that Europe has focused "a little too much" on regulation.

Regarding China, she stressed the importance of maintaining a competitive lead, noting that China is investing heavily in AI innovation and computing power. She argued it is "incredibly important" for democratic nations to continue advancing AI that reflects their values.

A Personal Touch: Letting Her Child Use ChatGPT

While ChatGPT's terms of service require users to be 13 or older, Simo allows her 10-year-old daughter to use it under supervision. She described the experience as "magical," noting how her daughter used the tool to create banners and taglines for an imaginary business.

"In our childhood, we couldn't turn our imagination into something real that fast," Simo said. "And I see that really giving her superpowers, where she thinks anything is possible."

Your opinion matters!

We hope you enjoyed reading this newsletter as much as we enjoyed writing it.

Share your experience and feedback with us, because we take your critique seriously.


Thank you for reading

-Shen & Towards AGI team