
Is GenAI the Last Use Case We'll Ever Need to Invent?

The End of the Roadmap?

Here is what’s new in the AI world.

AI news: The Infinite Use Case Machine

Open-Source AI: DeepSeek-OCR Takes GitHub by Storm

Hot Tea: OpenAI's Secret Wall Street Automation Army

OpenAI: The OpenAI Browser is Here

How GenAI Becomes the Engine of Innovation

You've likely heard the debate: is AI a bubble about to burst? In your position, you need to think beyond the hype. AI is not a bubble; it's a permanent shift. For your enterprise, the most transformative application isn't a single function; it's Generative AI.

But you must look past the chatbots and summarization tools. For you, Generative AI should be a foundational capability, a core utility that will power every other intelligent application across your organization.

Your Personal Experience is the Preview

You probably use tools like ChatGPT or Gemini daily. They've become extensions of your own workflow for drafting, researching, and brainstorming.

This is GenAI's first power: democratization. It puts a digital knowledge worker at everyone's fingertips. Once your team experiences this leap in capability, there's no going back.

The Real Enterprise Shift Happens Inside Your Firewall

You might be experimenting with enterprise versions of Copilot or ChatGPT. These are useful starting points, but they are only the surface. The real transformation begins when you stop renting AI and start building it.

Tech is reshaping our societies + economies. Without strategic investment, we risk reinforcing inequality.

3 priorities to transform this risk into opportunity:

1. Invest in connectivity infrastructure

2. Make digital services affordable

3. Ensure everyone has AI skills.

#MWC25— Doreen Bogdan-Martin (@ITUSecGen)

1:35 PM • Oct 21, 2025

Imagine deploying an open-source model such as Llama or Mistral and fine-tuning it on your company's own data: every process document, policy manual, and technical spec. The result isn't a chatbot; it's an intelligent core that knows your business better than any consultant.

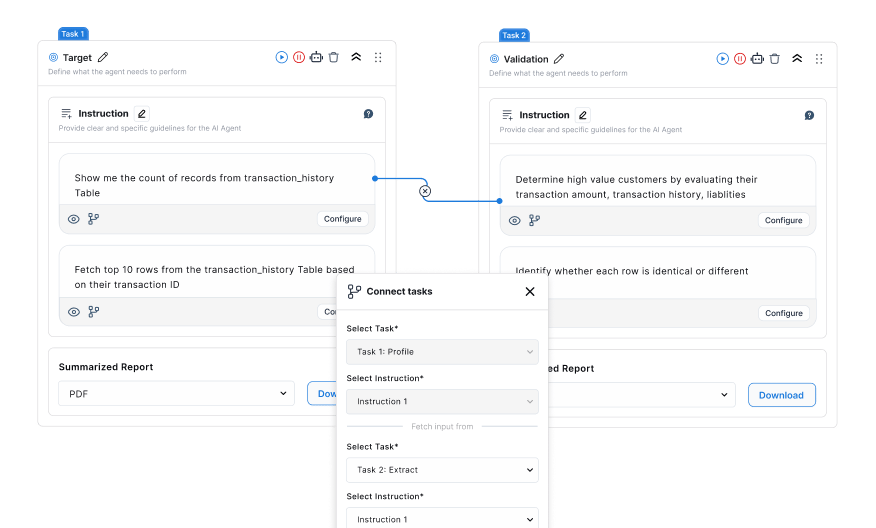

This becomes a factory for use cases, not just a single tool. From this one foundation, you can spawn:

An analytics assistant for your leadership team that explains data in plain English.

A real-time trainer for your field staff, guiding them through complex procedures.

An auditor who reviews thousands of compliance documents in hours.

A recruiter who understands cultural fit beyond keywords.

These aren't separate, expensive projects. They are all outputs from the same centralized GenAI brain you've built.

Why You Must Build Your Own AI Foundation

You might wonder why you shouldn't just keep using off-the-shelf solutions. The answer lies in control, security, and adaptability.

If AI is to become the core of your operations, you cannot risk your most sensitive data leaving your environment. You need predictable performance and the ability to tailor the AI's knowledge precisely to your evolving needs. You need to own the intelligence, not just rent it.

What You Need to Get Right to Succeed

Building this foundation isn't plug-and-play. For your GenAI initiative to succeed as a true use-case factory, you must focus on three pillars:

Your Data Readiness: Your AI will only be as smart as the data you feed it. If your knowledge is siloed, messy, or inaccessible, your AI will be unreliable. Garbage in, garbage out has never been more consequential.

Your Governance & Security: An open-source model trained on your proprietary data creates new risks. You must implement strong guardrails for privacy, compliance, and intellectual property from day one.

Your Leadership & Vision: This is the most common failure point. You cannot treat this as just another IT project. You need a clear strategic vision: Why are we doing this? What business outcomes are we driving? Without your leadership, the initiative will fragment and underdeliver.

Think of integrating GenAI like hiring a new, incredibly capable executive team. If you bring them in without a clear mandate, proper training, or alignment with your vision, they will fail.

Train your AI on the right data, govern it properly, and align it with your strategy, and it will perform like your most valuable internal team.

For your enterprise, Generative AI is a dual-force multiplier. It is both a powerful use case and the factory that produces all future use cases.

🚨 New study reveals that when used to summarize scientific research, generative AI is nearly five times LESS accurate than humans.

Many haven't realized, but Gen AI's accuracy problem is worse than initially thought.

— Luiza Jarovsky, PhD (@LuizaJarovsky)

3:36 PM • May 19, 2025

If you treat enterprise GenAI as a one-off project with a fixed budget and timeline, you will fail. It is a continuous, evolving capability, much like developing new products or entering new markets.

You need to define a yearly budget to keep building and evolving your enterprise AI.

The question is no longer if you should adopt it, but how you will build it with clarity, purpose, and control. The organizations that get this right won't just adapt to the future; they will define it.

This is precisely where DataManagement.AI becomes your strategic partner. Our platform provides the essential control plane to ensure your annual AI investment delivers maximum ROI.

By unifying your data foundation with intelligent governance and observability, DataManagement.AI gives you the clarity to prioritize projects, the purpose to align AI with business outcomes, and the control to scale confidently.

We help you transform your budget from an IT expense into a strategic engine for growth.

DeepSeek-OCR Takes GitHub by Storm

A new open-source AI model called DeepSeek-OCR is challenging fundamental assumptions about how large models should process information.

Since its release, the project has gained massive traction, amassing over 4,000 GitHub stars overnight and capturing the attention of leading AI experts.

Revolutionary Approach: Visual Information Compression

DeepSeek-OCR’s breakthrough lies in its visual approach to handling text. Rather than treating text as discrete tokens, the model processes it as images, achieving unprecedented information compression.

According to its creators, it can compress content that would normally take about 1,000 text tokens into roughly 100 vision tokens while still decoding it with about 97% precision, a tenfold compression.

🚨 DeepSeek just did something wild.

They built an OCR system that compresses long text into vision tokens literally turning paragraphs into pixels.

Their model, DeepSeek-OCR, achieves 97% decoding precision at 10× compression and still manages 60% accuracy even at 20×. That

— God of Prompt (@godofprompt)

11:22 AM • Oct 20, 2025
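To make these ratios concrete, here is a minimal sketch of the arithmetic, assuming that "compression ratio" means text tokens divided by vision tokens. The precision values are the ones quoted above, not new measurements, and the helper names (`compression_ratio`, `context_saved`) are hypothetical illustrations, not part of DeepSeek-OCR.

```python
# Sketch of the arithmetic behind the reported DeepSeek-OCR compression figures.
# Assumption: compression ratio = text tokens / vision tokens.
# The precision numbers are the figures quoted in this newsletter, not new results.

REPORTED_PRECISION = {10: 0.97, 20: 0.60}  # ratio -> reported decoding precision


def compression_ratio(text_tokens: int, vision_tokens: int) -> float:
    """How many text tokens each vision token stands in for."""
    if vision_tokens <= 0:
        raise ValueError("vision_tokens must be positive")
    return text_tokens / vision_tokens


def context_saved(ratio: float) -> float:
    """Fraction of context freed by swapping text tokens for vision tokens."""
    return 1 - 1 / ratio


for text_tokens in (1_000, 2_000):
    ratio = compression_ratio(text_tokens, vision_tokens=100)
    precision = REPORTED_PRECISION[round(ratio)]
    print(f"{text_tokens} text tokens -> 100 vision tokens: "
          f"{ratio:.0f}x, {context_saved(ratio):.0%} of context saved, "
          f"reported precision {precision:.0%}")
```

Going from 10x to 20x frees only another five percentage points of context (90% to 95%), while the reported precision falls from 97% to 60%, which is why the 10x operating point is the one most often cited.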

This method is not only highly accurate but also incredibly efficient: a single NVIDIA A100 GPU can process 200,000 pages of data per day using this approach, potentially signaling a major shift in how future models handle input.
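Converting the daily figure into per-second terms shows what it implies in practice. This back-of-the-envelope sketch assumes the 200,000 pages are spread evenly over 24 hours on one GPU; the function names are hypothetical.

```python
# Convert the quoted daily throughput into per-second figures.
# Assumption: 200,000 pages processed evenly over a full 24-hour day on one A100.
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400


def pages_per_second(pages_per_day: float) -> float:
    """Average pages processed each second."""
    return pages_per_day / SECONDS_PER_DAY


def seconds_per_page(pages_per_day: float) -> float:
    """Average GPU time budget per page."""
    return SECONDS_PER_DAY / pages_per_day


rate = pages_per_second(200_000)
budget = seconds_per_page(200_000)
print(f"{rate:.2f} pages/s, about {budget:.2f} s of GPU time per page")
```

This is an average over the whole day: a real pipeline processes pages in batches, so the wall-clock latency of any individual page can differ from this per-page budget.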

Industry Leaders See a Paradigm Shift

The model received significant validation from Andrej Karpathy, former Tesla AI lead and OpenAI co-founder. Describing himself as “a computer vision at heart who is temporarily masquerading as a natural language person,” he said the most “interesting” question the model raises is whether pixels are better inputs to LLMs than text.

Karpathy advocates for this visual-first method as a superior input system for large language models (LLMs), suggesting that all inputs, including plain text, should be rendered as images first.

He argues this approach offers better compression, a more general information stream, and a way to remove the tokenizer altogether. He describes tokenizers as an ugly, separate stage that is not end-to-end and that imports Unicode and byte-encoding problems along with security and jailbreak risks.
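One concrete example of the kind of Unicode problem a text tokenizer inherits: the same visible word can be encoded as different code points, and therefore different bytes. This sketch uses only Python's standard library and does not run any real tokenizer; it simply shows that a byte-level pipeline would receive two distinct inputs where a model reading rendered pixels would typically see the same image.

```python
import unicodedata

# Two strings that both render as "café".
precomposed = "caf\u00e9"   # é as one code point (U+00E9)
decomposed = "cafe\u0301"   # e followed by a combining acute accent (U+0301)

print(precomposed == decomposed)           # False
print(len(precomposed), len(decomposed))   # 4 5
print(precomposed.encode("utf-8"))         # b'caf\xc3\xa9'
print(decomposed.encode("utf-8"))          # b'cafe\xcc\x81'

# NFC normalization makes them equal again, but only if every pipeline remembers to apply it.
print(unicodedata.normalize("NFC", decomposed) == precomposed)  # True
```

A text pipeline has to normalize consistently everywhere to avoid treating these as different words; that is one small instance of the "historical baggage" the pixel-input argument aims to sidestep.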

Access and Implementation

DeepSeek-OCR is publicly available on GitHub and Hugging Face (deepseek-ai/DeepSeek-OCR). The 3-billion-parameter model can be downloaded and run using the Hugging Face transformers library, with code examples provided for NVIDIA GPU inference.
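Before downloading, it helps to estimate how much GPU memory the weights alone will need. This is a rough sketch assuming the 3-billion-parameter figure above and the standard byte sizes of each number format; activations, caches, and framework overhead come on top, and `weight_memory_gib` is a hypothetical helper. It is not the loading code from the repository, whose README remains the authoritative example.

```python
# Rough weight-only memory estimate for a ~3B-parameter model.
# Assumptions: 3e9 parameters; bytes per parameter per format as listed below.
BYTES_PER_PARAM = {"fp32": 4, "bf16": 2, "int8": 1}


def weight_memory_gib(num_params: int, dtype: str) -> float:
    """Memory for the weights alone, in GiB (2**30 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 2**30


for dtype in BYTES_PER_PARAM:
    print(f"{dtype}: {weight_memory_gib(3_000_000_000, dtype):.1f} GiB")
# fp32: 11.2 GiB, bf16: 5.6 GiB, int8: 2.8 GiB
```

In bf16 the weights fit comfortably on a single A100 (which ships in 40 GB and 80 GB variants), leaving headroom for image inputs and batching.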

The repository also includes guidance for PDF processing and model acceleration using vLLM, making it readily accessible for both researchers and developers looking to experiment with this novel approach to text processing.

OpenAI's Hiring Spree of 100 Finance Veterans

OpenAI is recruiting former investment bankers from elite firms like JPMorgan Chase, Morgan Stanley, and Goldman Sachs to train its AI in performing core financial tasks.

The initiative, internally known as Project Mercury, aims to automate the labor-intensive work typically handled by junior analysts, such as building complex financial models for IPOs, restructurings, and leveraged buyouts.

The company has hired over 100 ex-bankers who are paid approximately $150 per hour to write prompts, test AI outputs, and refine the models.

These contractors also receive early access to OpenAI's proprietary financial modeling tools, which are being developed to replicate and eventually streamline the meticulous manual work that defines the early years of an investment banking career.

OpenAi has hired 100+ former bankers from JPMorgan, Goldman Sachs, Brookfield, Evercore as well as Harvard MBAs to train a financial-modelling tool:

> $150 an hour to build models for IPOs, restructuring etc.

> Three step process: 1) 20-minute interview with AI chatbot; 2) test— Bearly AI (@bearlyai)

3:12 PM • Oct 21, 2025

This push comes as OpenAI, despite its recent $500 billion valuation, continues to seek profitable commercial applications for its technology. The finance sector, along with consulting and law, represents a key area for monetization.

The move directly targets one of Wall Street's most notorious pain points: the endless hours junior analysts spend in spreadsheets and presentation decks.

This repetitive workload has long been a source of industry frustration, famously captured in Wall Street's "pls fix" meme culture. The automation of these tasks is now generating both interest and anxiety about the future of entry-level banking roles.

Notably, the hiring process for Project Mercury itself is automated. Candidates undergo a 20-minute AI chatbot interview followed by financial modeling tests.

Those selected must submit one model weekly, with their vetted work being integrated directly into OpenAI's evolving financial AI systems.

OpenAI's New Browser That's Shaking Up the Search Market

OpenAI has entered the competitive web browser market with the launch of ChatGPT Atlas, a new browser built around its generative AI technology.

While the interface resembles traditional browsers, its core functionality integrates ChatGPT directly into the browsing experience, allowing users to generate summaries, ask questions, and complete tasks on any webpage without switching tabs.

Meet our new browser—ChatGPT Atlas.

Available today on macOS: chatgpt.com/atlas

— OpenAI (@OpenAI)

5:20 PM • Oct 21, 2025

The announcement positions OpenAI in direct competition with Google's Gemini-infused Chrome and Perplexity AI's Comet browser. The market reaction was immediate, with shares of Google's parent company, Alphabet, falling roughly 3% following the news.

Key Features of ChatGPT Atlas

Integrated ChatGPT Access: An "Ask ChatGPT" button appears on every webpage, opening a sidebar where users can interact with the AI without interrupting their browsing.

Persistent Memory: The browser remembers user interactions and becomes more personalized over time, enabling functions like searching through web history with natural language prompts.

Agent Mode: For ChatGPT Plus and Pro subscribers, the AI can perform actions like booking flights, making reservations, shopping, and editing documents autonomously. Users can watch the AI navigate the web on their behalf.

Seamless Workflow: Eliminates the need to copy and paste content between tabs, as ChatGPT maintains context across all browsing activity.

During the livestream announcement, OpenAI CEO Sam Altman described AI as a "rare once-a-decade opportunity to rethink what a browser can be," while Product Lead Adam Fry emphasized how Atlas creates a continuous, personalized browsing experience.

Atlas is currently available worldwide on macOS, with Windows, iOS, and Android versions promised soon. It represents OpenAI's ambitious move to redefine how users interact with the internet.

As Altman noted, "There's a lot more to add. This is still early days for this project."

Journey Towards AGI

A research and advisory firm guiding industry and its partners to meaningful, high-ROI change on the journey to Artificial General Intelligence.

Know Your Inference: Maximising GenAI impact on performance and efficiency.

Model Context Protocol: Connect AI assistants to all enterprise data sources through a single interface.

Your opinion matters!

We hope you enjoyed reading this newsletter as much as we enjoyed writing it.

Share your experience and feedback with us below, because we take your critique seriously.

How's your experience?

Thank you for reading

-Shen & Towards AGI team