How NVIDIA is Scaling AI Factories to Gigawatt Power Levels?

NVIDIA's Next Big Build

Here is what’s new in the AI world.

AI news: NVIDIA's Gigawatt AI Factories for Rubin

Hot Tea: When Your Lab AI Starts Asking the Questions

OpenAI: The 10-Gigawatt AI Power Play

Reflection AI: The $2B Open-Source AI Gambit

NVIDIA's Gigawatt AI Factories for Rubin

At the OCP Global Summit, NVIDIA has laid out its vision for the next generation of massive-scale AI infrastructure, moving beyond data centers to what it terms "gigawatt AI factories."

This vision is built on two key pillars: a new, ultra-powerful server architecture and a fundamental shift in data center power delivery.

1. The Vera Rubin NVL144: A Leap in AI Server Design

NVIDIA has unveiled the specs for its next-generation server, the Vera Rubin NVL144. This system is designed from the ground up for massive AI inference and advanced reasoning engines, with several key innovations:

Liquid-Cooled & Modular: It is 100% liquid-cooled for energy efficiency and features a modular design.

Faster Assembly & Service: A central printed circuit board midplane replaces traditional cables, speeding up assembly and maintenance.

Ecosystem Support: Over 50 manufacturing partners are preparing to build systems based on this open MGX architecture, ensuring widespread availability.

2. The NVIDIA Kyber Architecture: The 800-Volt Revolution

Perhaps the most significant shift is the industry-wide move to 800-volt direct current (800 VDC) power infrastructure, a technology pioneered by the electric vehicle industry.

This move away from traditional 415/480 VAC systems is critical for scaling to gigawatt capacities and offers major benefits:

Massive Efficiency Gains: Transmits over 150% more power through the same amount of copper.

Reduced Material Use: Eliminates the need for 200-kg copper busbars per rack, leading to cost savings of millions of dollars and a simpler infrastructure.

Industry Adoption: Major players like Foxconn, CoreWeave, and Vertiv are already designing 800 VDC data centers, with Foxconn building a 40-megawatt facility in Taiwan.
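
To see why a higher distribution voltage helps, here is a minimal Python sketch of the underlying arithmetic. It assumes a hypothetical 1 MW rack and a hypothetical feed resistance, and it treats both voltages as simple DC, so it ignores three-phase AC wiring and power factor. It is not meant to reproduce the 150% figure above, which depends on conductor arrangements this sketch does not model.

```python
# Illustrative only: the rack power and conductor resistance below are
# assumed values for the sake of the arithmetic, not figures from NVIDIA.
RACK_POWER_W = 1_000_000        # hypothetical 1 MW rack
FEED_RESISTANCE_OHM = 0.0001    # hypothetical resistance of the copper feed


def current_amps(power_w: float, volts: float) -> float:
    """Current needed to deliver power_w at a given voltage (I = P / V)."""
    return power_w / volts


def resistive_loss_w(power_w: float, volts: float, resistance_ohm: float) -> float:
    """Heat dissipated in the conductor (P_loss = I^2 * R)."""
    return current_amps(power_w, volts) ** 2 * resistance_ohm


if __name__ == "__main__":
    for volts in (480, 800):
        amps = current_amps(RACK_POWER_W, volts)
        loss = resistive_loss_w(RACK_POWER_W, volts, FEED_RESISTANCE_OHM)
        print(f"{volts} V: {amps:,.0f} A, {loss:,.1f} W lost in the feed")
```

Under these assumptions, the 800 V feed carries 40% less current than the 480 V feed for the same power. Because resistive loss grows with the square of the current, the same conductor sheds about 36% as much heat.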

The NVIDIA Kyber rack system is the successor to Oberon and is engineered to house a staggering 576 Rubin Ultra GPUs by 2027. Its "bookshelf" design, which stacks compute blades vertically, allows for unprecedented density and performance in a single rack.

At #OCPSummit25, we are offering a glimpse into the future of gigawatt #AIfactories.

✅ 50+ NVIDIA MGX partners are gearing up for the NVIDIA Vera Rubin NVL144 along with ecosystem support for NVIDIA Kyber, which connects 576 NVIDIA Rubin Ultra GPUs, built to support increasing

— NVIDIA Data Center (@NVIDIADC)

3:17 PM • Oct 13, 2025

3. Expanding the Ecosystem with NVLink Fusion

Beyond hardware, NVIDIA is strengthening its ecosystem. The NVLink Fusion program allows companies like Intel and Samsung Foundry to seamlessly integrate their custom silicon (CPUs and XPUs) into NVIDIA's data center architecture.

This reduces complexity for AI factories and accelerates the deployment of systems tailored for specific training and inference workloads.

🚀 NVIDIA keeps proving why AI is the new oil.

$NVDA closes at $188.32 (+2.82%), reaffirming investor confidence in the AI hardware narrative.

When the chips lead, the future follows. 🧠⚡️ #NVIDIA #NVDA #AI #Stocks #TechInvesting #Semiconductors #TradingView #MarketInsights

— Mulondo Daniel (@mulondodaniel_)

11:01 AM • Oct 14, 2025

NVIDIA is no longer just selling GPUs; it is architecting the entire industrial-scale infrastructure for the AI era.

By open-sourcing its rack and server designs and leading a consortium of partners in power, cooling, and silicon, NVIDIA is positioning its standards as the foundation for the trillion-dollar AI factories of the future.

This coordinated push on hardware, power, and ecosystem is designed to ensure that the computational backbone for agentic AI and large-scale inference is built on NVIDIA's blueprint.

From Hypothesis to Discovery: The Power of AI-Driven Questioning in Research

A couple of years ago, artificial intelligence probably felt like a distant, futuristic concept to you: interesting, but not yet a tangible part of your daily research workflow. That likely changed for you in late 2022, just as it did for me, with the release of ChatGPT, then powered by GPT-3.5.

You might have thought, "Why not give it a try?"

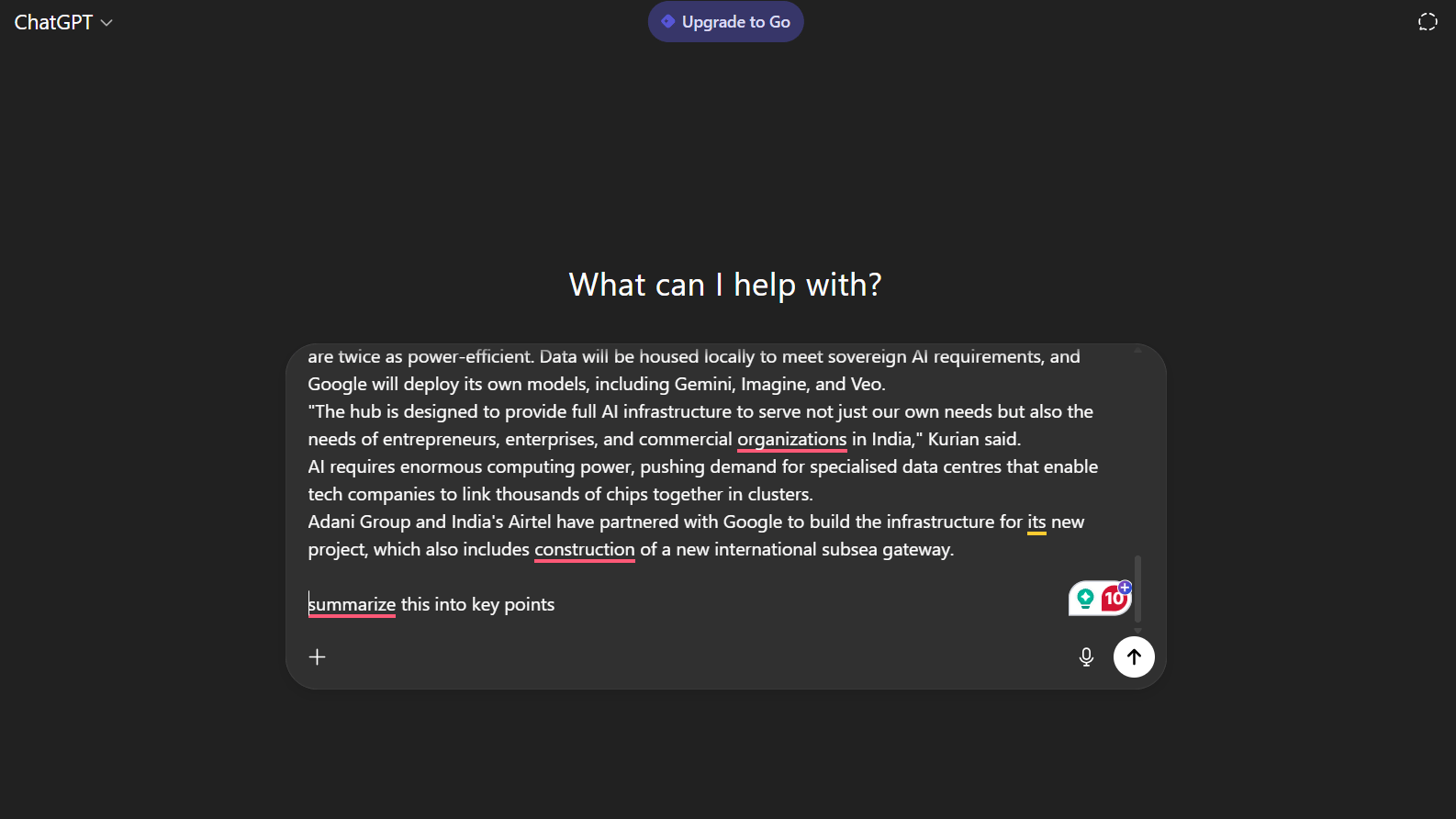

Your first real "aha!" moment with AI might have happened during a conference. Imagine a speaker mentioning an intriguing paper, and you, mid-talk, using an AI tool to query the PDF directly.

To your surprise, it pulls up the exact passage you need. From that point on, you find your stride, continually amazed by how much these tools can accelerate your work.

Let's be clear: AI isn't magic. It can't replace your critical thinking, your deep reading, or your hands-on lab work. But when you use it thoughtfully, it can make a profound difference in your productivity.

Today, you have dozens of AI tools at your fingertips, many with free accounts, and each excels in a different area. You'll find that some, like Writefull or ChatGPT, are brilliant at fine-tuning your writing: adjusting tone, tightening sentences, and improving clarity.

Others, like AskYourPDF or ChatPDF, can dissect dense scientific papers for you, pulling out key elements like the main hypothesis, sample size, or even the specific statistical methods used.

You might even start testing the same prompt across different platforms just to compare their interpretations. The variation can be surprising, and honestly, a little fun. Sometimes, you can even ask one AI to merge the results from others into a single, tidy summary.

For technical tasks, AI becomes your on-call, patient tutor.

Need to fix a command-line error or learn a new coding trick? It's there to guide you. Remember that time you needed a free C++ code editor and compiler?

A search that once took you over half an hour of sifting through forums can now be handled in seconds, with ChatGPT returning a curated list of options complete with pros and cons. That kind of time saved adds up quickly over the course of your research.

In your work, perhaps studying gene expression patterns to understand diseases like Alzheimer's, AI can revolutionize tedious processes. Mapping gene functions once meant hours of cross-referencing databases and papers.

Now, with tools like Perplexity AI or Elicit, you can get summaries that cite peer-reviewed sources in seconds. And you already know the most critical rule: you always double-check the sources.

That is non-negotiable.

Of course, you'll quickly learn that AI isn't perfect. It can sound incredibly confident while being completely wrong. It reflects the biases in its training data and, if you don't check it, can introduce subtle errors into your work.

This is why you must verify all outputs and stick to platforms that cite their sources. You have to constantly remind yourself that AI should assist your scientific method, not replace it.

Ultimately, the value you get from AI hinges on your ability to ask the right questions. Clear, well-crafted prompts yield the best answers. But it's your critical thinking, your deep reading, and your domain expertise that keep the results grounded and valid.

With curiosity and a healthy dose of skepticism, you can transform AI from a simple tool into a powerful collaborator, one that amplifies your human insight rather than replacing it.

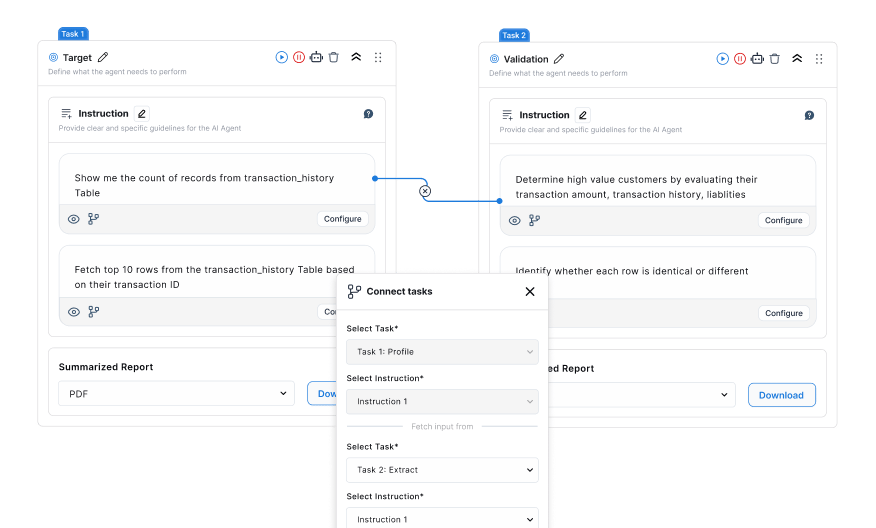

This principle of intelligent collaboration extends directly to the data that fuels your AI. The most perfectly crafted prompt is only as good as the data it acts upon. This is where DataManagement.AI becomes your essential partner.

Our platform ensures that your AI collaborator is working with a foundation of clean, governed, and trustworthy data, allowing your human insight to be amplified by reliable intelligence, not undermined by noisy or hidden data flaws.

The 10-Gigawatt Bet: OpenAI's Broadcom Pact for Next-Generation AI Tools

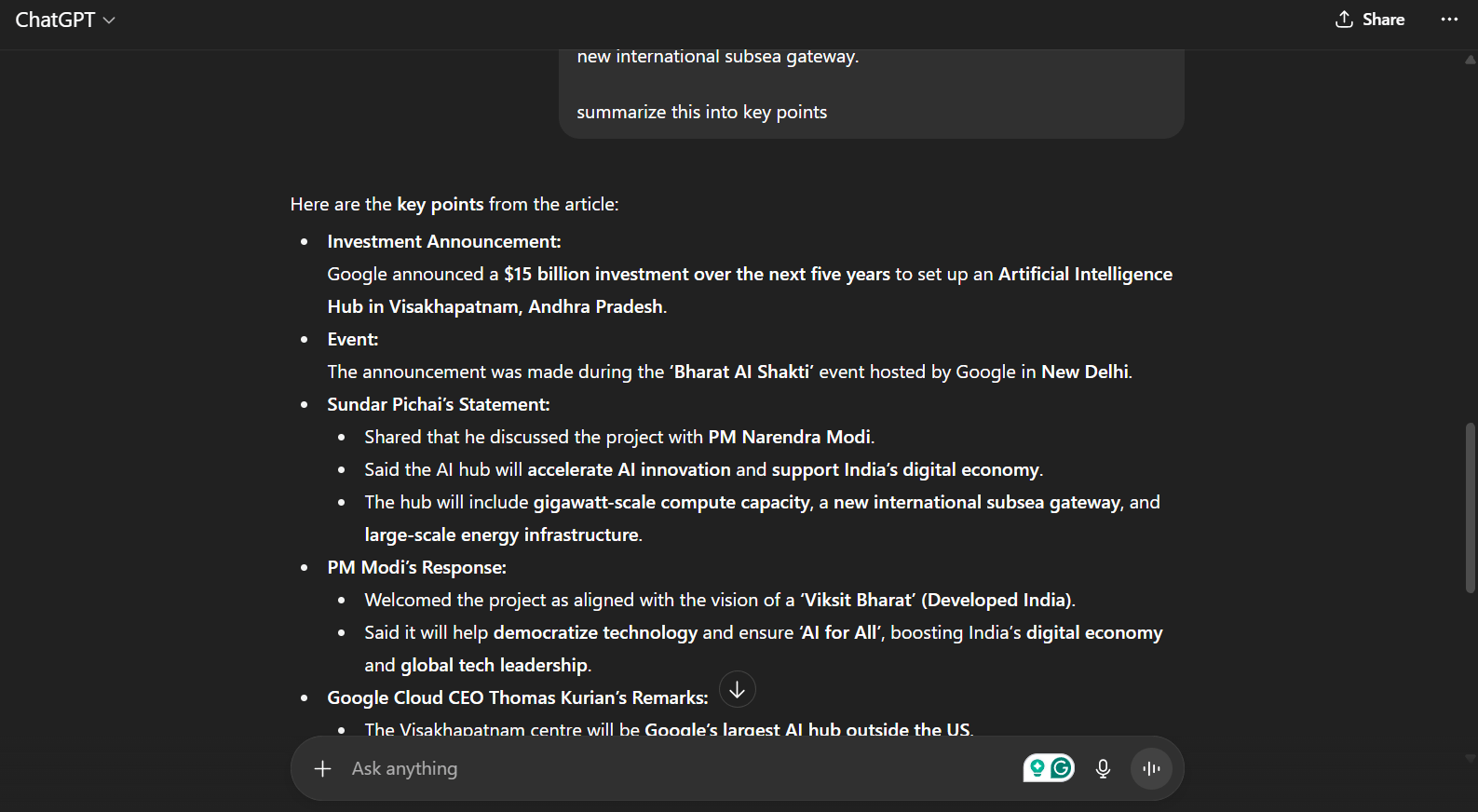

OpenAI has announced a strategic, multiyear partnership with semiconductor giant Broadcom to co-design and deploy custom AI accelerator chips.

This landmark agreement aims to add a massive 10 gigawatts of custom AI computing capacity, with deployment scheduled to begin in the second half of 2026 and be completed by the end of 2029.

This move marks a pivotal shift for OpenAI, as it seeks greater control over the fundamental hardware that powers its AI models.

The company stated that by designing its own chips, it can embed the lessons learned from developing frontier models and products such as GPT-4 and ChatGPT directly into the hardware, potentially "unlocking new levels of capability and intelligence."

OpenAI and Broadcom will co-develop systems that include accelerators and Ethernet solutions from Broadcom for scale-up and scale-out.

Learn more: brcm.tech/3ISnczP

— Broadcom (@Broadcom)

4:08 PM • Oct 13, 2025

Key Insights of the Partnership

Custom Co-Design: The AI accelerators will be designed by OpenAI and brought to life in partnership with Broadcom.

Ethernet-Centric Scaling: The new computing racks will be scaled entirely using Broadcom's Ethernet and networking solutions, moving away from more proprietary interconnects.

Global Deployment: The infrastructure will be deployed across OpenAI's own data centers and those of its partners to meet soaring global demand.

This partnership is a critical piece of OpenAI's strategy to secure the immense computational power required for its future ambitions.

Sam Altman, CEO of OpenAI, framed the partnership as "a critical step in building the infrastructure needed to unlock AI’s potential."

Hock Tan, CEO of Broadcom, expressed excitement about co-developing the "next generation accelerators and network systems to pave the way for the future of AI."

This deal with Broadcom is part of a broader, aggressive compute build-out by OpenAI.

Just last month, the company announced a separate partnership with NVIDIA, under which NVIDIA intends to invest up to $100 billion as OpenAI deploys at least 10 gigawatts of data center infrastructure, starting with NVIDIA's upcoming Vera Rubin platform.

Together, these partnerships signal that OpenAI is moving beyond relying solely on off-the-shelf hardware and is now building a bespoke, multi-vendor computational foundation to power the next decade of AI advancement.

Reflection AI Lands $2 Billion to Build an Open-Source AI Champion

In a landmark deal that signals a major shift in the AI landscape, Brooklyn-based startup Reflection AI has raised a staggering $2 billion in a funding round led by Nvidia, catapulting its valuation to $8 billion.

This represents a 15x increase in just seven months for the company, which is building what it calls "superintelligent autonomous coding agents."
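
For a sense of scale, the 15x figure can be unpacked with a few lines of Python. This is a rough sketch using only the numbers quoted above; the smooth month-over-month compounding is an assumption made purely for illustration, since real valuations change in discrete funding rounds.

```python
# Inputs taken from the article text; the helper names are illustrative.
CURRENT_VALUATION_USD = 8_000_000_000
MULTIPLE = 15
MONTHS = 7


def implied_prior_valuation(current_usd: float, multiple: float) -> float:
    """Valuation before the increase, given the current value and multiple."""
    return current_usd / multiple


def monthly_growth_factor(multiple: float, months: int) -> float:
    """Constant monthly factor that compounds to `multiple` over `months`.

    Assumes smooth compounding, which is a simplification.
    """
    return multiple ** (1 / months)


if __name__ == "__main__":
    prior = implied_prior_valuation(CURRENT_VALUATION_USD, MULTIPLE)
    factor = monthly_growth_factor(MULTIPLE, MONTHS)
    print(f"Implied earlier valuation: ${prior / 1e6:,.0f} million")
    print(f"Equivalent monthly growth: {(factor - 1) * 100:.0f}%")
```

That works out to an implied earlier valuation of roughly $533 million and, if the growth had been smooth, about 47% per month.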

Founded in early 2024 by two prominent DeepMind veterans, Reflection AI is positioning itself as a uniquely American, open-source challenger to the industry's giants like OpenAI and the rapid rise of Chinese open models like DeepSeek.

The company has assembled an elite team of about 60 researchers, many recruited from leading AI labs, to pursue its ambitious goal.

Today we're sharing the next phase of Reflection.

We're building frontier open intelligence accessible to all.

We've assembled an extraordinary AI team, built a frontier LLM training stack, and raised $2 billion.

Why Open Intelligence Matters

Technological and scientific

— Reflection AI (@reflection_ai)

3:11 PM • Oct 9, 2025

The Core Mission: An Open-Source Counterweight

Reflection AI's fundamental belief is that the future of advanced AI should not be controlled by a few corporations.

The company argues that this concentration of power stifles innovation and creates global dependency.

Its solution is not just another AI assistant, but a new class of fully autonomous agents capable of understanding, writing, and independently evolving complex software, a significant leap beyond today's coding copilots.

The Strategy and Backing

This ambitious vision is powered by what the company describes as a proprietary "frontier LLM training stack" designed to advance reinforcement learning and agent orchestration.

While technical details are limited, the promise has attracted a powerful consortium of backers, including Eric Schmidt, Citi, and 1789 Capital, with continued support from Lightspeed, Sequoia, and Zoom's Eric Yuan.

RT @achowdhery: I've spent years pushing the boundaries of pretraining—first as lead author on PaLM, then as a lead contributor on Gemini p…

— Misha Laskin (@MishaLaskin)

3:39 PM • Oct 9, 2025

With this unprecedented war chest, Reflection AI plans to scale its research aggressively, targeting a public release of its first open-source frontier language model in 2026.

This move sets the stage for a direct confrontation with the world's most powerful tech companies, but with Nvidia's backing and a clear mission to democratize frontier AI, Reflection AI has instantly become one of the most formidable and well-funded challengers in the global AI race.

Your opinion matters!

We hope you enjoyed reading this edition of our newsletter as much as we enjoyed writing it.

Share your experience and feedback with us below, because we take your critique seriously.

Thank you for reading

- Shen & the Towards AGI team