How Gen AI Search Is Lifting Customer Satisfaction Scores

Happy Customers, Better Results!

Here is what’s new in the AI world.

AI news: The End of Search Frustration

Hot Tea: The Open-Source AI Advantage for Developers

Gen AI: Gen AI to Boost Productivity 15%

OpenAI: The 1-Gigawatt AI Data Centre Plan

Gen AI Search is Driving a Customer Satisfaction Surge

In today's digital customer service landscape, speed and accuracy are paramount. Customers expect instantaneous, correct answers on the first try, and failing to meet this standard leads to dissatisfaction.

This is where AI agents come in, promising to deliver near-instant, contextually relevant responses.

Achieving this requires the ability to quickly surface information that is not just accurate but also contextually relevant. This new AI-driven imperative has made knowledge management a core strategic priority for customer experience (CX).

In fact, a recent Metrigy study found that nearly 74% of companies now have a formal, documented knowledge management strategy for CX.

Generative AI-powered search is at the heart of this shift, rapidly moving from pilot programs to full-scale deployment. An overwhelming 85.8% of organizations are either piloting or have already implemented generative AI for CX knowledge.

One of the first randomized controlled trials testing whether GenAI boosts revenue, not just productivity.

It does.

A large, mature ecommerce platform, using older GenAI tools found most of them, from customer service to marketing tools, led to large significant revenue boosts

Ethan Mollick (@emollick), Oct 15, 2025

The technology that makes this advanced search work is Retrieval-Augmented Generation (RAG).

RAG enhances large language models (LLMs) by letting them retrieve current information from internal company knowledge bases at query time, which leads to more accurate and better-informed responses. Already, 35% of companies use a RAG system, and many others plan to do so.
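To make the retrieve-then-generate idea concrete, here is a minimal Python sketch of the retrieval and augmentation steps. It is illustrative only: the three-entry `KNOWLEDGE_BASE` and the helper names (`retrieve`, `build_prompt`) are hypothetical, and a simple bag-of-words cosine similarity stands in for the embedding model and vector index a production RAG system would typically use. The final generation step, sending the assembled prompt to an LLM, is not shown.

```python
import math
import re
from collections import Counter

# Hypothetical internal knowledge base (illustrative entries only).
KNOWLEDGE_BASE = [
    "Refunds are issued to the original payment method within 5 business days.",
    "Orders can be cancelled free of charge until they ship.",
    "Premium members get free express shipping on all orders.",
]

def tokenize(text: str) -> Counter:
    """Lowercase bag-of-words term counts (a stand-in for a real embedding)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Retrieval step: return the k documents most similar to the query."""
    q = tokenize(query)
    return sorted(docs, key=lambda d: cosine(q, tokenize(d)), reverse=True)[:k]

def build_prompt(query: str, docs: list[str], k: int = 2) -> str:
    """Augmentation step: prepend the retrieved passages to the user's question."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return (
        "Answer using only the company knowledge below.\n"
        f"Knowledge:\n{context}\n\n"
        f"Question: {query}\nAnswer:"
    )

prompt = build_prompt("How long do refunds take?", KNOWLEDGE_BASE, k=1)
print(prompt)
# Generation step (not shown): send `prompt` to the LLM of your choice.
```

Grounding the prompt in retrieved passages is what lets the model answer from company knowledge rather than from its training data alone; replacing the toy scorer with real embeddings changes the ranking quality, not the overall flow.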

This technology is making a significant impact in two key areas:

For Agents: About 64% of companies provide their contact center staff with generative AI-powered search for AI-assisted guidance.

For Customers: Nearly 70% use generative AI to power virtual assistants for self-service.

The return on investment is clear and measurable. Companies tracking these initiatives report substantial improvements:

Customer satisfaction (CSAT) increased by an average of 19.7%.

Agent efficiency rose by an average of 22.1%.

First-contact resolution improved by 14.2%.

Revenue grew by an average of 11.6%.

However, successful implementation faces hurdles. The biggest challenges involve maintaining high-quality knowledge content and establishing a reliable data architecture.

This is that original MIT report that said 95% of AI pilots fail and which spooked investors across US Stockmarket.

The reports says, most companies are stuck, because 95% of GenAI pilots produce zero ROI, while a small 5% win by using systems that learn, plug into real…

Rohan Paul (@rohanpaul_ai), Aug 23, 2025

A major obstacle is that only 42% of companies have a single source of truth for their CX knowledge, with over half citing the difficulty of cleansing and standardizing their data as the primary barrier.

In conclusion, generative AI-powered search is now a critical component for CX optimization. To fully capitalize on its benefits, businesses must not only adopt the technology but also commit to the foundational discipline of robust knowledge management.

Is Open Source the True Engine of AI Innovation?

Few concepts have inspired innovation in software development like open source. This paradigm lets you participate, collaborate, create, refine, and support ideas in an open forum.

The resulting software is often more useful, versatile, and robust than its proprietary counterparts.

You've likely seen the effect of open source with Linux, Kubernetes, and Docker. It's no surprise this approach now defines AI. Tools like TensorFlow and PyTorch have long accelerated ML, and now models like Llama and Mixtral are central to the ecosystem.

This powerful open-source toolkit provides the engines and blueprints for AI, but building a truly reliable and trustworthy AI system also requires a disciplined approach to the data that fuels it.

This is where a platform like DataManagement.AI becomes essential; it acts as the critical governance and unification layer, ensuring that the data powering these open-source models is clean, consistent, and mastered, transforming raw information into a trusted asset that makes your AI initiatives more accurate and effective.

A 2025 McKinsey report found 63% of organizations use open source AI models, and you might be drawn to them for lower costs, faster development, greater customization, and freedom from vendor lock-in.

The Benefits You Can Expect

An AI model is software that uses algorithms trained on massive data sets to identify patterns and make decisions.

With open source models, you get public access to the code, parameters, and architecture, which you can freely use, modify, and redistribute.

The specific benefits for you include:

Lower Entry Costs: You can try different models and test ideas with less financial risk.

Code Integrity: You can examine the code for bugs, bias, or security flaws, ensuring greater accountability and trust.

Collective Effort: You benefit from a global community that often creates better, faster-updating models.

Flexibility: You can modify and optimize models for your specific projects and data.

Independence: You can use different models and tools without being tied to a single vendor's roadmap.

New AI report dropped, this time from Scale. To no surprise at all, Open Source is the preferred method for using generative models - even for commercial products and companies…

anton (@abacaj), Apr 18, 2023

Challenges You Must Overcome

While the benefits are significant, you need to be aware of these technical challenges:

Technical Expertise: You will need an expert team, as support is often community-based and can be incomplete.

Data Quality and Availability: You must evaluate pre-trained models for biased or poor-quality data, and you might need to collect and curate your own data for fine-tuning.

Infrastructure Resources: You must be prepared for significant computing costs, especially when scaling, whether you use cloud or on-premises systems.

Integration with Other Systems: You need to plan how the model will communicate with your existing backend systems and other AI components.

Model Integrity: You must carefully evaluate the code for vulnerabilities, as open source software can be a target for malicious actors.

Licensing: You must examine the model's license to ensure it allows for your intended business use without legal restrictions.

Popular Open Source AI Models You Can Use

There are hundreds of open source models available for various tasks. Here are some popular examples, organized by category:

Audio Models: For text-to-speech, you can use Fish Speech or Mozilla TTS. For music generation, consider Uberduck.

Chat Models: For interactive helpdesks and support, look at DeepSeek, LibreChat, or Rasa.

Code Creation Models: To assist with vibe coding, you have options like CodeGeeX, DeepSeek-Coder-V2, and PR-Agent.

Embedding Models: For classifying unstructured data, consider Qwen3-Embedding or Google's EmbeddingGemma.

Frameworks and Libraries: For core AI development, your go-to tools are TensorFlow, PyTorch, and Scikit-learn.

Image and Vision Models: For computer vision or generative tasks, explore LLaVA, OpenCV, and Stable Diffusion.

Large Language Models (LLMs): For summarization, translation, and content generation, you can use Llama 3, Mistral 7B, or Gemma 2.

Moderation Models: To add safety guardrails, consider Llama Guard 3 or Mod-Guard.

Transcription Models: For converting speech to text, you have options like Whisper, DeepSpeech, and Kaldi.

The Gen AI Productivity Boom: A 15% Lift for U.S. Labor

In a recent CNBC interview, Joseph Briggs, a senior Goldman Sachs economist, detailed the profound economic potential of generative AI, forecasting that it could increase U.S. labor productivity by 15% over the next decade.

He emphasized that while current adoption is low, with only about 10% of U.S. firms actively using AI in production, the opportunity for growth is substantial.

Briggs pointed to early evidence from enterprises already implementing AI, which are reporting productivity gains of 25-30%. These case studies demonstrate the technology's tangible potential to automate tasks and enhance efficiency.
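A 15% gain spread over a decade is smaller per year than the headline suggests. Assuming the forecast describes a cumulative increase in the level of U.S. labor productivity (the interview summary does not say how the gain is distributed over time), a short Python calculation converts it into an equivalent compound annual rate. The function name `annualized_rate` is our own.

```python
def annualized_rate(total_gain: float, years: int) -> float:
    """Convert a cumulative gain (0.15 = 15%) over `years` into a compound annual rate."""
    return (1.0 + total_gain) ** (1.0 / years) - 1.0

# Assumption: 15% is the total level increase reached after 10 years.
rate = annualized_rate(0.15, 10)
print(f"Compound: {rate:.2%} per year")    # prints "Compound: 1.41% per year"
print(f"Simple:   {0.15 / 10:.2%} per year")  # prints "Simple:   1.50% per year"
```

Compounding explains the small gap between the two figures: roughly 1.4% extra productivity growth each year is enough to reach a 15% higher level after ten years.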

This August 2024 US survey of AI use confirms that AI is not just hype:

1) AI adoption is crazy fast by historical standards

2) It obviously useful to people in many industries. 25% people use GenAI at least 60 minutes a day at work, already

3) AI is being used for many purposes

Ethan Mollick (@emollick), Sep 24, 2024

He put the massive current AI investment by Big Tech companies in context, noting that its true economic impact must be measured relative to the overall size of GDP.

The analysis also highlighted initial labor market shifts, such as a slowdown in hiring within the tech sector, indicating that AI integration is beginning to reshape workforce dynamics.

Briggs drew parallels to past technological revolutions, like the internet, suggesting AI represents a similarly foundational economic shift rather than a transient trend.

The core conclusion is that generative AI is poised to deliver a significant return on investment and drive broad-based economic expansion in the coming years.

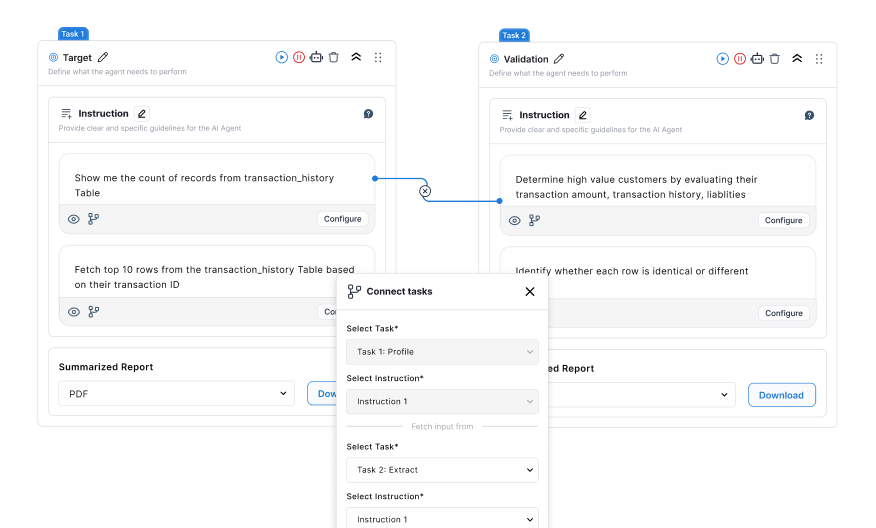

Moving from isolated AI experiments to production is the challenge TowardsMCP is built to solve. It provides the tooling and standards to connect AI models, data sources, and applications, so companies can build robust, scalable, and autonomous AI agents that contribute directly to productivity and revenue growth.

OpenAI and Oracle's 1-Gigawatt 'Stargate' Plan

OpenAI, in partnership with Oracle and developer Related Digital, has announced plans to construct a massive data-center campus exceeding 1 gigawatt in Saline Township, Michigan.

This project is a key component of their "Stargate" initiative, a broader push to significantly expand U.S. artificial intelligence infrastructure.

what Microsoft execs really think abt Oracle's AI cloud plans w/ OpenAI:

Amir Efrati (@amir), Oct 17, 2025

The multi-billion-dollar investment highlights the AI industry's immense and growing demand for computational power, fueled by the race to develop human-level or superior artificial intelligence.

While the exact cost was not disclosed, industry experts estimate that 1 GW of data-center capacity, enough electricity to power approximately 750,000 American homes, can require around $50 billion in investment.

Scheduled to break ground in early 2026, the Michigan site is one part of a larger 4.5-GW expansion plan by OpenAI and Oracle.

"Oracle’s stock jumped by 25% after being promised $60 billion a year from OpenAI, an amount of money OpenAI doesn’t earn yet, to provide cloud computing facilities that Oracle hasn’t built yet, and which will require 4.5 GW of power (the equivalent of 2.25 Hoover Dams or four…

Lance Roberts (@LanceRoberts), Oct 27, 2025

When combined with six other planned U.S. sites, this effort will raise their total planned capacity to over 8 GW, representing a staggering total investment of more than $450 billion over the next three years.

OpenAI stated this move keeps its ambitious $500 billion, 10-GW commitment ahead of schedule.
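The capacity and spending figures above can be cross-checked with simple division. This sketch uses only numbers quoted in this article; because several of them are lower bounds ("over 8 GW", "more than $450 billion"), the results are rough ratios rather than disclosed costs, and the helper name `implied_cost_per_gw` is illustrative.

```python
# Figures as quoted in the article; the $50B-per-GW number is an industry estimate.
HOMES_PER_GW = 750_000  # homes powered by roughly 1 GW, as cited

plans = {
    "Michigan plus six other planned sites": (8.0, 450.0),  # (GW, $ billions), both lower bounds
    "10-GW Stargate commitment": (10.0, 500.0),
}

def implied_cost_per_gw(capacity_gw: float, investment_bn: float) -> float:
    """Return the implied investment in $ billions per gigawatt of capacity."""
    return investment_bn / capacity_gw

for name, (gw, bn) in plans.items():
    per_gw = implied_cost_per_gw(gw, bn)
    homes = gw * HOMES_PER_GW
    print(f"{name}: ${per_gw:.2f}B per GW, about {homes:,.0f} homes' worth of power")
# Michigan plus six other planned sites: $56.25B per GW, about 6,000,000 homes' worth of power
# 10-GW Stargate commitment: $50.00B per GW, about 7,500,000 homes' worth of power
```

Both ratios fall in the same range as the roughly $50 billion per gigawatt that industry experts cite, which suggests the headline numbers are at least internally consistent.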

This announcement follows OpenAI's recent corporate restructuring, which paves the way for the ChatGPT maker to move away from its non-profit origins and potentially pursue an initial public offering (IPO) with a valuation rumored to reach up to $1 trillion.

However, these soaring valuations and massive spending commitments from AI companies have sparked concerns that the industry may be inflating into a financial bubble.

The Michigan project is expected to be developed by Related Digital and will create over 2,500 union construction jobs. Peter Hoeschele, OpenAI’s Vice President of Industrial Compute, stated that the project will cement Michigan's role as a cornerstone for building the AI infrastructure essential for the next wave of American innovation.

Journey Towards AGI

Research and advisory firm guiding industry and their partners to meaningful, high-ROI change on the journey to Artificial General Intelligence.

Know Your Inference: Maximising GenAI impact on performance and efficiency.

Model Context Protocol: Connect AI assistants to all enterprise data sources through a single interface.

Your opinion matters!

Hope you loved reading this newsletter as much as we loved writing it.

Share your experience and feedback with us below ‘cause we take your critique very critically.

How's your experience?

Thank you for reading

-Shen & Towards AGI team