GenAI Tsunami is Coming, and Cloud Teams Are on the Shore

Is Your Cloud Strategy Ready for the GenAI Revolution?

Here is what’s new in the AI world.

AI news: GenAI is Swamping Cloud Teams

Open AI: IBM’s Open-Source AI Gets ISO 42001 Nod

Open AI: Huawei’s Open-Source AI Plan Revealed

Hot Tea: Chips and Models: Samsung + OpenAI

The GenAI Trilemma Facing Cloud Teams

The long-anticipated AI revolution is no longer on the horizon; it is already placing significant strain on corporate IT infrastructure.

A recent survey of cloud and DevOps leaders reveals that generative AI workloads are projected to grow by 50% within the next one to two years, with many respondents expecting that growth to be dramatic.

This surge is establishing AI as a central enterprise workload, but a critical gap has emerged: while demand is accelerating rapidly, the preparedness of cloud teams is lagging far behind, creating immense pressure on already overstretched personnel and systems.

A primary casualty of this situation is innovation bandwidth. Nearly half of the surveyed cloud and DevOps leaders report that their teams are too consumed with maintaining existing operations to focus on new projects. This is more than a technical hurdle; it is a strategic threat.

Alibaba Cloud AI metrics: 5x computing power growth, 4x storage growth year-over-year. Half of China’s major foundation model companies and Fortune 500 firms using GenAI are on their platform.

Scale matters in the AI race.

— Poe Zhao (@poezhao0605)

5:47 AM • Sep 24, 2025

Companies risk falling behind competitively if all their resources are dedicated to routine maintenance, leaving no capacity to develop future capabilities. This pressure is keenly felt by senior executives who find their teams constantly firefighting instead of advancing strategic AI initiatives.

The problem extends to automation, where readiness is low. Only a minority of teams feel their automated systems are prepared to handle AI-driven demands.

The obstacles are not novel AI-specific issues but familiar infrastructure weaknesses that have persisted for years: unreliability, skills shortages, and scalability limits.

Generative AI acts as a magnifier for these pre-existing flaws: fragile automation will fail more quickly, and limited visibility will create more blind spots under the weight of AI workloads.

Long-standing challenges like cost management, lack of real-time visibility, and governance are also gaining new urgency. In the past, these inefficiencies led to budget overruns and delays.

In the AI era, they can bring innovation to a complete standstill. Governance, in particular, becomes a critical choke point as AI systems handle sensitive data, forcing companies to navigate a difficult path between stifling bureaucracy and reckless non-compliance.

Feel like you're playing catch up on gen AI?

Become a generative AI leader and boost your skills with our new certification and training. You don't need any experience, and the training is offered at no cost through our learning platform.

Learn more → goo.gle/47QTZ2n

— Google Cloud (@googlecloud)

3:00 PM • Sep 20, 2025

The solution, however, may lie less in new technology and more in foundational investments. When asked what would help most, leaders prioritized training and visibility over new tools or cost control.

This indicates that the core bottleneck is often the human element (a lack of expertise and clarity) rather than the technology itself.

Compounding this issue is fragmented ownership of AI tools across multiple departments, which slows decision-making. Centralizing responsibility with infrastructure teams could significantly improve agility.

Ultimately, the report underscores that success in the AI age will depend as much on operational readiness as on algorithmic sophistication.

The companies that will thrive are not necessarily those with the most advanced AI models, but those whose infrastructure teams and systems are resilient enough to withstand the coming surge.

With the pace of change accelerating, enterprises have only a matter of months to strengthen their fundamentals or risk being left behind.

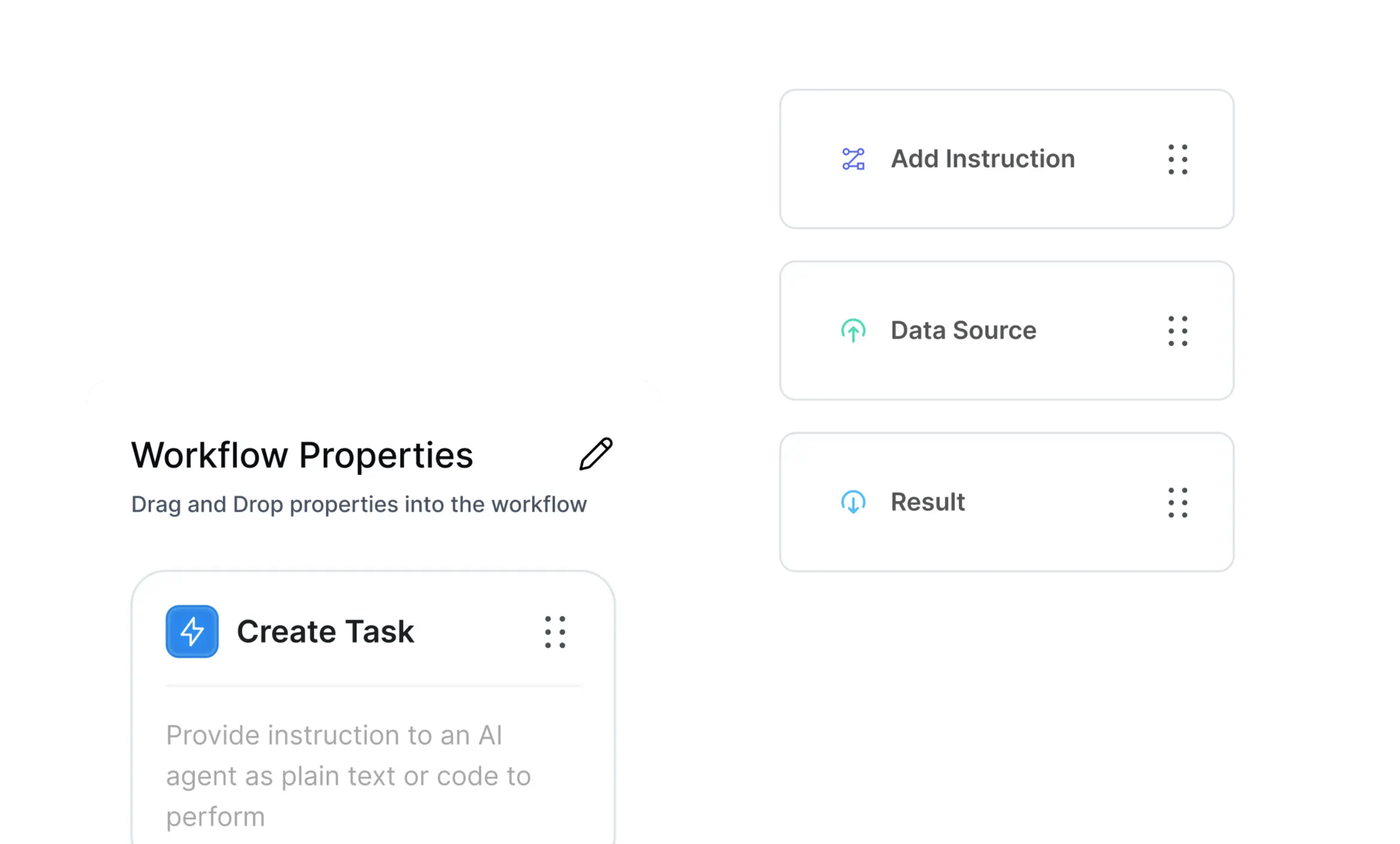

This is where DataManagement.AI provides a critical edge. We help enterprises future-proof their infrastructure by transforming chaotic data into a trusted, AI-ready resource.

Our platform automates the foundational work of data discovery, classification, and policy enforcement, freeing your stretched IT teams from manual firefighting and empowering them to focus on strategic innovation.

IBM’s Open-Source AI Model Framework Achieves ISO 42001 Certification

In 2023, the International Organization for Standardization (ISO) established the first global benchmark for artificial intelligence management with ISO/IEC 42001:2023.

This standard provides a framework for organizations to create and audit policies, processes, and safeguards for responsible AI development and deployment.

IBM has now achieved accredited certification under this standard for the AI Management System (AIMS) governing its Granite family of open-source language models. This makes IBM Granite the first major open model family to receive this certification, underscoring its commitment to open and responsible AI.

The models, which are permissively licensed, have also been highly ranked on Stanford’s Foundation Model Transparency Index for their openness.

🚨 IBM just dropped Granite 4.0 — hybrid Mamba/transformer LLMs built for enterprise efficiency.

✅ Up to 70% less RAM

✅ ISO 42001 certified & cryptographically signed

✅ Open-sourced + cheaper GPUs

Here’s why it matters 👇🧵

— Futurepedia - Learn to Leverage AI (@futurepedia_io)

2:20 AM • Oct 3, 2025

The certification, conducted by the accredited body Schellman, is significant because AI operates differently from traditional software. Unlike deterministic programs, AI models generate probabilistic outputs, introducing unique risks that must be managed throughout their lifecycle.

ISO 42001 addresses this by providing a framework for ethics, accountability, and trust, which is increasingly important amid global regulatory scrutiny.

For enterprises, this certification offers confidence that IBM has implemented robust, measurable safeguards and transparent processes. This is crucial for deploying Granite in high-stakes, regulated industries like finance and healthcare.

For the AI industry at large, IBM’s achievement sets a precedent that responsible AI can be both open and innovative.

The certification process, completed in under three months with zero non-conformities, validates IBM's mature AI governance.

This is supported by practical measures such as a rigorous Data Management Framework for training data, digital signing of models for authenticity, and stress-testing against real-world threats.

Furthermore, IBM’s Granite Guardian provides an additional layer of open-source safety models to detect risks in AI interactions.

This milestone reinforces IBM's philosophy that enterprise AI requires enterprise-grade trust, combining transparency, security, and governance to set a new standard for the industry.

Huawei Lays Out Its Strategy for Open-Source AI in Keynote Address

At the recent Huawei Connect 2025 conference, Huawei made a major commitment to open source, announcing that it will make its entire AI software stack publicly available by the end of the year.

This strategy is a direct response to past developer feedback and is designed to build a more robust ecosystem around its Ascend AI hardware.

Huawei's leadership candidly acknowledged the friction developers have faced with the Ascend platform, citing the rapid adoption of the DeepSeek-R1 model as an example of demand that pushed the hardware to its limits.

This frank admission frames the open-source push as a solution to improve usability and transparency.

The open-source plan has several key components with a firm deadline of December 31, 2025:

CANN (Compute Architecture for Neural Networks): This core software layer between AI frameworks and Ascend chips will have its compiler and virtual instruction set interfaces opened. This will give developers the visibility needed to optimize application performance, though the compiler itself may not be fully open-source.

Mind Series Toolchains: The practical SDKs, libraries, and debugging tools that developers use daily will be fully open-sourced. This will allow the community to inspect, modify, and enhance the development environment itself.

OpenPangu Foundation Models: Huawei will open-source its own foundation models, similar to Meta's Llama, providing a starting point for developers to build applications without training models from scratch.

Operating System Integration: In a flexible move, Huawei is open-sourcing its "UB OS Component," which manages supercomputer-level connections. This allows companies to integrate these capabilities into their existing Linux systems (like Ubuntu or Red Hat) as either source code or a plug-in, avoiding a forced migration to a Huawei-specific OS.

A critical part of the strategy is ensuring compatibility with popular AI frameworks like PyTorch and vLLM. This lowers the barrier for developers, allowing them to run existing code on Ascend hardware with minimal changes.

While the commitments and timeline are specific, important details remain unknown. The specific open-source licenses, which dictate how the software can be used commercially, have not been announced.

Jonathan Bryce, Executive Director of the Linux Foundation, highlights how open-source collaboration is accelerating innovation and building stronger digital ecosystems. At HUAWEI CONNECT 2025, he emphasizes Huawei's role in shared innovation and shaping an intelligent future.

— Huawei (@Huawei)

11:10 AM • Sep 22, 2025

The governance model for these new open-source projects is also unclear, leaving questions about how much influence the external community will have on their future direction.

For developers, the December 31st release will be the starting point for a crucial evaluation period. The quality of the initial code, documentation, and examples will determine whether a vibrant developer community forms around the platform or if it remains a vendor-led project.

The six months following the release will be key to assessing whether Huawei's open-source strategy successfully wins over developers and builds a competitive AI ecosystem.

The Ultimate AI Stack? Samsung's Hardware to Power OpenAI's Global Ambitions

OpenAI has entered into a broad strategic partnership with a consortium of Samsung companies, including Samsung Electronics, Samsung SDS, Samsung C&T, and Samsung Heavy Industries.

A special day in Seoul as we officially launch OpenAI Korea. With strong government support and huge growth in ChatGPT use (up 4x in the past year), Korea is entering a new chapter in its AI journey and we want to be a true partner in Korea’s AI transformation.

— Jason Kwon (@jasonkwon)

7:41 AM • Sep 12, 2025

The collaboration aims to accelerate the development of global AI data center infrastructure and explore future technologies, leveraging the combined expertise of all parties in semiconductors, cloud services, shipbuilding, and construction.

The key components of the partnership are as follows:

Samsung Electronics will act as a strategic memory partner for OpenAI, supplying advanced DRAM solutions to meet the massive projected demand for OpenAI's "Stargate" AI initiative. The company will also contribute its comprehensive semiconductor capabilities, including logic, foundry, and advanced chip packaging technologies.

Samsung SDS will collaborate with OpenAI on the design and operation of AI data centers. Furthermore, it has been appointed as a reseller of OpenAI's services in Korea, where it will provide consulting and management services to help local enterprises integrate OpenAI's models, specifically the ChatGPT Enterprise offering.

Samsung C&T and Samsung Heavy Industries will focus on an innovative area of the partnership: the joint development of floating data centers. This approach aims to overcome challenges of land scarcity and reduce cooling costs and carbon emissions. The companies will also explore related projects for floating power plants and control centers.

This landmark partnership is positioned to support South Korea's national ambition to become a top-three global AI leader. Additionally, the Samsung group is considering a wider internal rollout of ChatGPT to drive AI transformation across its own businesses.

Your opinion matters!

We hope you enjoyed reading this newsletter as much as we enjoyed writing it.

Share your experience and feedback with us, because we take your critique seriously.

Thank you for reading

-Shen & Towards AGI team