Gen AI Investment In ER&D To Jump To $4M Per Project At Scale

The Price of Transformation

Here is what’s new in the AI world.

AI news: Gen AI ER&D Costs Increase to $4M at Scale

Open AI: The World’s Fastest Open AI Model? K2 Think Says Yes.

What’s New: The Future of Cardiac Imaging

Hot Tea: OpenAI Opens Up ChatGPT with Developer Mode and MCP

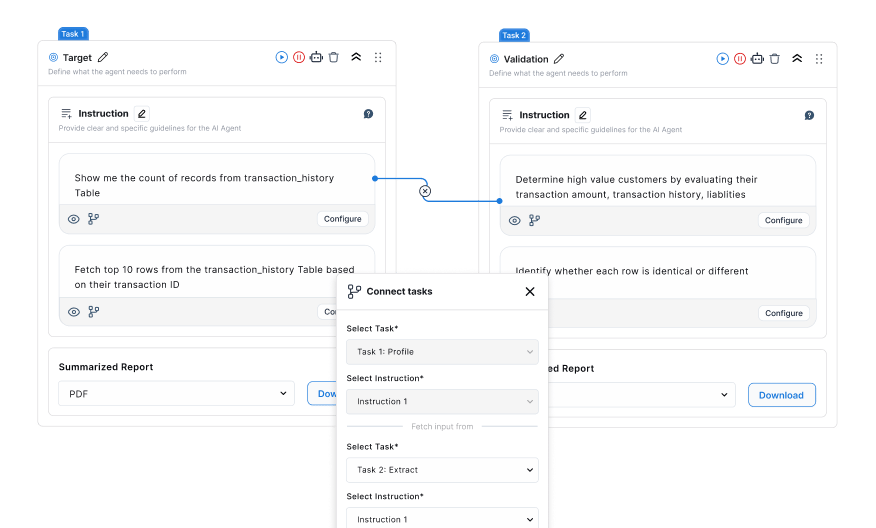

Build Without Limits: Introducing the Universal MCP Server for AI Integration

The Universal MCP Server for Enterprise is a secure, unified gateway that seamlessly connects your AI assistants to all enterprise data sources.

Adopted by Fortune 500 companies worldwide, our platform delivers intelligent, reliable access to your critical organizational data.

Key advantages include:

- 99.9% Uptime SLA for unwavering reliability
- 28+ Pre-built Connectors for rapid integration
- Enterprise-Grade Security to safeguard your data
- 24/7 Dedicated Support whenever you need it

Build a more scalable and resilient AI infrastructure with the peace of mind that comes from a trusted, enterprise-ready solution.

Why Scaled Gen AI ER&D Projects Now Cost $4M

Enterprise investment in Generative AI for engineering R&D (ER&D) is accelerating: average project spending is projected to rise from $2.6 million at the pilot stage to $4 million at full-scale production, according to a new report.

The thought leadership paper by ISG-NASSCOM, introduced at the Nasscom Design & Engineering Summit 2025, indicates that while just over 10% of enterprise applications currently use Gen AI, this figure is expected to climb to 25% by year-end.

IT budgets allocated to Gen AI are also rising, from 4% in 2024 to over 6% in 2025.

Spending is distributed across applications and SaaS (36%), personnel (25%), infrastructure (21%), and managed services (18%).
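
To make the split concrete, the sketch below applies the reported percentages to the $2.6 million and $4 million per-project figures. Applying the overall split to a single project is an assumption made purely for illustration, and the helper name `split_budget` is hypothetical.

```python
# Reported share of Gen AI spending by category (percent), as cited in the article.
SPENDING_SHARE = {
    "applications and SaaS": 36,
    "personnel": 25,
    "infrastructure": 21,
    "managed services": 18,
}


def split_budget(total_usd: int) -> dict[str, int]:
    """Allocate a project budget using the reported percentage split.

    Assumption: the split applies uniformly to a single project.
    """
    return {name: total_usd * pct // 100 for name, pct in SPENDING_SHARE.items()}


if __name__ == "__main__":
    for label, total in (("pilot", 2_600_000), ("at scale", 4_000_000)):
        print(f"{label}: ${total:,}")
        for name, amount in split_budget(total).items():
            print(f"  {name}: ${amount:,}")
```

Under this assumption, a $4 million project would put about $1.44 million into applications and SaaS and $1 million into personnel.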

Many companies are blending off-the-shelf tools with custom-built solutions, often partnering with service providers and Global Capability Centres (GCCs) in India to access specialized skills.

By 2028, 95% of enterprises will deploy GenAI in production — but which innovations deliver value?

Our Hype Cycle for GenAI reveals the models, engineering tools, and infrastructure that matter most for IT leaders to stay ahead of the curve: gtnr.it/3UAVx8P

#GartnerIT

— Gartner (@Gartner_inc)

9:04 PM • Sep 11, 2025

Efficiency continues to be the primary motivation for Gen AI adoption, with organizations already achieving more than half of their anticipated ROI.

Use cases span automated code generation, defect prediction, and simulation-led innovation across industries, including automotive, semiconductors, industrials, telecom, and healthcare.

The report emphasizes India’s strategic advantage in this transformation, supported by its strong engineering talent, diverse data resources, and government strategies such as the National AI Mission and National Semiconductor Mission.

UAE-Backed AI Model Debuts as World’s Fastest Open-Source Offering

A new open-source large language model (LLM) named K2 Think has been released. It is distinct from the similarly named Kimi K2 model from the Chinese AI lab Moonshot.

It was developed through a collaboration between the Institute of Foundation Models at Mohamed bin Zayed University of Artificial Intelligence (MBZUAI) and G42 AI, both based in the United Arab Emirates.

K2 Think is already gaining attention for its claims to be the “world’s fastest open-source AI model” and the “most advanced open-source reasoning system.”

Introducing Open Lovable v2 - an open source AI software engineer.

Just search for a website and agents will create a working clone you can build on top of.

Now powered by @firecrawl_dev search, @vercel Sandbox, and Kimi K2 on @GroqInc.

Fork the 100% open source example:

— Eric Ciarla (hiring) (@ericciarla)

3:57 PM • Sep 10, 2025

Key Features for Enterprise Consideration

High Speed with Moderate Size: Despite having only 32 billion parameters, far fewer than leading U.S. models, K2 Think delivers performance that matches or exceeds much larger models in technical domains like math, coding, and science. It reportedly generates 2,000 tokens per second per request, more than 10 times faster than typical GPU deployments.

Optimized for Complex Reasoning: Unlike general-purpose chatbots, K2 Think is designed for structured problem-solving, using step-by-step reasoning similar to that of human experts. It reportedly leads all open-source models on math benchmarks such as AIME, HMMT, and OMNI-MATH-HARD, and also posts high scores on LiveCodeBench (coding) and GPQA-Diamond (science).

Hardware-Driven Efficiency: Its speed is enabled by Cerebras’ Wafer-Scale Engine, which processes long responses (up to 32,000 tokens) in seconds, significantly faster than high-end GPUs. The model also uses speculative decoding and other optimizations to improve responsiveness.

Architectural Innovations: K2 Think incorporates six efficiency techniques: supervised fine-tuning with chain-of-thought, reinforcement learning with verifiable rewards, agentic planning, test-time scaling, speculative decoding, and Cerebras hardware optimization.

Fully Open and Commercially Permissive: Released under Apache 2.0, the model offers full transparency, including training data, weights, code, and inference tools. Enterprises can use, modify, and deploy it commercially, subject only to the license’s attribution and notice requirements.

Part of a Broader AI Strategy: The release aligns with the UAE’s goal to become a global AI leader. Built with only 2,000 specialized AI chips, the project demonstrates how resource-efficient design can compete with far larger models.

Safety and Transparency: The developers shared internal safety evaluation results across categories like content refusal, robustness, jailbreak resistance, and cybersecurity.
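
As a rough check on the speed figures above, the following sketch computes how long a maximum-length response would take at the reported throughput. The 200 tokens-per-second GPU baseline is an assumption derived from the "more than 10 times faster" claim (it is the upper bound that claim implies), not a measured figure, and `generation_seconds` is a hypothetical helper.

```python
def generation_seconds(num_tokens: int, tokens_per_second: float) -> float:
    """Time to stream num_tokens at a constant decoding rate.

    Ignores prompt processing and network latency.
    """
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return num_tokens / tokens_per_second


RESPONSE_TOKENS = 32_000  # longest response length cited in the article
K2_THINK_TPS = 2_000      # reported per-request throughput
ASSUMED_GPU_TPS = 200     # assumption: one tenth of the reported rate

if __name__ == "__main__":
    fast = generation_seconds(RESPONSE_TOKENS, K2_THINK_TPS)
    slow = generation_seconds(RESPONSE_TOKENS, ASSUMED_GPU_TPS)
    print(f"K2 Think (reported rate): {fast:.0f} s")
    print(f"GPU baseline (assumed rate): {slow:.0f} s")
```

Under these assumptions, a 32,000-token response takes about 16 seconds at the reported rate, compared with roughly 160 seconds at the assumed GPU rate.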

K2 Think challenges the prevailing idea that AI performance requires enormous scale, offering a compact, efficient, and highly capable alternative that is both open and enterprise-ready.

What the fuck just happened 🤯

UAE just dropped K2-Think world’s fastest open-source AI reasoning model and it's obliterating everything we thought we knew about AI scaling.

32 billion parameters. That's it. And this thing is matching GPT-4 level reasoning while being 20x

— Ihtesham Haider (@ihteshamit)

6:44 PM • Sep 11, 2025

The release signals a shift toward smarter, more transparent, and reproducible AI systems.

To fully leverage such advanced open models in real-world applications, enterprises need a robust data foundation that ensures seamless integration, governance, and scalability.

This is where DataManagement.AI delivers critical value, providing the secure infrastructure and intelligent workflows required to deploy, fine-tune, and maintain models like K2 Think efficiently.

With support for diverse data sources and enterprise-grade governance, DataManagement.AI enables organizations to harness cutting-edge AI while maintaining full control over their data lifecycle.

Explore how a unified data environment can amplify the impact of open AI technologies.

New Open-Source AI Tool Delivers Precise Echocardiography Analysis

A team of cardiologists has created an open-source artificial intelligence (AI) model designed to automate the evaluation of multiple key measurements from echocardiograms.

Their work, published in the Journal of the American College of Cardiology, highlights how this tool can help reduce the time-consuming and variable nature of manual echocardiographic analysis.

Echocardiography is a widely used cardiac imaging technique, but it relies heavily on expert sonographers to perform numerous precise measurements, a process prone to human variability and inefficiency.

How the electrocardiogram can identify previously undiagnosed structural heart disease with A.I. alone, better than cardiologists with A.I. support

@Nature @PierreEliasMD @timpotsMD

nature.com/articles/s4158…

— Eric Topol (@EricTopol)

3:37 PM • Jul 16, 2025

While AI has shown promise in this field, most existing models are not open-source.

The new model, named EchoNet-Measurements, uses deep learning to automatically assess 18 standard echocardiographic parameters, such as left ventricular internal diameter, right ventricular basal diameter, and ascending aorta diameter.

It was developed using over 150,000 transthoracic echocardiography (TTE) studies from Cedars-Sinai Medical Center (2011–2023) and externally validated with more than 1,000 studies from Stanford Healthcare (2013–2018).

Results showed that EchoNet-Measurements achieved high accuracy across both linear and Doppler measurements. The researchers emphasized that its performance benefited from the use of one of the largest datasets of sonographer annotations to date.

By making the model open-source, the team aims to support broader clinical adoption, enhance reproducible research, and facilitate integration into larger AI systems for comprehensive echocardiogram interpretation.

ChatGPT Developer Mode Lands with MCP

OpenAI has launched a new beta feature called Developer mode for ChatGPT, offering Plus and Pro subscribers on the web expanded control over the Model Context Protocol (MCP) client.

This mode grants read and write access to connectors, though OpenAI cautions that it is “powerful but dangerous” and intended for developers familiar with safe configuration and testing.

To enable Developer mode, navigate to Settings → Connectors → Advanced → Developer mode.

Once activated, developers can import remote MCP servers using protocols such as SSE or streaming HTTP, with optional OAuth or no authentication.

Within this mode, developers gain the ability to:

- Toggle tools on or off directly from connectors
- Refresh connector data
- Use custom prompts to call specific tools during conversations

OpenAI advises developers to be explicit in their instructions, for example, specifying exactly which tool and connector to use, to prevent ambiguity or errors.

We’ve (finally) added full support for MCP tools in ChatGPT.

In developer mode, developers can create connectors and use them in chat for write actions (not just search/fetch). Update Jira tickets, trigger Zapier workflows, or combine connectors for complex automations.

— OpenAI Developers (@OpenAIDevs)

3:59 PM • Sep 10, 2025

The company also highlights the importance of carefully sequencing tool calls, especially when multiple connectors are involved.

For actions that modify data, ChatGPT will request user confirmation. OpenAI strongly recommends reviewing JSON payloads thoroughly before approving, as incorrect write actions could accidentally alter, destroy, or share sensitive information.

The system adheres to the readOnlyHint annotation; tools without this tag are treated as write actions.
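
The rule above can be sketched as a small classifier over MCP tool descriptors. This illustrates the described behavior and is not OpenAI's implementation: `requires_confirmation` and the sample tools are hypothetical, and the descriptor shape (an `annotations` object carrying `readOnlyHint`) follows the MCP tool-annotation convention.

```python
from typing import Any


def requires_confirmation(tool: dict[str, Any]) -> bool:
    """Return True when a tool should be treated as a write action.

    A tool counts as read-only only if its annotations explicitly set
    readOnlyHint to True; a missing annotation defaults to "write".
    """
    annotations = tool.get("annotations") or {}
    return annotations.get("readOnlyHint") is not True


# Hypothetical tool descriptors, for illustration only.
tools = [
    {"name": "search_tickets", "annotations": {"readOnlyHint": True}},
    {"name": "update_ticket", "annotations": {"readOnlyHint": False}},
    {"name": "trigger_workflow"},  # no annotation: treated as a write action
]

if __name__ == "__main__":
    for tool in tools:
        kind = "write (confirm first)" if requires_confirmation(tool) else "read-only"
        print(f"{tool['name']}: {kind}")
```

Defaulting to "write" when the annotation is absent is the conservative choice: an unlabeled tool prompts for approval rather than running silently.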

Users can opt to remember their approval or denial decisions for specific tools within a conversation, though this should only be used with trusted applications.

Additionally, Developer mode offers visibility into tool inputs and outputs, supporting debugging and verification of tool behavior.

Your opinion matters!

We hope you enjoyed reading this newsletter as much as we enjoyed writing it.

Share your experience and feedback with us below, because we take your critique seriously.

Thank you for reading

-Shen & Towards AGI team