- Towards AGI


31% of Your Team Is Undermining AI Efforts, Here's Why

Employee Pushback Is Killing AI ROI.

Here is what’s new in the AI world.

AI news: Why Your GenAI Strategy Is Failing

What’s new: Fewer Hallucinations, More ROI

Open AI: China Leads in Open-Source AI

Tech Crux: I Tried the New ChatGPT Agent, and It’s…

OpenAI: UK Partners with ChatGPT-Maker OpenAI on AI Future

Hot Tea: OpenAI Targets Enterprises with $10M AI Consulting

Explore Gen Matrix Q2 2025

Uncover the latest rankings, insights, and real-world success stories in Generative AI adoption across industries.

See which organizations, startups, and innovators are leading the AI revolution and learn how measurable outcomes are reshaping business strategies.

Study Reveals 1 in 3 Employees Resist or Reject GenAI Tools

A recent survey by AI company Writer reveals significant employee resistance to generative AI adoption in workplaces, with concerning implications for implementation strategies.

31% of employees actively work against their company's AI strategy.

The number rises to 41% among Gen Z and millennial workers.

Common resistance methods include:

Manipulating performance metrics to make AI appear ineffective (10%).

Intentionally producing poor-quality AI outputs.

Refusing to use approved AI tools or complete training.

Using unauthorized AI applications (20%).

Inputting company data into public AI tools (27%).

Understanding the Motivation

Experts distinguish between malicious sabotage and legitimate concerns:

1. Job Security Fears

Employees interpret AI adoption as potential job replacement.

Some executives falsely attribute layoffs to AI efficiency rather than admitting to over-hiring.

2. Quality and Practical Concerns

Workers may avoid AI outputs due to legitimate quality issues.

Some find unauthorized tools more effective for their workflow.

3. Lack of Involvement

Employees resist when excluded from AI implementation decisions.

Many want a clearer understanding of how AI supports their roles.

“Our jobs are going to change. New tasks are going to come up.”

Our Global Head of People Development shares three GenAI skills all employees need for work. It’s about cultivating a mindset that sees GenAI as a partner.

Learn more: bit.ly/4lDp3Gl

#EngineeredToOutrun

— ABB (@ABBgroupnews)

2:58 PM • Jul 2, 2025

Industry Perspectives

Brian Jackson (Info-Tech Research Group):

"True sabotage involves deliberate harm, while quality concerns reflect professional judgment."

Anonymous Retail Analyst:

"Subtle resistance like reverting to manual processes often stems from fear, not malice."

HR Expert Patrice Williams Lindo:

"What appears as sabotage is often self-preservation in unstable work environments."

Risks for Companies:

Legal liabilities from data privacy violations.

Binding contracts or intellectual property risks from unauthorized AI use.

Reduced ROI on AI investments due to poor adoption.

Risks for Employees:

Potential civil and criminal penalties for deliberate sabotage.

Job security risks if resisting essential digital transformation.

Historical Context

Observers draw parallels to the 19th-century Luddite movement against industrial machinery.

Modern resistance takes digital forms like data manipulation rather than physical destruction.

Accenture had $1.2 billion in new bookings related to GenAI, and it has 69,000 people working in data and AI.

69,000 people who don't know anything about AI are helping the largest US companies with navigating the fast changing AI world.

One of the biggest grifts in the world.

— Rohit Mittal (@rohitdotmittal)

10:35 PM • Feb 10, 2025

Recommended Solutions

Transparent Communication

Clearly explain AI's role as a productivity tool, not a replacement.

Separate factual AI benefits from layoff rationalizations.

Inclusive Implementation

Involve employees in AI adoption planning.

Create channels for feedback and concerns.

Upskilling Pathways

Demonstrate how AI enhances rather than replaces human work.

Provide training for AI-augmented roles.

Policy and Oversight

Establish clear guidelines for approved AI use.

Implement safeguards against data misuse.

While some resistance crosses into deliberate sabotage, most pushback reflects understandable concerns about job security, work quality, and lack of involvement.

Successful AI adoption requires addressing these human factors as diligently as the technological implementation. Companies that foster trust and inclusion will see better adoption rates than those taking a purely top-down enforcement approach.

Why This Matters for Leadership

Highlights the importance of change management in digital transformation.

Reveals the risks of poor AI implementation strategies.

Provides actionable insights for smoother technology adoption.

Emphasizes the human element in technological progress.

This analysis suggests that the path to successful AI integration lies as much in addressing employee concerns as in deploying the technology itself. Organizations must balance innovation with empathy to realize AI's full potential.

My Take on the New ChatGPT Agent: Hit or Miss?

As a tech enthusiast, I’ve spent the past few days testing OpenAI’s new ChatGPT Agent, and it’s dope. Forget the old ChatGPT that just answered questions; this upgrade transforms it into a true digital assistant that can act on your behalf.

Here are the two things I loved:

1. It Doesn’t Just Talk, It Does

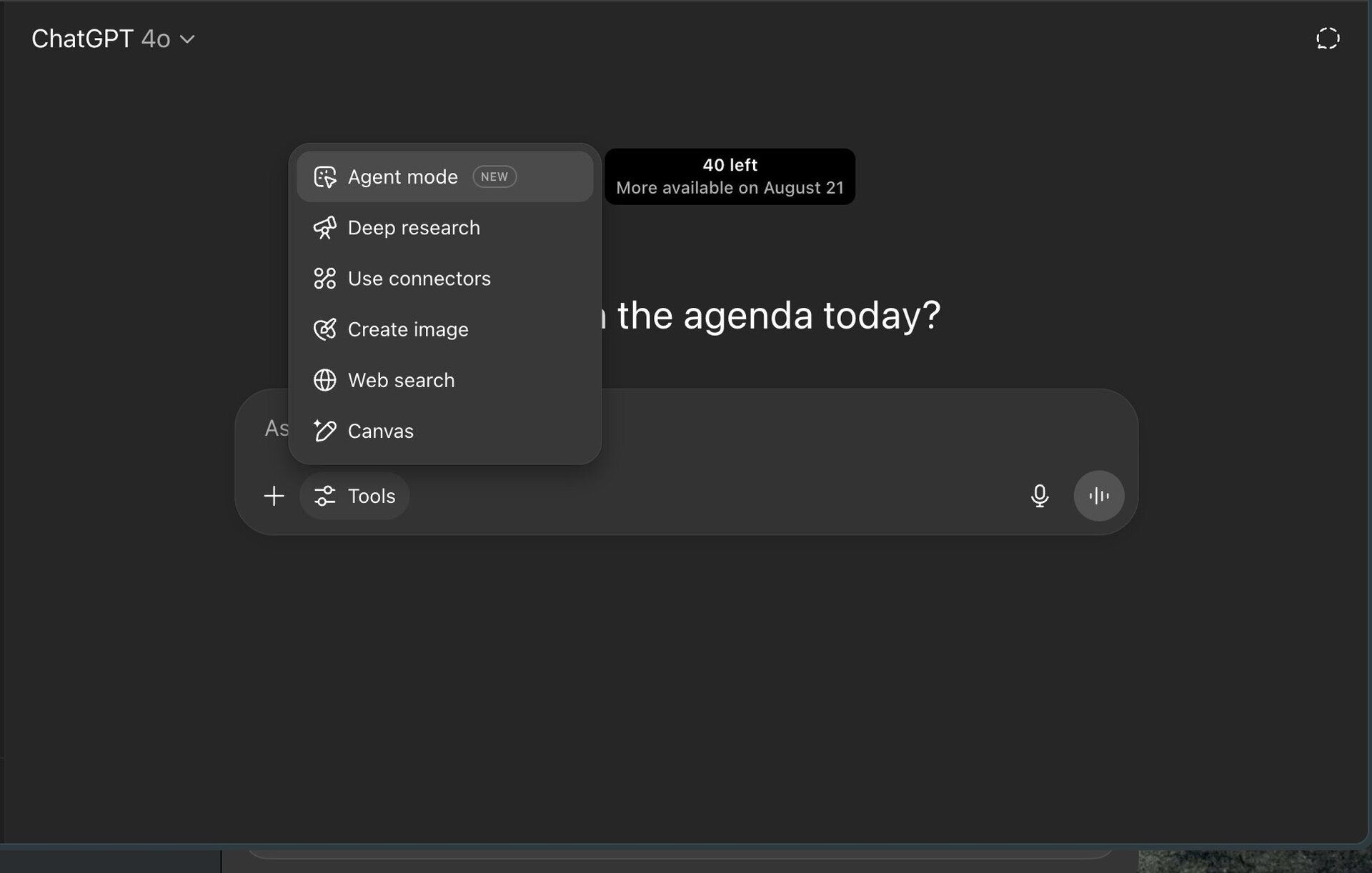

This is dark mode.

The biggest leap? ChatGPT Agent executes tasks autonomously. Need a vacation planned? A meal-prep spreadsheet? Back-to-school shopping comparisons? Instead of just suggesting ideas, it handles the legwork: browsing websites, comparing prices, and compiling checklists. I watched in real time as it:

Researched flights and hotels for a weekend trip.

Generated a grocery list with budget breakdown.

Formatted a presentation from my Google Drive notes.

2. Handles Complex Tasks Like a Pro

Tried in light mode.

As someone drowning in spreadsheets, I tested its data-crunching skills:

Analyzed a 1,000-row CSV in seconds.

Generated financial projections with Python scripts.

Formatted Excel tables better than I could manually.

OpenAI claims it outperforms humans in some benchmarks, and after testing, I believe it.

The thing I hate about it

While ChatGPT Agent is undeniably powerful, its pay-per-task pricing model raises concerns for heavy users.

No free tier means you can’t test capabilities before committing. Unlike ChatGPT’s free version, Agent mode requires a paid plan (Plus or Team at roughly $20–$25/month; Pro costs considerably more) plus task fees, a steep barrier for casual users.

The Verdict

ChatGPT Agent isn’t just an upgrade; it’s a paradigm shift. By combining GPT-4o’s intelligence with real-world tools, OpenAI has created the closest thing to a true AI assistant I’ve used.

The End of AI Hallucinations? Specialized Models Show Promise

Recent disclosures from major financial institutions like Goldman Sachs, Citigroup, and JPMorgan Chase highlight increasing apprehension about AI risks in their 2024 annual reports. These concerns span:

AI-generated hallucinations.

Employee morale impacts.

Cybersecurity vulnerabilities.

Evolving global AI regulations.

Former Federal Reserve vice chair Michael Barr has warned that competitive pressures may drive financial institutions toward overly aggressive AI adoption, potentially amplifying governance and operational risks.

We now have a benchmark for AI hallucinations, and, based on OpenAI models, 3 useful findings:

1) Larger models hallucinate less

2) If you ask models their confidence in answers, high confidence = lower chance of hallucination

3) Where accuracy is low, answers you get vary a lot

— Ethan Mollick (@emollick)

1:55 PM • Oct 31, 2024

The Hallucination Problem

Stanford University research reveals alarming error rates in AI tools used for legal work:

Error rates of up to 82% on legal queries for general-purpose tools like ChatGPT

Error rates of 17% or more even for specialized legal AI tools

The danger compounds when users act on inaccurate outputs without proper validation.

Root Causes of Hallucinations

A beautifully striking example of how AIs can hallucinate anything:

I ask ChatGPT for help in finding the chamois in the picture (there is none), so it hallucinates one, helpfully proposes to highlight it, and… it just adds a chamois to the image. 🤣

— Gro-Tsen (@gro_tsen)

12:05 PM • Jul 20, 2025

Several technical factors contribute to unreliable AI outputs:

Training Data Issues

Insufficient or low-quality pre-training data

Inadequate coverage of key terms and concepts

Model Architecture Limitations

Lack of self-awareness about knowledge gaps

Failure to indicate when responses lack reliable support

Retrieval-Augmented Generation (RAG) Challenges

Potential to distort original model knowledge

May create unnatural statistical relationships

Mitigation Strategies

To address these challenges, organizations should consider:

Focused AI Models

Developing domain-specific foundational models

Maintaining control over training data quality

Implementation Policies

Establishing clear guidelines for AI output usage

Implementing robust validation processes

Technical Safeguards

Constraining context augmentation to reinforce accurate relationships

Building interpretability into model responses
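Ethan Mollick's third finding above (where accuracy is low, answers vary a lot) suggests one cheap validation process: ask a model the same question several times and route low-agreement answers to a human. The sketch below is illustrative only. It assumes you have already collected several sampled answers as strings; the `normalize` rule, the function names, and the 0.6 threshold are hypothetical choices, not a tested standard or any vendor's API.

```python
from collections import Counter


def normalize(answer: str) -> str:
    # Crude normalization (assumption): lowercase, trim, drop a trailing period,
    # and collapse whitespace so formatting differences don't count as disagreement.
    return " ".join(answer.lower().strip().rstrip(".").split())


def agreement_score(samples: list[str]) -> tuple[str, float]:
    """Return the most common normalized answer and the fraction of samples matching it."""
    if not samples:
        raise ValueError("need at least one sampled answer")
    counts = Counter(normalize(s) for s in samples)
    top_answer, top_count = counts.most_common(1)[0]
    return top_answer, top_count / len(samples)


def needs_review(samples: list[str], threshold: float = 0.6) -> bool:
    # Low agreement across samples is treated as a warning sign of a possible hallucination.
    _, score = agreement_score(samples)
    return score < threshold


if __name__ == "__main__":
    consistent = ["Paris", "paris.", "Paris", "Paris", "Lyon"]
    scattered = ["1987", "1992", "1987", "2001", "1995"]
    print(agreement_score(consistent))  # ('paris', 0.8)
    print(needs_review(consistent), needs_review(scattered))  # False True
```

Agreement is only a proxy: a model can be consistently wrong, so this kind of check complements, rather than replaces, the domain-specific models and human validation listed above.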

As financial institutions and other enterprises navigate AI adoption, they must balance innovation with risk management.

Specialized, focused models combined with rigorous validation protocols offer a promising approach to harness AI's potential while minimizing the risks of hallucinations and unreliable outputs. The key lies in developing AI solutions that are not just powerful, but also trustworthy and aligned with specific business needs.

Chinese AI Models Secure Top Spots Among Global Developers

A new benchmarking report from UC Berkeley's LMArena platform reveals China's growing leadership in open-source artificial intelligence, with four Chinese models topping the latest performance rankings.

Top Performers

Kimi K2 (Moonshot AI)

Launched July 2025 with two open-source versions

Praised for "humorous, natural-sounding" responses

Currently the highest-rated open-source LLM

DeepSeek R1-0528

Fine-tuned version of January's breakthrough model

Excels in multi-turn conversations and complex reasoning

Matches capabilities of OpenAI's proprietary o1 model

Qwen3-235B (Alibaba)

235-billion-parameter model released April 2025

Ranked third for "raw reasoning power"

Three Qwen variants made Hugging Face's recent top 10

Global Impact

The rankings demonstrate how China's open-source strategy is closing the AI gap with the U.S., particularly through:

Full public access to model weights and source code

Rapid iteration of specialized versions

Strong performance in human-like interaction

Kimi K2 tech report just dropped!

Quick hits:

- MuonClip optimizer: stable + token-efficient pretraining at trillion-parameter scale

- 20K+ tools, real & simulated: unlocking scalable agentic data

- Joint RL with verifiable + self-critique rubric rewards: alignment that adapts

— Kimi.ai (@Kimi_Moonshot)

4:55 AM • Jul 22, 2025

Nvidia CEO Jensen Huang recently endorsed China's progress, calling these models "the world's best open reasoning systems" during his China visit. The achievement coincides with Nvidia's resumed H20 chip shipments following eased U.S. export restrictions.

China's open-source dominance challenges the traditional proprietary model approach of Western tech giants, potentially accelerating global AI development while raising new questions about intellectual property and security in the AI arms race.

With DataManagement.AI, you gain:

Seamless Data Unification – Break down silos without disruption

AI-Driven Migration – Cut costs and timelines by 50%

Future-Proof Architecture – Enable analytics, AI, and innovation at scale

Outperform competitors or fall behind. The choice is yours.

UK Partners with ChatGPT-Maker OpenAI on AI Future

Britain has entered into a strategic partnership with ChatGPT creator OpenAI to strengthen collaboration on AI security research and explore investments in the country’s AI infrastructure, including data centres, the UK government announced on Monday.

“AI will play a vital role in driving the transformation we need across the nation, whether that’s improving the NHS, removing barriers to opportunity, or boosting economic growth”.

“This progress wouldn’t be possible without companies like OpenAI, which are leading this revolution globally. This partnership will bring more of their work to the UK.”

Alongside this, OpenAI has launched a $50 million fund to support nonprofits and community organisations.

The UK government plans to invest £1 billion in computing infrastructure for AI development, aiming to increase public computing capacity by 20 times over the next five years.

With the United States, China, and India emerging as leaders in AI development, Europe is under pressure to accelerate its progress.

The UK government is not serious about regulating AI companies.

Despite the fact that OpenAI builds its products on British creators' work without permission, the UK government has announced a new partnership with them.

The announcement includes:

- More research collaborations

— Ed Newton-Rex (@ednewtonrex)

5:19 PM • Jul 21, 2025

The partnership with OpenAI, whose collaboration with Microsoft had previously drawn attention from UK regulators, could lead to the expansion of its London office and further exploration of AI applications in areas such as justice, defence, security, and education technology.

OpenAI CEO Sam Altman praised the UK government’s “AI Opportunities Action Plan,” an initiative by Prime Minister Keir Starmer to position Britain as an AI superpower.

The Labour government, facing challenges in delivering economic growth during its first year in power and lagging in polls, believes AI could boost productivity by 1.5% annually, equating to £47 billion ($63.37 billion) in added value each year over the next decade.

OpenAI’s Exclusive AI Consulting Starts at $10 Million

OpenAI has started offering highly tailored artificial intelligence (AI) services priced from $10 million, according to a report by The Information.

With early clients such as the U.S. Department of Defense and Southeast Asian super-app Grab, this move puts OpenAI in direct competition with consulting giants like Palantir and Accenture.

The company, led by Sam Altman, is now delivering customised versions of its flagship GPT-4o model for enterprise and government clients. To achieve this, OpenAI is embedding forward-deployed engineers (FDEs) directly within client organisations.

OpenAI just became the most expensive consulting company in the world.

They’re now charging $10M+ to embed teams inside Fortune 500 companies and custom-build GPT-4o into their internal systems.

It’s no longer about shipping an API and calling it a day

Now it’s teams on

— Jarren Feldman (@jarrenfeldman)

10:07 PM • Jul 16, 2025

These engineers collaborate closely with internal teams to integrate GPT-4o into proprietary systems and build bespoke solutions, such as advanced chatbots and workflow automation tools.

This shift from foundational model development to enterprise-grade AI consulting marks a major evolution in OpenAI’s business strategy. Engagements start at $10 million but can scale to hundreds of millions for multi-year, complex projects.

The programme is partly led by OpenAI researcher Aleksander Mądry, who is focused on fine-tuning large language models (LLMs) to meet client-specific goals.

According to insiders cited by The Information, OpenAI envisions delivering such high value that contracts exceeding $1 billion could eventually become standard.

As part of this expansion, OpenAI has already hired more than a dozen FDEs this year, many of whom have experience at Palantir, a company well known for providing AI solutions to defence and intelligence sectors.

AI Talent Crunch & Competition from Meta

This development comes amid notable talent losses at OpenAI. Reports suggest Meta has successfully recruited at least eight employees from OpenAI and has approached several others.

Meta is aggressively building its "Superintelligence" lab, offering lucrative packages to attract top AI experts. In response, OpenAI has reassured its workforce that they will be rewarded, while stating that it has retained its “best” talent.

Towards MCP: Pioneering Secure Collaboration in the Age of AI & Privacy

Towards MCP is a cutting-edge platform at the intersection of privacy, security, and collaborative intelligence. Specializing in Multi-Party Computation (MPC), we enable secure, decentralized data processing, allowing organizations to analyze and train AI models on encrypted data without exposing sensitive information.

Multi-Party Computation (MPC) Solutions

Privacy-preserving collaborative computing for secure data analysis.

Enabling organizations to jointly process encrypted data without exposing raw inputs.

AI & MPC Integration

Combining MPC with AI/ML to train models on decentralized, sensitive datasets (e.g., healthcare, finance).

Blockchain & Decentralized Privacy

Secure smart contracts and decentralized applications (dApps) with MPC-enhanced privacy.

Custom MPC Development

Tailored MPC protocols for industries requiring high-security data collaboration.

Research & Thought Leadership

Advancing MPC technology through open-source contributions and AGI-aligned innovation.
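To make "jointly process encrypted data without exposing raw inputs" more concrete, here is a minimal sketch of additive secret sharing, a basic building block used in many MPC protocols. It is not Towards MCP's implementation. The three-party "secure sum" scenario, the function names, and the choice of prime modulus are illustrative assumptions, and the sketch assumes honest-but-curious parties (everyone follows the protocol but may try to learn from what they see).

```python
import secrets

# All arithmetic is modulo a large prime so each individual share looks uniformly random.
PRIME = 2**127 - 1  # a Mersenne prime, chosen here purely for illustration


def share(secret: int, n_parties: int) -> list[int]:
    """Split `secret` into n additive shares that sum to it modulo PRIME."""
    shares = [secrets.randbelow(PRIME) for _ in range(n_parties - 1)]
    last = (secret - sum(shares)) % PRIME
    return shares + [last]


def reconstruct(shares: list[int]) -> int:
    """Recombine shares; any subset smaller than all n reveals nothing about the secret."""
    return sum(shares) % PRIME


def secure_sum(private_inputs: list[int]) -> int:
    n = len(private_inputs)
    # Each party splits its own input and sends share j to party j.
    all_shares = [share(x, n) for x in private_inputs]
    # Party j adds up only the shares it received; it never sees another party's raw input.
    partial_sums = [sum(all_shares[i][j] for i in range(n)) % PRIME for j in range(n)]
    # Publishing the partial sums reveals the total, not the individual inputs.
    return reconstruct(partial_sums)


if __name__ == "__main__":
    # Hypothetical example: three hospitals total their patient counts without disclosing them.
    print(secure_sum([120, 340, 95]))  # 555
```

Real MPC frameworks add multiplication protocols, protection against dishonest parties, and network transport on top of this idea; the sketch only shows why no single party needs to see the raw data to compute a joint result.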

Your opinion matters!

We hope you enjoyed reading this newsletter as much as we enjoyed writing it.

Share your experience and feedback with us below, because we take your critique seriously.

How did you like today's edition?

Thank you for reading

-Shen & Towards AGI team